Pat Patterson · @metadaddy

250 followers · 350 posts · Server fosstodon.org

@vwbusguy @hmiron Technically, it’s #BackblazeB2, which has an S3-compatible API 🤓

codeHaiku :fosstodon: · @codeHaiku

320 followers · 2637 posts · Server fosstodon.org

I have had #BackblazeB2 storage and #nextcloud for years now. This past week, I set up external storage on my Nextcloud instance by adding Backblaze B2 buckets for each user. Who needs #google or #apple native cloud storage when you can host your own for less?!

Thank you @nextcloud for providing a superior and high-utility FOSS product that keeps getting better and better.


Ewelina Krzeptowska · @ekrzeptowski

16 followers · 60 posts · Server hachyderm.io

Recap on Mastodon instance storage:

- ZFS 5400rpm HDD: too slow with hundreds of thousands of small files

- Storj: too expensive in the long run because of the segment fee ($0.0000088)

- IDrive e2: timeouts on media upload 20% of the time (object PUT took over 1 minute in some cases)

- Backblaze B2: works well and I will probably settle on it

#mastodon #storj #BackblazeB2 #backblaze #s3 #idrive #idrivee2 #zfs
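To put the segment fee in the recap above into numbers, here is a rough sketch of the arithmetic. The fee value comes from the post; that it is charged per segment per month, and that each small file occupies exactly one segment, are assumptions made here for illustration. The function name is hypothetical.

```python
from decimal import Decimal

# Fee quoted in the post. ASSUMPTION: charged per segment, per month.
SEGMENT_FEE = Decimal("0.0000088")


def monthly_segment_fee(segments: int) -> Decimal:
    """Return the monthly segment fee in dollars for a given segment count.

    ASSUMPTION: every small media file maps to exactly one segment, so the
    segment count equals the file count. Storage and egress are not included.
    """
    return SEGMENT_FEE * segments


if __name__ == "__main__":
    for files in (100_000, 500_000, 1_000_000):
        print(f"{files:>9,} files -> ${monthly_segment_fee(files):.2f} per month")
```

Under these assumptions the fee grows linearly with file count, independent of file size, which is why an instance holding many small media files feels it more than one holding a few large files.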


Pat Patterson · @metadaddy

239 followers · 312 posts · Server fosstodon.org

I spend much of my time emphasizing that #BackblazeB2 is API-compatible with Amazon S3 but, ironically, there are some compelling reasons you should look at the B2 Native CLI rather than use the AWS equivalent: https://www.backblaze.com/blog/go-wild-with-wildcards-in-backblaze-b2-command-line-tool-3-7-1/

Pat Patterson · @metadaddy

223 followers · 297 posts · Server fosstodon.org

I’ll be sharing some of my work with #edge computing in this webinar tomorrow, explaining how to minimize latency in content delivery using #Fastly Compute@Edge to route requests to #BackblazeB2 regions depending on the edge server location.

Register at https://brighttalk.com/webcast/14807/571685

Pat Patterson · @metadaddy

218 followers · 285 posts · Server fosstodon.org

Getting started with #BackblazeB2? Check out the new #Backblaze tutorial covering Java, Python and the AWS CLI. If you’ve written code against the S3 SDKs, chances are it will work with B2 - just override the endpoint.

https://www.backblaze.com/blog/build-a-cloud-storage-app-in-30-minutes/
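As a minimal sketch of what "just override the endpoint" can look like in Python: the helper below builds the keyword arguments for `boto3.client("s3", ...)` so that the client talks to B2 instead of AWS. The function names, the example region `us-west-004`, and the credential placeholders are illustrative assumptions, not taken from the tutorial; use the endpoint shown for your own bucket.

```python
def b2_s3_client_kwargs(region: str, key_id: str, application_key: str) -> dict:
    """Build keyword arguments for boto3.client("s3", ...) that target B2.

    `region` is the B2 region shown in your bucket's S3 endpoint, for
    example "us-west-004" (illustrative value).
    """
    return {
        # The only B2-specific part: point the S3 client at the B2 endpoint.
        "endpoint_url": f"https://s3.{region}.backblazeb2.com",
        # B2 application key ID and key go where AWS credentials would.
        "aws_access_key_id": key_id,
        "aws_secret_access_key": application_key,
    }


def make_b2_client(region: str, key_id: str, application_key: str):
    """Create an S3 client for B2. Requires the third-party boto3 package."""
    import boto3  # imported lazily so the helper above works without boto3

    return boto3.client("s3", **b2_s3_client_kwargs(region, key_id, application_key))
```

With a client created this way, existing S3 code such as `client.list_buckets()` or `client.upload_file(...)` should not need further changes in most cases.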

Originally posted by Backblaze / @backblaze@twitter.com: https://twitter.com/metadaddy/status/1617951807338348546#m

RT by @backblaze: Getting started with #BackblazeB2? Check out our new tutorial covering Java, Python and the AWS CLI. If you’ve written code against the S3 SDKs, chances are it will work with B2 - just override the endpoint.

Referenced link: https://hubs.ly/Q01xKdB50

Originally posted by Backblaze / @backblaze@twitter.com: https://twitter.com/backblaze/status/1616088094729445377#m

🔥Webinar Alert🔥

Don't forget to register and attend this upcoming webinar today with #Catalogic and #Backblaze!

Register now: https://hubs.ly/Q01xKdB50

#catalogic #backblaze #BackblazeB2 #ransomware #cloudstorage

Pat Patterson · @metadaddy

218 followers · 285 posts · Server fosstodon.org

@ricard @vash Looking at the Mastodon source, it looks like Mastodon deletes each media file individually, so there would be an API call per file, each with its own HTTP round trip. The S3 API (which #BackblazeB2 implements) includes a DeleteObjects operation that allows you to delete up to 1000 files in a single API call.

https://github.com/mastodon/mastodon/blob/main/app/lib/vacuum/media_attachments_vacuum.rb#L18

1/2
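To illustrate the DeleteObjects point, here is a rough Python sketch that groups keys into batches of at most 1000 and issues one call per batch rather than one per file. It is not Mastodon's code (Mastodon is written in Ruby); `delete_keys` and `batched` are hypothetical helper names, and `client` is assumed to be a boto3-style S3 client exposing `delete_objects`.

```python
from itertools import islice
from typing import Iterable, Iterator, List

# DeleteObjects accepts at most 1000 keys per request.
MAX_KEYS_PER_CALL = 1000


def batched(keys: Iterable[str], size: int = MAX_KEYS_PER_CALL) -> Iterator[List[str]]:
    """Yield successive lists of at most `size` keys."""
    it = iter(keys)
    while batch := list(islice(it, size)):
        yield batch


def delete_keys(client, bucket: str, keys: Iterable[str]) -> int:
    """Delete `keys` from `bucket` in batches and return the number of API calls.

    Per-key errors reported in each response are not inspected here; a real
    implementation would check them and retry or log as needed.
    """
    calls = 0
    for batch in batched(keys):
        client.delete_objects(
            Bucket=bucket,
            Delete={"Objects": [{"Key": key} for key in batch], "Quiet": True},
        )
        calls += 1
    return calls
```

Deleting 2500 files this way takes 3 HTTP round trips instead of 2500.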

Pat Patterson · @metadaddy

218 followers · 285 posts · Server fosstodon.org

@vito Work laptop: #Backblaze Computer Backup.

Personal laptop: Time Machine to a #Synology NAS, backed up via Synology’s Hyper Backup to #BackblazeB2.


Pat Patterson · @metadaddy

154 followers · 126 posts · Server fosstodon.org

@mo Is it possible to tweak Mastodon’s timeouts? I’ve seen a lot of people posting (tooting?) about successfully using #BackblazeB2 with Mastodon, so I’m sure it’s doable.

Pat Patterson · @metadaddy

154 followers · 126 posts · Server fosstodon.org

@blasteh @henry For sure - I’ve written a few blog posts about using Cloudflare Workers with #BackblazeB2 - for example:

https://www.backblaze.com/blog/use-a-cloudflare-worker-to-send-notifications-on-backblaze-b2-events/

Pat Patterson · @metadaddy

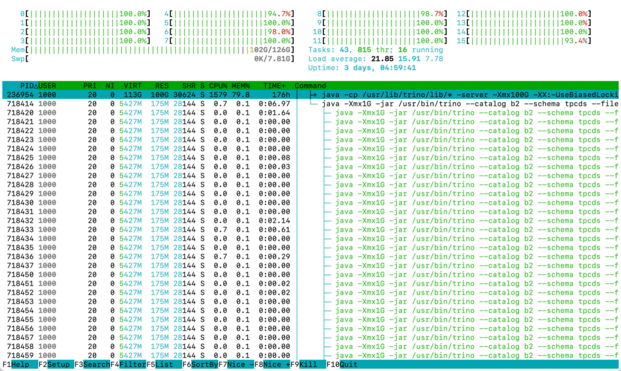

154 followers · 126 posts · Server fosstodon.org

Running an informal TPC-DS scale factor 1000 benchmark of @trinodb on a @vultr VM accessing Parquet tables stored in #BackblazeB2. Absolutely pegging all 16 CPUs!

Pat Patterson · @metadaddy

154 followers · 126 posts · Server fosstodon.org

We've been using #Trino at #Backblaze both to experiment with #BackblazeB2 as #datalake storage, and to query our #DriveStats data set. I wrote up our experience in a blog post: https://www.backblaze.com/blog/querying-a-decade-of-drive-stats-data/
