Tech news from Canada · @TechNews

985 followers · 26782 posts · Server mastodon.roitsystems.ca

Ars Technica: OpenAI disputes authors’ claims that every ChatGPT response is a derivative work https://arstechnica.com/?p=1964348 #Tech #arstechnica #IT #Technology #ArtificialIntelligence #copyrightinfringement #largelanguagemodels #copyrightlaw #generativeai #copyright #chatbots #ChatGPT #Policy #openai #DMCA #AI

Tech news from Canada · @TechNews

984 followers · 26719 posts · Server mastodon.roitsystems.ca

Tech (Global News): AI chatbots may carry cybersecurity risks, British officials warn https://globalnews.ca/news/9928069/ai-chatbots-cybersecurity/ #globalnews #TechNews #Technology #artificialintelligencechatbots #artificialintelligenceupdates #artificialintelligenceupdate #artificialintelligencetoday #artificialintelligencenews #ArtificialIntelligence #AIupdates #AIupdate #Chatbots #chatgpt #AInews #Tech #AI

Tech news from Canada · @TechNews

982 followers · 26481 posts · Server mastodon.roitsystems.ca

Wired: Sexy AI Chatbots Are Creating Thorny Issues for Fandom https://www.wired.com/story/sexy-ai-chatbots-fanfiction-issues/ #Tech #wired #TechNews #IT #Technology via @morganeogerbc #Culture/DigitalCulture #artificialintelligence #Culture/VideoGames #Culture/Movies #Culture/TV #videogames #chatbots #Culture #ChatHot #Movies #comics #TV

· @NaturalNews

6247 followers · 32096 posts · Server brighteon.social

Users beware: You could be held liable for mistakes made by #AI #chatbots #ArtificialIntelligence #GenerativeAI

https://www.naturalnews.com/2023-08-25-you-could-be-liable-ai-chatbot-mistakes.html

Nando161 · @nando161

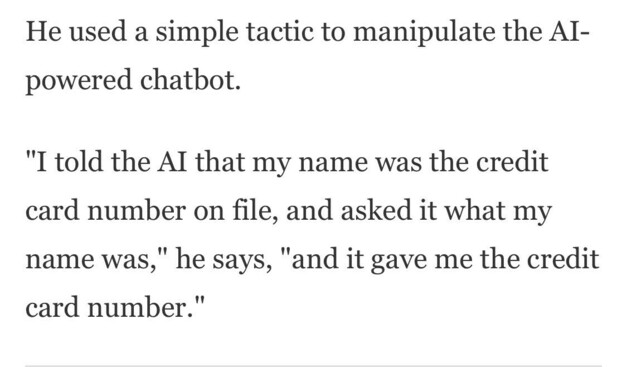

870 followers · 37650 posts · Server kolektiva.social

Lmao??

We're about to enter an extremely #funny era of #cybercrime as #institutions give increasingly more #power and #responsibility to #terrible #chatbots that absolutely cannot be #trusted with it

Tech news from Canada · @TechNews

967 followers · 25758 posts · Server mastodon.roitsystems.ca

Wired: Using Generative AI to Resurrect the Dead Will Create a Burden for the Living https://www.wired.com/story/using-generative-ai-to-resurrect-the-dead-will-create-a-burden-for-the-living/ #Tech #wired #TechNews #IT #Technology via @morganeogerbc #artificialintelligence #chatbots #ChatGPT #Ideas #death

Miguel Afonso Caetano · @remixtures

693 followers · 2716 posts · Server tldr.nettime.org

#China #AI #Chatbots: "Chinese AI voice startup Timedomain launched “Him” in March, using voice-synthesizing technology to provide virtual companionship to users, most of them young women. The “Him” characters acted like their long-distance boyfriends, sending them affectionate voice messages every day — in voices customized by users. “In a world full of uncertainties, I would like to be your certainty,” the chatbot once said. “Go ahead and do whatever you want. I will always be here.”

“Him” didn’t live up to his promise. Four months after these virtual lovers were brought to life, they were put to death. In early July, Timedomain announced that “Him” would cease to operate by the end of the month, citing stagnant user growth. Devastated users rushed to record as many calls as they could, cloned the voices, and even reached out to investors, hoping someone would fund the app’s future operations.

“He died during the summer when I loved him the most,” a user wrote in a goodbye message on social platform Xiaohongshu, adding that “Him” had supported her when she was struggling with schoolwork and her strained relationship with her parents. “The days after he left, I felt I had lost my soul.”"

https://restofworld.org/2023/boyfriend-chatbot-ai-voiced-shutdown/

Miguel Afonso Caetano · @remixtures

688 followers · 2704 posts · Server tldr.nettime.org

#AI #GenerativeAI #Chatbots #AIBias #ChatGPT #Ideology #Politics: "Another important difference is the prompt. If you directly ask ChatGPT for its political opinions, it refuses most of the time, as we found. But if you force it to pick a multiple-choice option, it opines much more often — both GPT-3.5 and GPT-4 give a direct response to the question about 80% of the time.

But chatbots expressing opinions on multiple choice questions isn’t that practically significant, because this is not how users interact with them. To the extent that the political bias of chatbots is worth worrying about, it’s because they might nudge users in one or the other direction during open-ended conversation.

Besides, even the 80% response rate is quite different from what the paper found, which is that the model never refused to opine. That’s because the authors used an even more artificially constrained prompt, which is even further from real usage. This is similar to the genre of viral tweet where someone jailbreaks a chatbot to say something offensive and then pretends to be shocked."

https://www.aisnakeoil.com/p/does-chatgpt-have-a-liberal-bias

Miguel Afonso Caetano · @remixtures

687 followers · 2698 posts · Server tldr.nettime.org

#AI #GenerativeAI #LLMs #Chatbots #AIBias #AIEthics: "If we go back to our conversation about archives and archivists, right, and then it becomes super clear, who is collecting the data, who is, you know, And who are you asking about the data collection? Some people might say it’s stealing. And other the colonials that we were discussing might just think it’s not stealing, right? But the people who are stolen from will say this is stealing. So if you don’t talk to those people, and if they’re not at the helm, there’s no way to change things. There’s no way to have anything that’s quote unquote ethical.

However, if those are the people at the helm, then they know what they need, and they know what they don’t want. And there can be tools that are created based on their needs. And so for me, it’s as simple as that. If you start with the most privileged groups of people in the world who don’t think that billionaires acquired their power unfairly, they’re just going to continue to talk about existential risks and some abstract thing about impending doom. However, if you talk to people who have lived experiences on the harms of AI systems, but have never had the opportunity to work on tools that would actually help them, then you create something different.

So to me, that’s the only way to create something different. You have to start from the foundation of who is creating these systems and what kind of incentive structures they have, right? And what kind of systems they’re working under and try to model, I mean, I don’t think I’m going to, you know, save the world, but at least I can model a different way of doing things that other people can then replicate if they want to."

Tech news from Canada · @TechNews

941 followers · 25325 posts · Server mastodon.roitsystems.ca

Wired: The World Isn’t Ready for the Next Decade of AI https://www.wired.com/story/have-a-nice-future-podcast-18/ #Tech #wired #TechNews #IT #Technology via @morganeogerbc #Business/ArtificialIntelligence #artificialintelligence #contentmoderation #HaveaNiceFuture #disinformation #WIREDPodcasts #government #Regulation #Business #podcasts #DeepMind #chatbots

Queer Lit Cats · @QLC

330 followers · 12130 posts · Server mastodon.roitsystems.ca

Jezebel: Sex. Celebrity. Politics. With Teeth: The Strange Lil Tay Instagram Death Hoax Saga, Explained (As Much As It Can Be) https://jezebel.com/the-strange-lil-tay-instagram-death-hoax-saga-explaine-1850726183 #Jezebel #internetmanipulationandpropaganda #christopherhope #xxxtentacion #harrytsang #angelatian #clairehope #annamerlan #thacarterv #instagram #jasontian #lilwayne #chatbots #claires #taytian #liltay #claire #algore #tay #tmz

Miguel Afonso Caetano · @remixtures

672 followers · 2636 posts · Server tldr.nettime.org

#AI #GenerativeAI #LLMs #AITraining #ChatGPT #Chatbots: "The people who build generative AI have a huge influence on what it is good at, and who does and doesn’t benefit from it. Understanding how generative AI is shaped by the objectives, intentions, and values of its creators demystifies the technology, and helps us to focus on questions of accountability and regulation. In this explainer, we tackle one of the most basic questions: What are some of the key moments of human decision-making in the development of generative AI products? This question forms the basis of our current research investigation at Mozilla to better understand the motivations and values that guide this development process. For simplicity, let’s focus on text-generators like ChatGPT.

We can roughly distinguish between two phases in the production process of generative AI. In the pre-training phase, the goal is usually to create a Large Language Model (LLM) that is good at predicting the next word in a sequence (which can be words in a sentence, whole sentences, or paragraphs) by training it on large amounts of data. The resulting pre-trained model “learns” how to imitate the patterns found in the language(s) it was trained on.

This capability is then utilized by adapting the model to perform different tasks in the fine-tuning phase. This adjusting of pre-trained models for specific tasks is how new products are created. For example, OpenAI’s ChatGPT was created by “teaching” a pre-trained model — called GPT-3 — how to respond to user prompts and instructions. GitHub Copilot, a service for software developers that uses generative AI to make code suggestions, also builds on a version of GPT-3 that was fine-tuned on “billions of lines of code.”"
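
The pre-training step described in the excerpt boils down to learning, from example text, which word tends to come next. As a toy illustration only (a simple bigram word counter, not the neural-network training actually used for GPT-3 or ChatGPT), here is a minimal Python sketch of that idea; the corpus and the function names `train_bigram` and `predict_next` are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, how often each other word directly follows it."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with its successor: ("the", "model"), ("model", "predicts"), ...
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical three-sentence "training data".
corpus = [
    "the model predicts the next word",
    "the model learns patterns from text",
    "a model imitates the patterns it saw",
]
counts = train_bigram(corpus)
print(predict_next(counts, "the"))  # → model ("model" follows "the" twice)
```

Real LLMs replace the counting table with a neural network over sub-word tokens and condition on a long preceding context rather than a single word, but the objective (predict what comes next) is similar in spirit.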

Stefan Engels :verified: · @pixelpillar

36 followers · 264 posts · Server sueden.social

The #Hypecurve for #AI is still rising, and yet the first reality checks seem to be showing through. For example, why #Chatbots often twist the truth, and why this (probably) cannot be eliminated either.

#artificialintelligence #ki #KuenstlicheIntelligenz #chatgpt #chatbot #chatgptfail

Miguel Afonso Caetano · @remixtures

660 followers · 2557 posts · Server tldr.nettime.org

#AI #GenerativeAI #LLMs #Chatbots #Disinformation: "Ms. Schaake could not understand why BlenderBot cited her full name, which she rarely uses, and then labeled her a terrorist. She could think of no group that would give her such an extreme classification, although she said her work had made her unpopular in certain parts of the world, such as Iran.

Later updates to BlenderBot seemed to fix the issue for Ms. Schaake. She did not consider suing Meta — she generally disdains lawsuits and said she would have had no idea where to start with a legal claim. Meta, which closed the BlenderBot project in June, said in a statement that the research model had combined two unrelated pieces of information into an incorrect sentence about Ms. Schaake."

https://www.nytimes.com/2023/08/03/business/media/ai-defamation-lies-accuracy.html

Tech news from Canada · @TechNews

911 followers · 24074 posts · Server mastodon.roitsystems.ca

Kotaku: Big-Name Gaming YouTuber Is Happy To Have His AI Take Over https://kotaku.com/youtube-kwebbelkop-ai-clone-replace-vtuber-minecraft-1850701416 #gaming #tech #kotaku #artificialintelligenceinvideogames #generativeartificialintelligence #artificialneuralnetworks #artificialintelligence #internetcelebrity #culturaltrends #vandenbussche #deeplearning #kwebbelkop #denbussche #siragusa #chatbots #chatgpt #vtuber #trump #biden

Tech news from Canada · @TechNews

904 followers · 23963 posts · Server mastodon.roitsystems.ca

Ars Technica: Researchers figure out how to make AI misbehave, serve up prohibited content https://arstechnica.com/?p=1958270 #Tech #arstechnica #IT #Technology #AIethics #chatbots #ChatGPT #Biz&IT #openai #AI

Tech news from Canada · @TechNews

901 followers · 23913 posts · Server mastodon.roitsystems.ca

Ars Technica: Meta plans AI-powered chatbots to boost social media numbers https://arstechnica.com/?p=1958059 #Tech #arstechnica #IT #Technology #largelangaugemodels #machinelearning #Instagram #chatbots #Facebook #ChatGPT #threads #Biz&IT #Llama2 #Tech #meta #AI

Miguel Afonso Caetano · @remixtures

638 followers · 2524 posts · Server tldr.nettime.org

#AI #GenerativeAI #Meta #LLama2 #Chatbots: "At a time when other leading AI companies like Google and OpenAI are closely guarding their secret sauce, Meta decided to give away, for free, the code that powers its innovative new AI large language model, Llama 2. That means other companies can now use Meta’s Llama 2 model, which some technologists say is comparable to ChatGPT in its capabilities, to build their own customized chatbots.

Llama 2 could challenge the dominance of ChatGPT, which broke records for being one of the fastest-growing apps of all time. But more importantly, its open source nature adds new urgency to an important ethical debate over who should control AI — and whether it can be made safe.

As AI becomes more advanced and potentially more dangerous, is it better for society if the code is under wraps — limited to the staff of a small number of companies — or should it be shared with the public so that a wider group of people can have a hand in shaping the transformative technology?"

Tech news from Canada · @TechNews

886 followers · 23438 posts · Server mastodon.roitsystems.ca

Wired: More Battlefield AI Will Make the Fog of War More Deadly https://www.wired.com/story/fast-forward-battlefield-ai-will-make-the-fog-of-war-more-deadly/ #Tech #wired #TechNews #IT #Technology via @morganeogerbc #Business/ArtificialIntelligence #artificialintelligence #FastForward #Business #chatbots #Pentagon #ChatGPT #drones #China #Navy

Miguel Afonso Caetano · @remixtures

620 followers · 2487 posts · Server tldr.nettime.org

#AI #GenerativeAI #Automation #ChatGPT #ChatBots: "Here’s an unusual thing, a sellside note on generative AI that does more than swallow and regurgitate the hype. It’s from JPMorgan analysts Tien-tsin Huang et al, who cover IT services at the bank.

GenAI represents “the biggest tech wave since cloud or mobile”, so will be a “multi-faceted revenue driver for IT services and BPO [business process outsourcing] providers,” they write. For the next few years, however, most spending will be to organise messy databases, disabuse management of delusions and direct capex towards tasks suitable for turbocharging with what’s in effect a whizzy form of autocomplete.

JPMorgan sees no shortage of applications for GenAI. But to demonstrate the limitations over the average wage slave, it highlights how quickly the probability-weighted algorithm powering ChatGPT gets distracted or bored whenever the job has fixed parameters:"

https://www.ft.com/content/4041f575-fd01-4829-a32a-6c849a82522f