Colin Sullender · @shiruken

175 followers · 1015 posts · Server octodon.social

Tesla has officially paused the rollout of the Full Self-Driving (FSD) Beta to new users while working to resolve issues identified by the NHTSA. The voluntary recall to address the safety concerns will be deployed as an over-the-air software update once available.

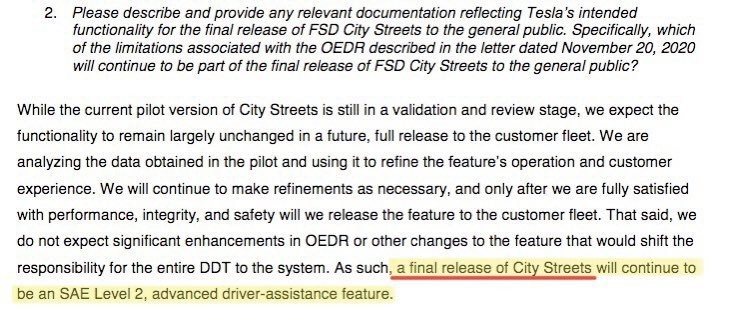

Tesla also explicitly refers to the FSD Beta as only an "SAE Level 2 driver support feature" for the first time. This description significantly differs from their [Elon Musk's] marketing claims around the product.

https://www.tesla.com/support/recall-fsd-beta-driving-operations

#Tesla #FullSelfDriving #FSD #FSDBeta #Recall #NHTSA #Safety #Car #Automobile #AutonomousDriving #ElonMusk

fly4dat · @fly4dat

195 followers · 1967 posts · Server mastodon.social

When the biggest fans start to realize that they've been conned.

$TSLA

---

RT @chazman

I'm really disappointed. Can anyone really read this and feel like we are where we thought we were? I am all in on #FSDBeta and supportive of the path we are on, but this wording sounds so "legal" that I am not sure what to expect going forward. https://www.tesla.com/support/recall-fsd-beta-driving-operations… https://twitter.com/i/web/status/16300135…

https://twitter.com/chazman/status/1630013553863163905

Adam Cook · @adamjcook

622 followers · 3989 posts · Server mastodon.social

The danger with partial automated driving systems, like #FSDBeta, is that they *appear* to be controlling the vehicle at times without human control input - but that is an illusion.

At all times, the human driver is providing the same amount of control input as if the vehicle was not equipped with any automated driving system at all.

If one wants to somehow separate the human driver from the control input, an extremely detailed safety case must be presented with a validated foundation.

Adam Cook · @adamjcook

622 followers · 3987 posts · Server mastodon.social

*“I don’t think they [#Tesla] should have released their self-driving software. I think that was a mistake,”* said Jim Farley (CEO of #Ford).

Ok. Look. This is the stuff I am talking about.

It is one thing to see the media clumsily dance around Tesla's vast #FSDBeta marketing wrongdoings, but I know that Ford has highly capable controls and Human Factors engineers who are aware of this fact...

Tesla vehicles *do not* contain any software that makes their vehicles capable of "self-driving".

Adam Cook · @adamjcook

614 followers · 3869 posts · Server mastodon.social

Lastly, consider reviewing Professor Phil Koopman's response to this NHTSA recall action (on LinkedIn) - as he touches on a few other aspects of this.

Be sure to review Professor Koopman's follow-up comments on his post as well.

Professor Koopman is one of the foremost experts in embedded systems, systems safety and automated driving systems.

Adam Cook · @adamjcook

614 followers · 3862 posts · Server mastodon.social

The NHTSA investigators performed a series of assessments in a #FSDBeta-equipped vehicle, undoubtedly in a small area or a series of relatively small areas (relative to the enormous size of FSD Beta's ODD), and made some observations of automated vehicle behavior that deviated from established roadway regulations.

That misses the point entirely.

What is *really* needed is scrutiny of Tesla's systems safety lifecycle (should they actually have one) and Safety Management System.

Adam Cook · @adamjcook

614 followers · 3861 posts · Server mastodon.social

Such "QA" processes are extremely cheap compared to safety-critical system validation - which is why #Tesla embraces them.

Ultimately, #Tesla operates under a "safety case" in the #FSDBeta program that mimics the default position of the #NHTSA - that is, why is there a need for Human Factors considerations and robust validation scrutiny when the human driver can just be blamed by everyone?

This is where this particular NHTSA recall action falls terminally short.

Adam Cook · @adamjcook

614 followers · 3859 posts · Server mastodon.social

The other core issue with the #FSDBeta program is that validation (which is very different from "QA") is not possible when the Operational Design Domain (ODD) of the automated vehicle is effectively unbounded.

Or, more accurately, Tesla has presented nothing in terms of what would undoubtedly be a revolutionary, ground-breaking safety case that describes such a validation process.

Instead, Tesla clumsily argues that "AI training" is equivalent to validation - which it is not.

Adam Cook · @adamjcook

614 followers · 3858 posts · Server mastodon.social

Building on that, *the* structural systems safety issue with #FSDBeta is that it attempts to mesh the very real limitations of an enormous pool of possible human drivers with an opaque automated driving system that *appears* unlimited in its capabilities.

A hard mismatch therefore exists.

An extremely dangerous mismatch.

#Tesla does this in an effort to provide Tesla owners with a *sense* that their vehicle is capable of "driving itself".

But these concepts are foreign to the NHTSA...

Adam Cook · @adamjcook

614 followers · 3851 posts · Server mastodon.social

So now that things have quieted down a little bit from yesterday, here are my thoughts on the #Tesla recall of their #FSDBeta product...

First things first, Tesla does not sell any vehicle that is capable of "self driving" or "driving itself" at any time.

The human driver is *always* driving.

I do wish that media publications and editors (who generally write the article titles) would cease this practice. It is harmful to public safety.

Tech news from Canada · @TechNews

277 followers · 7007 posts · Server mastodon.roitsystems.ca

Ars Technica: Tesla to recall 362,758 cars because Full Self Driving Beta is dangerous https://arstechnica.com/?p=1918268 #Tech #arstechnica #IT #Technology #fullself-driving #autopilotrecall #safetyrecall #Teslarecall #NHTSAreall #FSDBeta #nhtsa #Tesla #Cars

Adam Cook · @adamjcook

607 followers · 3759 posts · Server mastodon.social

The context for this Musk Tweet, by the way, is that a commercial that is highly critical of Tesla's #FSDBeta program will be run tonight during the #SuperBowl.

I had a suspicion that this commercial would draw Musk out and force Musk to make a very damning, self-incriminating statement.

https://www.cnn.com/2023/02/12/business/super-bowl-ad-tesla-full-self-driving/index.html

Adam Cook · @adamjcook

607 followers · 3755 posts · Server mastodon.social

At no time is any Tesla vehicle capable of "driving itself".

This statement by Musk is one of the more direct statements (that I can recall) that *entirely* undercuts the (already flimsy) systems safety arguments that #Tesla has made relative to the #FSDBeta and Autopilot programs.

Both Autopilot and FSD Beta-equipped vehicles are Level 2-capable vehicles and require an attentive human driver at all times - the same as if no automated driving system existed at all.

Adam Cook · @adamjcook

569 followers · 3231 posts · Server mastodon.social

Instead of validation, what Tesla is doing (at least per their latest "AI Day" presentation) is to treat this as a "consumer/business #AI software problem" much like #ChatGPT - and it is not surprising that Musk has been nodding at ChatGPT in the context of the #FSDBeta program lately.

But an unhandled failure in ChatGPT cannot readily kill or injure someone... so it is a totally different development ballgame.

But Tesla's development path *is* cheap - which is always a Musk priority.

Adam Cook · @adamjcook

565 followers · 3196 posts · Server mastodon.social

@niedermeyer So... the other thing here also is the fact that "safer than a human" is really ill-defined and, in fact, immaterial for these types of systems.

A continuously safe system is built upon a system that was previously deemed safe by a prior validation process.

If the design intent of #FSDBeta is to have an automated driving system in a certain Operational Design Domain (ODD) such that it does not require a human driver fallback... then a "human-based metric" is nonsense.

Adam Cook · @adamjcook

565 followers · 3192 posts · Server mastodon.social

I am not an investor in anything, but I guess Musk stated something similar to this tonight on a #Tesla earnings call...

"Musk is now saying that Tesla's FSD with Hardware 3 is (will be?) around 200% safer than a human, while the upcoming Hardware 4 will be more like 500%."

Musk constantly throws the term "safety" around like a cheap suit.

If I were ever in a position to ask him, and actually cared to, I would ask Musk how he defines "safety".

#tesla #systemssafety #FSDBeta

Adam Cook · @adamjcook

564 followers · 3141 posts · Server mastodon.social

"After all this time, #Tesla #Autopilot still doesn't allow collaborative steering and doesn't have an effective driver monitoring system," said Consumer Reports Auto Testing director Jake Fisher in a statement.

And that is amongst the least of Tesla's #SystemsSafety wrongdoings associated with this system... and we have not even broached the enormous #FSDBeta issues yet.

https://www.autonews.com/automakers-suppliers/tesla-autopilot-slips-driver-assistance-ratings

Adam Cook · @adamjcook

541 followers · 2741 posts · Server mastodon.social

“Our mission is to ensure artificial general intelligence benefits all of humanity, and we work hard to build safe and useful AI systems that limit bias and harmful content,” the [#OpenAI] spokesperson said.

It is not exactly the same, but it is curious that this statement has nearly the exact same dimensions in how #Tesla justifies its own, wholesale sloppy experimentation on the public via its #FSDBeta program.

Probably not a coincidence that Musk is a part of both organizations.

halfpress · @halfpress

37 followers · 36 posts · Server mastodon.social

I think my Tesla #FSDBeta experience of late could best be described as a whiplash combination of Mr. Toad’s Wild Ride and Mr. Toad Soils Himself with periods of Mr. Toad Sorta Gets The Job Done in between. To say it’s nowhere close to ready for prime time is a vast understatement.

Adam Cook · @adamjcook

471 followers · 2042 posts · Server mastodon.social

From @KenKlippenstein reporting for @theintercept on Twitter...

"I obtained surveillance footage of the self-driving #Tesla that abruptly stopped on the Bay Bridge, resulting in an eight-vehicle crash that injured 9 people including a 2 yr old child just hours after Musk announced the self-driving feature."

Full story: https://theintercept.com/2023/01/10/tesla-crash-footage-autopilot/