Fred Hebert · @mononcqc

Cool #paper for this week, Ben Lupton and Richard Warren's "Managing Without Blame? Insights from the Philosophy of Blame" at https://link.springer.com/article/10.1007/s10551-016-3276-6

They look at no-blame approaches, contrast them with at least 4 broad philosophical conceptualizations of blame, and then suggest a better alternative to blamelessness: one that builds on more careful forms of blame within communities of practice.

Notes at https://ferd.ca/notes/paper-managing-without-blame.html & https://cohost.org/mononcqc/post/2256727-paper-managing-with

Fred Hebert · @mononcqc

Dug out my older notes on Gary Klein's Anticipatory Thinking #paper — https://www.researchgate.net/publication/228953044_Anticipatory_Thinking

The paper looks at what is described as "gambling with your attention", in multiple variants: pattern matching, trajectory tracking, and convergence. It then covers the problems and obstacles that keep these from working well, with suggested workarounds for individuals and organizations.

Notes at https://ferd.ca/notes/paper-anticipatory-thinking.html & https://cohost.org/mononcqc/post/2186632-paper-anticipatory

Fred Hebert · @mononcqc

@deliverator I’ve expanded on that latter quote in a blog post for the #LearningFromIncidents community at https://www.learningfromincidents.io/posts/carrots-sticks-and-making-whings-worse — you may find that one interesting

juno suárez · @juno

Found this in an old technology and society book, in a footnote by Madeleine Akrich:

Clint "SpamapS" Byrum · @SpamapS

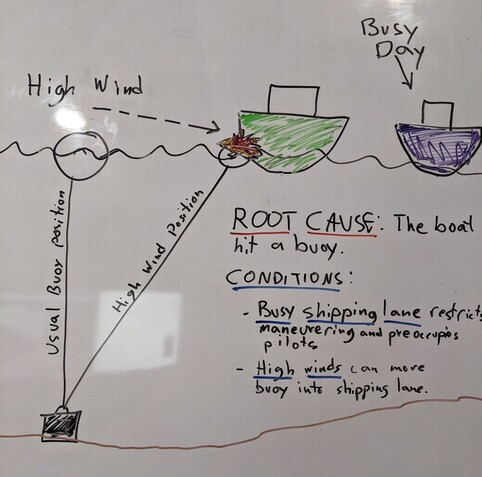

Inspired by Dr. Ivan Pupulidy's talk at #LFIConf23 about moving gracefully from compliance to learning. If you only talk about #RootCause when reviewing incidents you will likely miss opportunities to learn. What is normal? What is exceptional? How do experts know which one is at play? #LearningFromIncidents #WhiteboardBackground


Fred Hebert · @mononcqc

A discussion in the #LearningFromIncidents slack had me quickly pull up my notes from Unruly Bodies of Code in Time by Marisa Leavitt Cohn:

https://www.jstor.org/stable/j.ctv1xcxr3n.14#metadata_info_tab_contents

The chapter covers sample stories from her ethnographic work, done by embedding herself in the software development teams at NASA's Jet Propulsion Laboratory (JPL) responsible for the Cassini mission. She reviews what maintainability means to them.

Notes at https://ferd.ca/notes/paper-unruly-bodies-of-code-in-time.html & https://cohost.org/mononcqc/post/1840750-paper-unruly-bodies

Fred Hebert · @mononcqc

Huh, #LearningFromIncidents folks just shared a link to https://metrist.io/blog/the-data-behind-delayed-status-page-updates/

I, for one, believe each of our pageable alerts should also page all of our customers so they have the freshest information available at all times.

What do you mean that's a terrible idea? It's the most automated of all solutions! Could it be that time-to-customer-notification isn't that useful of a signal?

Fred Hebert · @mononcqc

Today's #paper was Accident Report Interpretation by Derek Heraghty: https://www.mdpi.com/2313-576X/4/4/46/htm

He takes a linear, fact-centric accident report from a construction site and uses its investigation data to write two other reports: one based on a systems analysis, and one that publishes the stories told by workers.

He then compares the fixes suggested by various test groups for each version, to show the impact of framing.

Notes at https://cohost.org/mononcqc/post/1591376-paper-accident-repo

Fred Hebert · @mononcqc

Today's #paper was long overdue: Lisanne Bainbridge's Ironies of Automation (https://ckrybus.com/static/papers/Bainbridge_1983_Automatica.pdf)

The core thesis is that automated systems always end up being human-machine systems, and even as you automate more and more, human factors keep being of critical importance.

Two requirements fundamentally clash with automation: someone needs to monitor whether it behaves correctly, and someone needs to take over when it does not.

Notes at https://cohost.org/mononcqc/post/1376487-paper-ironies-of-au

Fred Hebert · @mononcqc

Fetched and transferred my old notes on Richard Cook & David Woods' "Distancing Through Differencing" #paper https://www.researchgate.net/publication/292504703_Distancing_Through_Differencing_An_Obstacle_to_Organizational_Learning_Following_Accidents

In this one, they point out that very local incident investigation reports, and audiences who over-emphasize the differences between worksites, can end up ignoring potentially useful lessons that could apply to them, even in organizations with strong safety cultures.

Notes at: https://cohost.org/mononcqc/post/1321331-paper-distancing-th

#paper #LearningFromIncidents #ResilienceEngineering

Fred Hebert · @mononcqc

This week's #paper is "Nine Steps to Move Forward from Error" by Woods and Cook. It states 9 steps and 8 maxims (with 8 corollaries) to provide ways in which organizations and systems can constructively respond to failure, rather than getting stuck on concepts such as "human error."

https://www.researchgate.net/publication/226450254_Nine_Steps_to_Move_Forward_from_Error

It's a sort of quick overview of a lot of the content from both authors.

Notes at: https://cohost.org/mononcqc/post/1235221-paper-nine-steps-to

#paper #ResilienceEngineering #LearningFromIncidents

Fred Hebert · @mononcqc

A work discussion had me dig up my notes on one of my favorite texts, "On People and Computers in JCSs at Work", Chapter 11 of the book Joint Cognitive Systems: Patterns in Cognitive Systems Engineering by David Woods.

It explains the concept of the "context gap" from #cybernetics and why humans and computers do balancing work in a joint alliance, rather than a strict separation of concerns.

Notes at https://cohost.org/mononcqc/post/1157774-paper-on-people-and

#cybernetics #LearningFromIncidents #ResilienceEngineering

Fred Hebert · @mononcqc

I decided to revisit Richard Cook's paper titled "Those found responsible have been sacked: Some observations on the usefulness of error".

The paper argues that "human error" is not useful in investigations, but is useful to organizations as a whole: it limits liability, provides an illusion of control, and lets them distance themselves from incidents. To observers, it is a sign of a failed investigation.

Notes at https://cohost.org/mononcqc/post/1127828-paper-those-found-r

Fred Hebert · @mononcqc

Digging up some older notes for a #paper this week:

When mental models go wrong. Co-occurrences in dynamic, critical systems by Denis Besnard: https://hal.archives-ouvertes.fr/docs/00/69/18/13/PDF/Besnard-Greathead-Baxter-2004--Mental-models-go-wrong.pdf

This paper looks at a pattern found in many incidents, where someone's mental model and understanding of a situation are wrong and they end up repeatedly ignoring cues and events that contradict it. It then looks at what causes this even when people are actually trying to do a good job.

Notes at https://cohost.org/mononcqc/post/1097297-paper-when-mental-m

Tim Nicholas · @tim

Well, the journey begins. 48 hours earlier than initially planned (in anticipation of Cyclone Gabrielle travel disruption) but I’m on my way to Denver for #LFIcon23 #LearningFromIncidents


Fred Hebert · @mononcqc

Re-posting some old notes I had on a #paper by Sidney Dekker: "Failure to adapt or adaptations that fail: contrasting models on procedure and safety"

The paper argues that deviating from procedures can be a source of errors, but also of success; preventing all deviation can be as risky as tolerating all of it. Knowing when to adapt is a skill worth training in people, and the gap between procedures and practice is worth monitoring.

Notes at https://cohost.org/mononcqc/post/1002599-paper-failure-to-ad

#paper #LearningFromIncidents #ResilienceEngineering

Fred Hebert · @mononcqc

This week I decided to revisit Sidney Dekker's #paper titled "MABA-MABA or Abracadabra? Progress on Human–Automation Co-ordination", which discusses something called "the substitution myth", a misguided attempt at replacing human weaknesses with automation.

Instead, the suggestion is to focus on cooperation and teamwork rather than substitution.

My notes are at: https://cohost.org/mononcqc/post/960352-paper-maba-maba-or

#paper #LearningFromIncidents #humanfactors

Fred Hebert · @mononcqc

Ended up writing about how we (@honeycombio) run incident response: dealing with the unknown, limited cognitive bandwidth, coordination patterns, psychological safety, and feeding information back into the organization.

https://thenewstack.io/how-we-manage-incident-response-at-honeycomb/

#sre #ResilienceEngineering #LearningFromIncidents

Fred Hebert · @mononcqc

This week's #paper: Richard Cook and Jens Rasmussen's "Going Solid": https://qualitysafety.bmj.com/content/qhc/14/2/130.full.pdf

The paper describes how loosely coupled systems can saturate and then become tightly coupled, and situates this within Rasmussen's Drift Model for accidents to frame the risks of reaching these points. It also suggests that a better understanding of your operating point can help improve safety.

Notes at https://cohost.org/mononcqc/post/888958-paper-going-solid

#paper #ResilienceEngineering #LearningFromIncidents

Fred Hebert · @mononcqc

Post I wrote on @honeycombio, on why counting incidents is not a useful target (though a possibly useful signal).

Your objectives should be things you can do, not events you wish would not happen.

You hope that forest fires don’t happen, but there’s only so much that prevention can do. Likewise with incidents. You want to know that your response is adequate. And you want to have a systemic perspective that's actually useful in guiding work.