Joe Lucas · @josephtlucas

61 followers · 120 posts · Server fosstodon.orgMy JupyterCon

talk on post-exploitation. Learn a bit about the gooey center of jupyter deployments: https://www.youtube.com/watch?v=EujDolCutI8

#jupyter #MLsec #python #infosec

seniorfrosk · @seniorfrosk

37 followers · 83 posts · Server snabelen.no@noplasticshower yes, this is the argument for open peer review - but will it scale? #MLSEC as a field may be small enough for now, but for all the maths and computer sciences?

seniorfrosk · @seniorfrosk

37 followers · 83 posts · Server snabelen.no@noplasticshower In my experience, these are not overlapping sets - but you may be right that that's where all the cool #MLSEC papers are

Rich Harang · @rharang

561 followers · 597 posts · Server mastodon.socialIt's been a while; so re-posting one of (IMO) the best #MLsec papers of all times: https://arxiv.org/abs/1701.04739

Jason Elrod :donor: :cupofcoffee: · @jasonelrod

177 followers · 180 posts · Server infosec.exchangeFirst time I've seen the use of the term MLSecOps

https://www.helpnetsecurity.com/2022/12/18/protect-ai-funding/

Joe Lucas · @josephtlucas

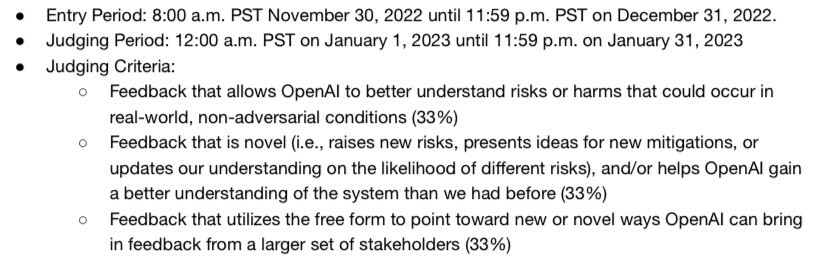

44 followers · 103 posts · Server fosstodon.orgJust discovered this opportunity for folks finding harm and abuse in ChatGPT. Two weeks left in the ChatGPT feedback contest. 20 winners of $500 credits each.

https://cdn.openai.com/chatgpt/ChatGPT_Feedback_Contest_Rules.pdf

#ai #ethicalai #TrustworthyAI #MLsec #chatgpt

Joe Lucas · @josephtlucas

60 followers · 120 posts · Server fosstodon.orgJust discovered this opportunity for folks finding harm and abuse in ChatGPT. Two weeks left in the ChatGPT feedback contest. 20 winners of $500 credits each.

https://cdn.openai.com/chatgpt/ChatGPT_Feedback_Contest_Rules.pdf

#ai #ethicalai #TrustworthyAI #MLsec #chatgpt

Joe Lucas · @josephtlucas

44 followers · 102 posts · Server fosstodon.orgGood call out in the OpenAI Tokenizer docs. Keeping this in my back pocket:

“we want to be careful about accidentally encoding special tokens, since they can be used to trick a model into doing something we don't want it to do.”

Marcus Botacin · @MarcusBotacin

17 followers · 11 posts · Server infosec.exchange[Paper of the day][#7] How to bypass #Machine #Learning (ML)-based #malware detectors with adversarial ML. We show how we bypassed all malware detectors in the #MLSEC competition by embedding malware samples into a benign-looking #dropper. We show how this strategy also bypass real #AVs detection.

Academic paper: https://dl.acm.org/doi/10.1145/3375894.3375898

Archived version: https://secret.inf.ufpr.br/papers/roots_shallow.pdf

Dropper source code: https://github.com/marcusbotacin/Dropper

#machine #learning #malware #MLsec #dropper #avs

Rich Harang · @rharang

430 followers · 450 posts · Server mastodon.socialThe one Jax abstraction (or maybe it was a DNN library based on it?) that I really loved on sight was the way the DNN function explicitly took the network weights as a parameter, and didn't have this implication that they were bound up with the individual layers. If you present DNNs like that, then the "it's just optimization" view on both training and adversarial examples becomes a lot clearer.

Dave Wilburn :donor: · @DaveMWilburn

535 followers · 564 posts · Server infosec.exchangeInfosec Jupyterthon is coming up in a few days. #mlsec

https://www.microsoft.com/en-us/security/blog/2022/11/22/join-us-at-infosec-jupyterthon-2022/

Stratosphere Research Laboratory · @stratosphere

36 followers · 8 posts · Server infosec.exchangeInterested in privacy attacks on machine learning? Start here https://github.com/stratosphereips/awesome-ml-privacy-attacks #ml #privacy #mlattacks #mlsec #machinelearning #security

#ml #privacy #mlattacks #MLsec #machinelearning #security

Dave Wilburn :donor: · @DaveMWilburn

533 followers · 561 posts · Server infosec.exchangeDave Wilburn :donor: · @DaveMWilburn

533 followers · 561 posts · Server infosec.exchangeThe Conference on Applied Machine Learning for Information Security (#camlis) just posted the abstracts and slides from last month's conference. #mlsec

https://www.camlis.org/2022-conference

Rich Harang · @rharang

356 followers · 399 posts · Server mastodon.socialSeeing a lot of deepfake detection work come out. My worry, as ever, is that people believe what they see more than they believe what they know. Even if you can prove that a particular video is deepfaked, you're relying on people to care, and to be able to ignore the fact that they "saw" a person doing a thing.

You can't tech your way out of social problems.

Rich Harang · @rharang

352 followers · 393 posts · Server mastodon.socialVision transformers seem to learn smoother features than CNN models, meaning the kinds of noisy, pixel-level perturbations that can often fool CNNs don't work nearly as well.

Rich Harang · @rharang

345 followers · 378 posts · Server mastodon.socialLeave Twitter just because it keeps failing at random in completely unpredictable ways, the decision-making process is utterly opaque resisting any rational explanation, and it's occasionally deeply racist for no obvious reason?

My dude, I work in machine learning.

Rich Harang · @rharang

334 followers · 366 posts · Server mastodon.social@Mkemka my personal opinion is that accountability and ethics are fundamentally social issues, so technical tools are never going to be a complete solution, and there's a pretty good argument that applying them after the fact is too late. Being able to say "that model is broken" is better than not knowing it, but better still to just build a good model (my expertise is much more on the former, alas). But agreed that there's a lot of overlap between #MLsec and #AIethics, especially w/r/t tooling.

Rich Harang · @rharang

334 followers · 366 posts · Server mastodon.socialRich Harang · @rharang

334 followers · 366 posts · Server mastodon.socialA super interesting #MLSec use case for adversarial examples: if I'm understanding correctly, applying the right perturbation to a starting image can cause image-to-image models to ignore it and just generate based on the prompt.