antonio vergari · @nolovedeeplearning

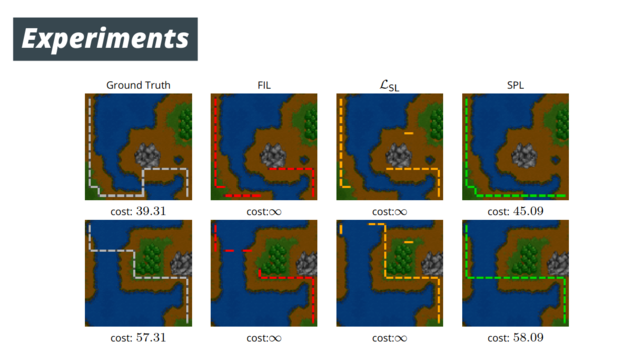

345 followers · 164 posts · Server mastodon.social

From #routing to #hierarchical multi-label classification and user #preference learning, SPLs outperform other baselines that relax constraints or use problem-specific architectures.

Even when they predict the wrong labels, they still output a valid configuration!

Join Kareem Ahmed, Stefano Teso, Kai-wei Chang, @guy and me at #NeurIPS2022 to talk about #SPLs and how to make #neural #nets behave the way we #expect them to!

📜https://openreview.net/forum?id=o-mxIWAY1T8

🖥️https://github.com/KareemYousrii/SPL

6/6


antonio vergari · @nolovedeeplearning

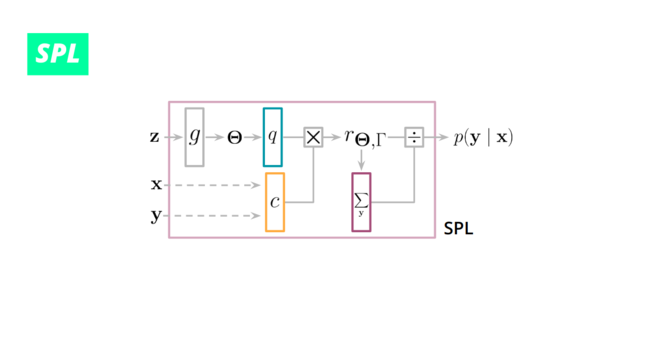

Our #Semantic #Probabilistic #Layers (#SPLs), instead, always guarantee that predictions satisfy the injected constraints, 100% of the time!

They can readily be used in deep nets, as they can be trained with #backprop by #maximum #likelihood #estimation.

4/

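To make the guarantee in post 4/ concrete, here is a minimal Python sketch under loudly stated assumptions; it is not the SPL architecture from the linked paper. It assumes a hypothetical 3-label hierarchy (labels `(animal, dog, cat)`, where "dog" or "cat" each imply "animal"), a log-linear score over the labels, and brute-force enumeration of the valid label configurations, which only scales to a handful of labels. All names (`satisfies`, `VALID`, `log_probs`, `predict`, `nll_and_grad`) are illustrative. Because probability mass is normalized only over configurations that satisfy the constraint, the most likely prediction is valid by construction, and the negative log-likelihood has a simple gradient (expected labels minus target labels) that could be passed to backprop.

```python
import itertools
import math

# Hypothetical toy constraint (an assumption for illustration only):
# labels are (animal, dog, cat), and "dog" or "cat" each imply "animal".
def satisfies(y):
    animal, dog, cat = y
    return (not dog or animal) and (not cat or animal)

# Brute-force support: every label assignment that satisfies the constraint.
# This enumeration stands in for the tractable machinery a real layer needs.
VALID = [y for y in itertools.product((0, 1), repeat=3) if satisfies(y)]

def log_probs(theta):
    """Log-probability of each valid configuration under a log-linear model
    with score sum_i theta[i] * y[i], normalized only over VALID."""
    scores = [sum(t * yi for t, yi in zip(theta, y)) for y in VALID]
    m = max(scores)  # log-sum-exp shift for numerical stability
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return {y: s - log_z for y, s in zip(VALID, scores)}

def predict(theta):
    """Most likely configuration; it is valid because only VALID has mass."""
    lp = log_probs(theta)
    return max(lp, key=lp.get)

def nll_and_grad(theta, target):
    """Negative log-likelihood of a valid target and its gradient w.r.t. theta.
    For this log-linear model the gradient is E_p[y] - target."""
    lp = log_probs(theta)
    expected = [sum(math.exp(lp[y]) * y[i] for y in VALID)
                for i in range(len(theta))]
    return -lp[target], [e - t for e, t in zip(expected, target)]

# Scores that favour "dog" but not "animal": per-label thresholding at 0
# would output (0, 1, 0), which violates the hierarchy.
theta = [-2.0, 3.0, -1.0]
print(predict(theta))  # → (1, 1, 0)

# A few steps of gradient descent on the NLL of a valid target ("animal", "cat").
target = (1, 0, 1)
first_nll, _ = nll_and_grad(theta, target)
for _ in range(50):
    _, grad = nll_and_grad(theta, target)
    theta = [t - 0.5 * g for t, g in zip(theta, grad)]
final_nll, _ = nll_and_grad(theta, target)
print(f"NLL {first_nll:.3f} -> {final_nll:.3f}; prediction {predict(theta)}")
```

With these toy scores the constrained prediction is (1, 1, 0): "dog" comes with "animal", even though the raw "animal" score is negative. A practical layer has to represent the valid set compactly instead of listing it as `VALID`; that is exactly the part this sketch skips.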