Elias Dabbas :verified: · @elias

64 followers · 109 posts · Server seocommunity.social

The recording of yesterday's discussion is available: scaling your use of #ChatGPT using two techniques

1. Bulk prompts: creating prompt templates using rich structured data

2. Fine-tuning: creating a very specific functionality by training the model to do one particular task by learning from hundreds/thousands of examples. Example: an entity extraction app that also provides Wikipedia URLs of extracted entities.

#chatgpt #datascience #python #generativeAI #advertools #seo #llm

Elias Dabbas :verified: · @elias

Happy to announce a new cohort for my course:

Data Science with Python for SEO 🎉 🎉 🎉

🔵 For absolute beginners

🔵 Run, automate, and scale many SEO tasks with Python like crawling, analyzing XML sitemaps, text/keyword analysis

🔵 Intro to data manipulation and visualization skills

🔵 Get started with #advertools #pandas and #plotly

🔵 Make the transition from #Excel to #Python

🔵 Online, live, cohort-based, interactive

🔵 Spans three days in one week

#advertools #pandas #plotly #excel #python

Elias Dabbas :verified: · @elias

This week: Crawl with #advertools, scale with #ChatGPT

Two techniques to scale your prompts

1. Generating prompts on a large scale by creating prompt templates + structured data (e.g. creating many product descriptions)

2. Using fine-tuning to train ChatGPT to perform a highly specialized task, using hundreds/thousands of training examples. I'll share details on my entity extraction app.
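A minimal sketch of the first technique (bulk prompts), assuming a hypothetical template and made-up product records. The names `TEMPLATE`, `products`, and `build_prompts` are for illustration only and are not taken from the session:

```python
# Hypothetical template and product records, for illustration only.
TEMPLATE = (
    "Write a product description for {name}, a {category} "
    "priced at {price}. Highlight: {feature}."
)

products = [
    {"name": "Trail Runner X", "category": "running shoe",
     "price": "$120", "feature": "lightweight sole"},
    {"name": "Summit Pack 30", "category": "hiking backpack",
     "price": "$95", "feature": "waterproof fabric"},
]


def build_prompts(template, records):
    """Fill the template once per record and return the list of prompts."""
    return [template.format(**record) for record in records]


prompts = build_prompts(TEMPLATE, products)
for prompt in prompts:
    print(prompt)
```

Each row of structured data becomes one prompt, so the same template scales to as many rows as you have.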

Join us Thursday:

https://lnkd.in/d2uyr_6U

#advertools #chatgpt #datascience #DigitalMarketing #python #structureddata #seo

Elias Dabbas :verified: · @elias

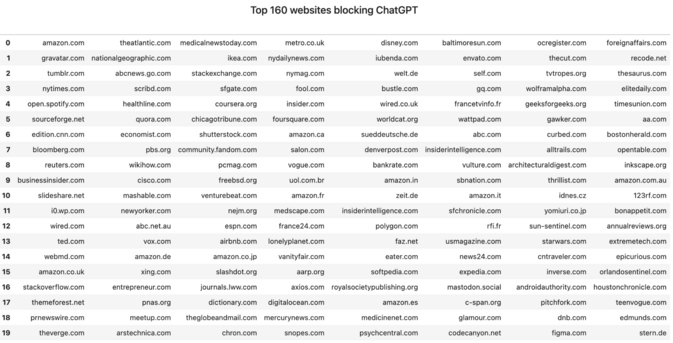

Who's blocking OpenAI's GPTBot?

🔵 Use the #advertools robotstxt_to_df function to fetch robots files in bulk (one, five, ten thousand...) in one go.

🔵 Run as many times as you want, for as many domains

🔵 Top domains list obtained from the Majestic (Majestic.com) Million dataset (thank you)

🔵 This was run for 10k domains (7.3k successful)

🔵 Get the code and data (and answer to the poll question):
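As a rough, offline illustration of the check itself (not the code behind the post), here is a sketch that uses Python's standard `urllib.robotparser` instead of advertools. The robots.txt texts and domain names are hypothetical, and only access to the root path is tested:

```python
from urllib.robotparser import RobotFileParser


def blocks_agent(robots_txt, user_agent="GPTBot"):
    """Return True if the robots.txt text disallows user_agent from fetching '/'."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


# Hypothetical robots.txt contents keyed by domain (not real data).
samples = {
    "blocker.example": "User-agent: GPTBot\nDisallow: /\n",
    "open.example": "User-agent: *\nDisallow: /private/\n",
}

blocked = {domain: blocks_agent(text) for domain, text in samples.items()}
print(blocked)
```

With real data, the robots.txt text would come from the fetched files rather than from hard-coded strings.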

#advertools #datascience #ai #generativeAI #chatgpt #seo #crawling

Elias Dabbas :verified: · @elias

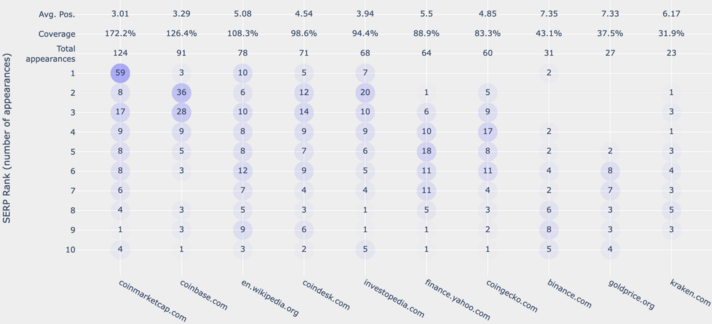

Analyzing SERPs on a large scale with #Python and #advertools

The recording is now available

🔵 Creating a large set of queries in an industry

🔵 Creating query variants

🔵 Running the requests in bulk

🔵 Running the requests across various dimensions (country, language, etc)

🔵 Visualizing the results with a heatmap
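The first steps above can be sketched with the standard library. The seed terms, modifiers, and the `country`/`language` keys are hypothetical; the actual SERP requests need API credentials and are left out:

```python
from itertools import product

# Hypothetical seed terms, modifiers, and dimensions.
seed_terms = ["running shoes", "hiking boots"]
modifiers = ["best", "cheap", "buy"]
countries = ["us", "gb"]
languages = ["en"]

# Query variants: every modifier combined with every seed term.
queries = [f"{m} {term}" for m, term in product(modifiers, seed_terms)]

# One request per query per dimension combination.
requests = [
    {"q": q, "country": country, "language": language}
    for q, country, language in product(queries, countries, languages)
]

print(len(queries), "queries,", len(requests), "requests")
```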

#python #advertools #datascience #seo #datavisualization

Elias Dabbas :verified: · @elias

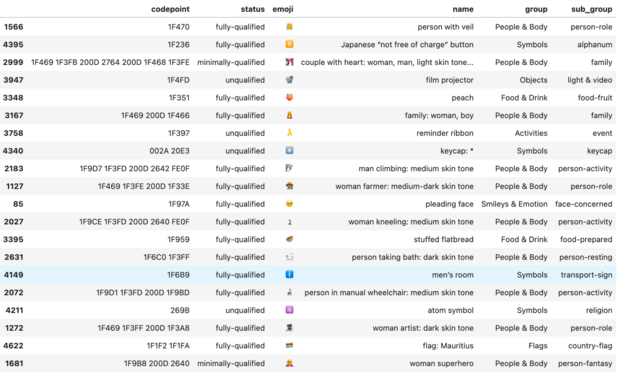

What's the longest regular expression that I wrote?

140,820 characters (one hundred and forty thousand)

It's a regex for finding emojis (any of them).

Here's how to create it, with general explanations of regex:
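As a rough illustration (not the author's actual code), one common way to build such a pattern is to join every known emoji into one alternation, longest first. The three-item `emoji_list` is a hypothetical sample; the full Unicode emoji list is what makes the real pattern so long:

```python
import re

# Tiny hypothetical sample; a full pattern would be built from the complete
# Unicode emoji list.
emoji_list = [
    "\U0001F44D",            # thumbs up
    "\U0001F44D\U0001F3FD",  # thumbs up with a skin-tone modifier
    "\U0001F600",            # grinning face
]

# Longest first, so multi-codepoint emoji win over their shorter prefixes.
alternatives = sorted(emoji_list, key=len, reverse=True)
emoji_regex = re.compile("|".join(re.escape(e) for e in alternatives))

text = "Nice \U0001F44D\U0001F3FD work \U0001F600 \U0001F44D"
found = emoji_regex.findall(text)
print(found)
```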

We'll discuss more text processing and analysis techniques in the #advertools office hours tomorrow if you'd like to join.

#advertools #datascience #python

Elias Dabbas :verified: · @elias

Log file analysis

🔵 Parse file fields IP, datetime, request, method, status, size, referer, user-agent

🔵 Compress to parquet

🔵 Bulk reverse DNS lookup for IPs

🔵 Split request & referer URLs into their components

🔵 Parse user-agents into their components (OS, version, device name, etc)

🔵 7-8 fields become hundreds of columns

🔵 Generate any report, ask any question about any combination of those elements

Example

https://bit.ly/3qnfLr5
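A minimal standard-library sketch of the first and fourth steps (parsing fields, then splitting the request URL), assuming the common "combined" access log format. The log line and the `parse_line` helper are hypothetical and are not the advertools API:

```python
import re
from urllib.parse import urlsplit

# Assumes the common "combined" access log format; adjust for other formats.
LOG_PATTERN = re.compile(
    r'(?P<client>\S+) \S+ \S+ \[(?P<datetime>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<request>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict of log fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Split the request URL into components, adding more columns.
    parts = urlsplit(fields["request"])
    fields["request_path"] = parts.path
    fields["request_query"] = parts.query
    return fields


# Hypothetical log line for illustration.
sample = (
    '192.0.2.10 - - [12/Jul/2023:10:15:32 +0000] '
    '"GET /blog/post-1?utm_source=news HTTP/1.1" 200 5123 '
    '"https://example.com/" "Mozilla/5.0 (compatible; ExampleBot/1.0)"'
)
record = parse_line(sample)
print(record)
```

Every extra split (referer parts, user-agent parts, query parameters) adds columns, which is how a handful of fields can grow into hundreds.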

#advertools #seo #datascience #digitalanalytics #python

Elias Dabbas :verified: · @elias

1/2

Happy to announce my course:

Data Science with Python for SEO 🎉 🎉 🎉

🔵 For absolute beginners

🔵 Make a leap in your data skills

🔵 Run, automate, and scale many SEO tasks with Python like crawling, analyzing XML sitemaps, text/keyword analysis

🔵 In depth intro to data manipulation and visualization skills

🔵 Get started with #advertools #pandas and #plotly

🔵 Make the transition from Excel to Python

🔵 Online, live, cohort-based, interactive

Elias Dabbas :verified: · @elias

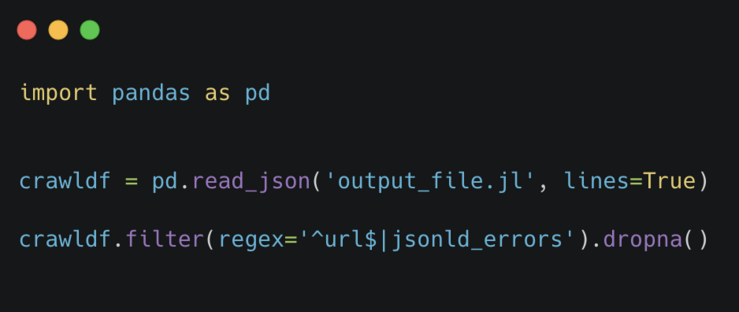

🕷🕸🕷🕸🕷🕸🕷

#JSON-LD errors on webpages:

#advertools reports those errors in the "jsonld_errors" column, and provides detailed error messages. For example:

Expecting ',' delimiter: line 11 column 437 (char 665)

Invalid control character at: line 27 column 450 (char 1728)

Invalid \\escape: line 27 column 466 (char 2096)

Simply filter for the columns "url" and "jsonld_errors" to get them.
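The messages above have the same shape as the ones raised by Python's built-in `json` module. Here is a small sketch of how such a message arises, using hypothetical JSON-LD snippets. This illustrates the error format only and makes no claim about how advertools works internally:

```python
import json

# Hypothetical JSON-LD snippets; the second is missing a comma.
snippets = {
    "https://example.com/ok": '{"@type": "Article", "name": "Hello"}',
    "https://example.com/broken": '{"@type": "Article" "name": "Hello"}',
}

errors = {}
for url, raw in snippets.items():
    try:
        json.loads(raw)
    except json.JSONDecodeError as exc:
        # str(exc) includes the message plus line, column, and character position.
        errors[url] = str(exc)

print(errors)
```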

python3 -m pip install advertools

#json #advertools #datascience #seo #DigitalMarketing #crawling

Elias Dabbas :verified: · @elias

🕸🕷🕸🕷🕸🕷🕸

#advertools +

@JupyterNaas

= Cloud #SEO #Crawler

🔵 Low code

🔵 Save crawl templates to re-run multiple times

🔵 Create a separate template for each website

🔵 Run multiple crawls at the same time

🔵 Enjoy!

#advertools #seo #crawler #datascience #python #DigitalMarketing #digitalanalytics

Elias Dabbas :verified: · @elias

🕷🕸🕷🕸🕷

My website has ten pages:

Title tag lengths: [10, 10, 10, 10, 10, 130, 130, 130, 130, 130]

Average title length: 70 characters

Good, right?

Wrong.

🔵 Show length distributions

🔵 Show counts per bin [0, 10], [11, 20], etc...

🔵 Interactive, downloadable, emailable, HTML chart

🔵 Show shortest/longest desired lengths with vertical guides

🔵 Hover to see URL and title
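A quick sketch of why the average misleads here, using the ten title lengths from the post. The `bin_label` helper is hypothetical and follows the [0, 10], [11, 20] binning mentioned above:

```python
from collections import Counter
from statistics import mean

title_lengths = [10, 10, 10, 10, 10, 130, 130, 130, 130, 130]


def bin_label(length, width=10):
    """Map a length to a bin label like '0-10', '11-20', ..."""
    index = max(length - 1, 0) // width
    low = 0 if index == 0 else index * width + 1
    return f"{low}-{(index + 1) * width}"


counts = Counter(bin_label(n) for n in title_lengths)
print("average:", mean(title_lengths))
print("counts per bin:", dict(counts))
```

The average is 70, yet no title is anywhere near 70 characters: half sit in the shortest bin and half in the 121-130 bin.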

Suggestions?

#DataScience #advertools #SEO #DigitalMarketing #DigitalAnalytics #DataVisualization


Elias Dabbas :verified: · @elias

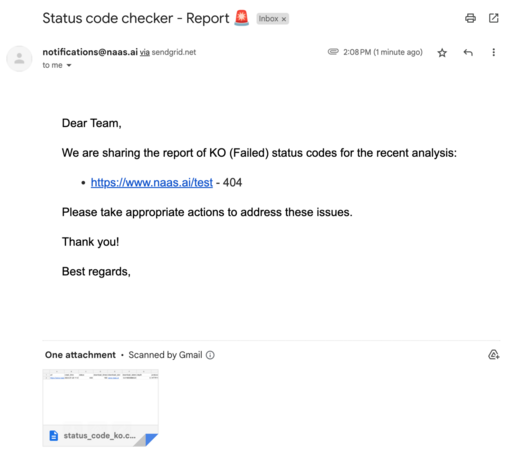

#advertools + Naas.ai = Automated bulk status code checker & email notifier

🔵 Runs in bulk fast & light

🔵 Runs on Naas.ai (zero setup)

🔵 Low code: start with the notebook we created, configure URLs, email notification settings, how often to run the checker, where to get URLs from, etc.

🔵 Get response headers

🔵 Improve: report bugs, issues, suggest changes

Use notebook: Advertools_Check_status_code_and_Send_notifications

#advertools #datascience #seo #automation #python

Elias Dabbas :verified: · @elias

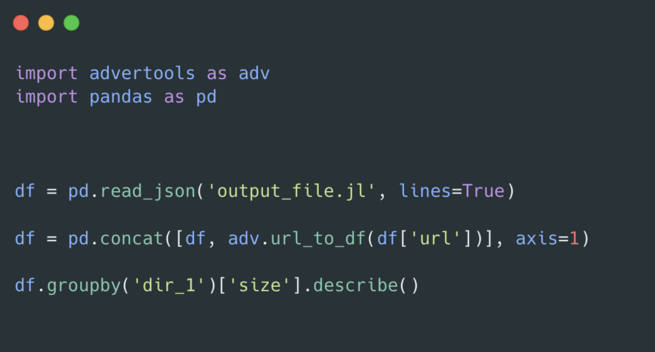

Q: How many lines of code does it take to analyze segments of a website by any available metric?

A: 3

1. Open the crawl file

2. Split URLs into segments (path, dir_1, dir_2, ..)

3. Summarize segments by any metric (page size, latency, etc.)
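To show the idea behind steps 2 and 3 without any dependencies, here is a standard-library stand-in. It is longer than the three pandas/advertools lines the post refers to, and the crawl rows and the `first_dir` helper are hypothetical:

```python
from collections import defaultdict
from statistics import mean
from urllib.parse import urlsplit

# Hypothetical crawl rows: URL plus one metric (page size in bytes).
crawl = [
    {"url": "https://example.com/blog/post-1", "size": 40_000},
    {"url": "https://example.com/blog/post-2", "size": 60_000},
    {"url": "https://example.com/shop/shoes/item-1", "size": 120_000},
]


def first_dir(url):
    """Return the first path segment of a URL ('' for the home page)."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    return segments[0] if segments else ""


# Group the metric by segment, then summarize each group.
sizes_by_segment = defaultdict(list)
for row in crawl:
    sizes_by_segment[first_dir(row["url"])].append(row["size"])

summary = {seg: mean(sizes) for seg, sizes in sizes_by_segment.items()}
print(summary)
```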

Code and more examples here:

#advertools #pandas #datascience #crawling

Elias Dabbas :verified: · @elias

#advertools office hours - 3

Thursday, same time, same link:

Using the parquet file format to

1. Reduce the size of crawl files

2. Speed up the analysis process

Join if you're interested:

https://bit.ly/adv-office-hours

#DataScience #SEO #DigitalMarketing #DigitalAnalytics #Python


Elias Dabbas :verified: · @elias

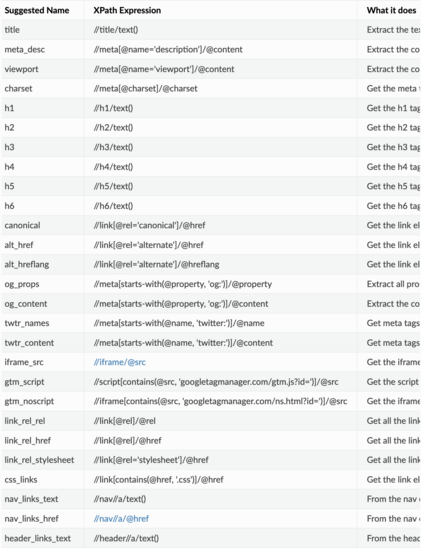

🕸️🕷️🕸️🕷️🕸️🕷️🕸️

Here is a list of custom extraction XPath selectors to take your crawling to the next level.

This can be expanded to include other extractors and/or ones for popular sites/CMSes

Amazon, WP, Shopify etc.

If you have a favorite list that you would like to contribute or create please let me know.

#datascience #advertools #seo #crawling #python

Elias Dabbas :verified: · @elias

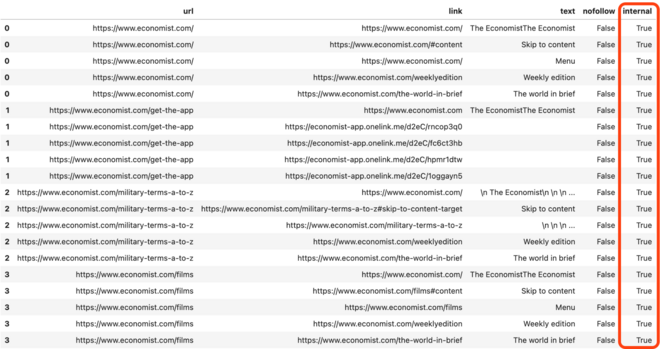

🕸️🕷️🕸️🕷️🕸️🕷️🕸️

Analyzing links of a crawled website begins with organizing them in a "tidy" (long form) DataFrame, allowing you to:

🔵 Get link URL, anchor text, & nofollow tag

🔵 Split internal/external links to easily get inlinks & outlinks

🔵 Run network analysis on internal links (pagerank, betweenness centrality, etc)

🔵 Analyze anchor text

This function takes the links from an #advertools crawl DataFrame and organizes them for easier analysis
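A plain-Python sketch of the reshaping idea, not the function from the post. It assumes each page's links sit in one cell joined by "@@", in parallel columns for URL, anchor text, and nofollow; the rows and the `tidy_links` helper are hypothetical:

```python
from urllib.parse import urlsplit

# Hypothetical crawl rows. Assumption: each page's links are stored in one
# cell, joined by "@@", in parallel columns for URL, anchor text, and nofollow.
crawl = [
    {
        "url": "https://example.com/",
        "links_url": "https://example.com/about@@https://other.example/page",
        "links_text": "About us@@Partner",
        "links_nofollow": "False@@True",
    },
]


def tidy_links(rows, sep="@@"):
    """Return one dict per link, flagged as internal or external."""
    tidy = []
    for row in rows:
        source_host = urlsplit(row["url"]).netloc
        columns = zip(
            row["links_url"].split(sep),
            row["links_text"].split(sep),
            row["links_nofollow"].split(sep),
        )
        for link, text, nofollow in columns:
            tidy.append({
                "source": row["url"],
                "link": link,
                "text": text,
                "nofollow": nofollow == "True",
                "internal": urlsplit(link).netloc == source_host,
            })
    return tidy


links = tidy_links(crawl)
for link in links:
    print(link)
```

With one row per link, the (source, link) pairs can be fed straight into a network analysis as edges.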

#advertools #datascience #seo #python

Elias Dabbas :verified: · @elias

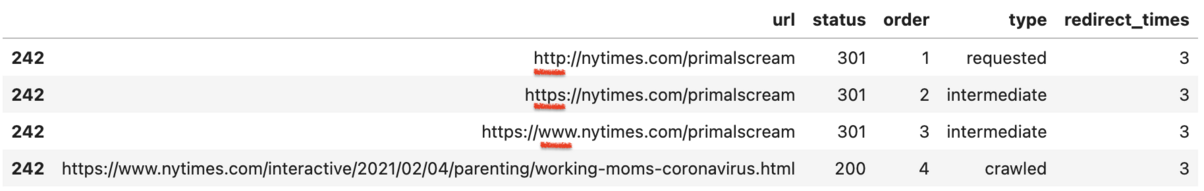

#advertools office hours - episode 2

Today at 14:00 GMT

We'll discuss redirects, and how to get and analyze them.

Join here if you're interested:

#advertools #datascience #seo #digitalanalytics #python

Elias Dabbas :verified: · @elias

#advertools office hours - 2

Same time (Thursday), same link. Sign up here if you haven't

https://bit.ly/adv-office-hours

A better way to analyze redirects on a website

with full redirect chains, status codes & the logic behind them.

(nudged by Nitin Manchanda)
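To make "full redirect chains" concrete, here is a small sketch that rebuilds a chain from already-collected responses. The `responses` mapping and the `redirect_chain` helper are hypothetical and are not the approach covered in the session:

```python
# Hypothetical responses: URL -> (status code, redirect target or None).
responses = {
    "http://example.com/old": (301, "https://example.com/old"),
    "https://example.com/old": (302, "https://example.com/new"),
    "https://example.com/new": (200, None),
}


def redirect_chain(start, responses, max_hops=10):
    """Follow redirects from start and return [(url, status), ...]."""
    chain = []
    seen = set()
    url = start
    # Stop at the final response, on a loop, or after max_hops.
    while url is not None and url not in seen and len(chain) < max_hops:
        seen.add(url)
        status, target = responses.get(url, (None, None))
        chain.append((url, status))
        url = target
    return chain


chain = redirect_chain("http://example.com/old", responses)
print(chain)
```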

#advertools #datascience #seo #python

Elias Dabbas :verified: · @elias

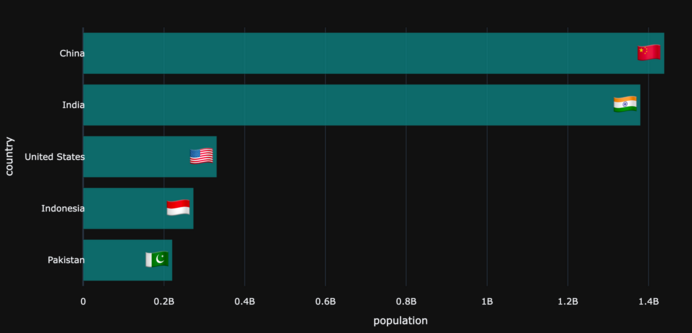

Country flags can make your charts/reports easier to read, & can give more space vs full country names.

Just released a simple new #adviz function flag() which converts a 2- or 3-letter country code or country name to its respective flag

python3 -m pip install --upgrade adviz
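For the curious, flag emoji are pairs of Unicode regional indicator symbols, so the 2-letter case can be sketched in a few lines. The `code_to_flag` helper is hypothetical, handles only 2-letter codes, and is not the adviz implementation:

```python
def code_to_flag(code):
    """Convert a 2-letter country code (e.g. 'US') to its flag emoji."""
    code = code.upper()
    if len(code) != 2 or not code.isascii() or not code.isalpha():
        raise ValueError(f"expected a 2-letter country code, got {code!r}")
    # Regional indicator symbols start at U+1F1E6 for the letter A.
    return "".join(chr(0x1F1E6 + ord(ch) - ord("A")) for ch in code)


print(code_to_flag("us"), code_to_flag("FR"))
```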

#adviz #advertools #datascience #datavisualization #python #plotly

Elias Dabbas :verified: · @elias

Happy to announce

#advertools office hours

Free

Live coding (you'll also code, make charts, analyze data)

For beginners (advanced users more than welcome)

No recording

1st episode - Crawling: July 6th

#advertools #datascience #seo #python