Mortal Corley · @corley

0 followers · 39 posts · Server social.tchncs.de

#IBM is working on energy-efficient analog #AIMC (analogue in-memory computing) chips that could be used for #machinelearning in the future and consume far less energy than comparable #GPUs.

#ai #aiinference #energyconsumption #ki

https://www.theregister.com/2023/08/14/ibm_describes_analog_ai_chip/

Norobiik · @Norobiik

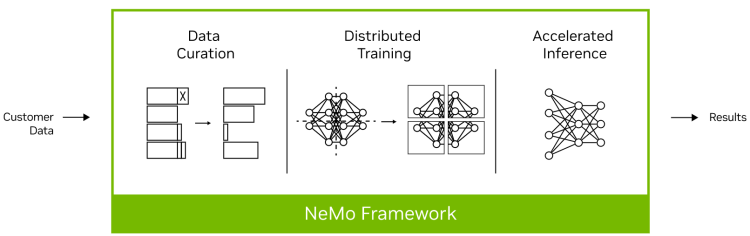

247 followers · 3924 posts · Server noc.social

#Quantiphi is working with #NeMo to build a modular generative AI solution to improve worker productivity. Nvidia also announced four inference GPUs, optimized for a diverse range of emerging LLM and generative AI applications. Each GPU is designed to be optimized for specific #AIInference workloads while also featuring specialized software.

#SpeechAI, #supercomputing in the #cloud, and #GPUs for #LLMs and #GenerativeAI among #Nvidia’s next big moves | #AI

https://venturebeat.com/ai/speech-ai-supercomputing-cloud-gpus-llms-generative-ai-nvidia-next-big-moves/
