Miguel Afonso Caetano · @remixtures

388 followers · 1281 posts · Server tldr.nettime.org

#EU #Netherlands #Algorithms #AlgorithmicDiscrimination: "New, leaked documents obtained by Lighthouse Reports and NRC reveal that at the same time Hoekstra was promising change, officials were sounding the alarm over a secretive algorithm that ethnically profiles visa applicants. They show the agency’s own data protection officer — the person tasked with ensuring its use of data is legal — warning of potential ethnic discrimination. Despite these warnings, the ministry has continued to use the system.

Unknown to the public, the Ministry of Foreign Affairs has been using a profiling system to calculate the risk score of short-stay visa applicants applying to enter the Netherlands and Schengen area since 2015.

An investigation by Lighthouse and NRC reveals that the ministry’s algorithm, referred to internally as Informatie Ondersteund Beslissen (IOB), has profiled millions of visa applicants using variables like nationality, gender and age. Applicants scored as ‘high risk’ are automatically moved to an “intensive track” that can involve extensive investigation and delay."

https://www.lighthousereports.com/investigation/ethnic-profiling/

sorelle · @sorelle

909 followers · 55 posts · Server mastodon.social

#AlgorithmicDiscrimination "when automated systems contribute to unjustified different treatment or impacts disfavoring people based on their actual or perceived race, color, ethnicity, sex (including based on pregnancy, childbirth, and related conditions; gender identity; intersex status; and sexual orientation), religion, age, national origin, limited English proficiency, disability, veteran status, genetic information, or any other classification protected by law."

Miguel Afonso Caetano · @remixtures

209 followers · 277 posts · Server tldr.nettime.org

#Algorithms #AlgorithmicDiscrimination #Labor #GigEconomy: "Recent technological developments related to the extraction and processing of data have given rise to widespread concerns about a reduction of privacy in the workplace. For a growing number of low-income and subordinated racial minority work forces in the United States, however, on-the-job data collection and algorithmic decision-making systems are having a much more profound yet overlooked impact: these technologies are fundamentally altering the experience of labor and undermining the possibility of economic stability and mobility through work. Drawing on a multi-year, first-of-its-kind ethnographic study of organizing on-demand workers, this Article examines the historical rupture in wage calculation, coordination, and distribution arising from the logic of informational capitalism: the use of granular data to produce unpredictable, variable, and personalized hourly pay. Rooted in worker on-the-job experiences, I construct a novel framework to understand the ascent of digitalized variable pay practices, or the transferal of price discrimination from the consumer to the labor context, what I identify as algorithmic wage discrimination.

Across firms, the opaque practices that constitute algorithmic wage discrimination raise central questions about the changing nature of work and its regulation under informational capitalism. Most centrally, what makes payment for labor in platform work fair? How does algorithmic wage discrimination change and affect the experience of work? And, considering these questions, how should the law intervene in this moment of rupture?"

Michele Dusi · @thoozee

4 followers · 4 posts · Server mastodon.uno

Unsolicited evening recommendation: here is an article from #IlPost on the algorithms that govern the main #fooddelivery apps, on the work ethic they "implement", and on their not-so-happy legal history.

It covers personal scores, the right to strike, and algorithms that pay people.

#rider #algorithmicdiscrimination #aiethics #fooddelivery #IlPost

Michele Dusi · @thoozee

3 followers · 7 posts · Server mastodon.uno

Hi! #introduction

I'm a Ph.D. student in #artificialintelligence, based in northern Italy. My research focuses on #AlgorithmicDiscrimination, that is, when evil computers do evil things to humans.

Think of it like algorithms excluding minorities, more than mecha-Hitler destroying cities. (Yep, the name sounds better, but the content is pretty good too).

I'm interested in #sciencecommunication, especially on the #AI and #NLP sides. We'll see if I can make something good out of this profile 🙃

uRi · @usabach

144 followers · 206 posts · Server tooot.im

The police are using some kind of AI to guess whom to search at Ben Gurion Airport?

I'm not sure what I think about this "overall", but clearly a bit more transparency is needed here: how many searches, how many of them are justified, and so on.

The police algorithm that will stop you when you land at Ben Gurion Airport | Calcalist Magazine

https://newmedia.calcalist.co.il/magazine-10-11-22/m02.html

#פרטיות #צדקאלגוריתמי #AlgorithmicJustice #algorithmicdiscrimination

fcr · @fcr

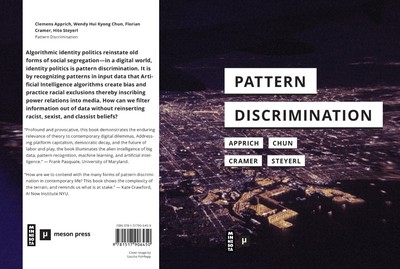

108 followers · 100 posts · Server post.lurk.org

forthcoming:

Clemens Apprich, Wendy Hui Kyong Chun, Florian Cramer, Hito Steyerl

Pattern Discrimination

University of Minnesota Press / Meson Press (Open Access)

Algorithmic identity politics reinstate old forms of social segregation — in a digital world, identity politics is pattern discrimination. It is by recognizing patterns in input data that Artificial Intelligence algorithms create bias and practice racial exclusions thereby inscribing power relations into media. How can we filter information out of data without reinserting racist, sexist, and classist beliefs?

ISBN 978-1-51790-645-0

#mediastudies #newmedia #algorithms #bigdata #discrimination #algorithmicdiscrimination #analytics #ai #racism
