Jitse Niesen · @jitseniesen

65 followers · 140 posts · Server mathstodon.xyz

This chocolate reminded me of Bernstein ellipses, which govern how fast the Chebyshev approximation converges to a function.

#approximationtheory #occupationalhazard #mathsiseverywhere
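
A rough numerical sketch of that connection: when a function is analytic inside the Bernstein ellipse with parameter ρ (foci at ±1, semi-axes summing to ρ), its Chebyshev interpolants converge roughly like ρ^(-n). The test function 1/(1 + 25x²), the degrees 20 and 60, and the sampling grid are illustrative assumptions, not something from the post.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def runge(x):
    # Illustrative test function with poles at x = +/- i/5.
    return 1.0 / (1.0 + 25.0 * x**2)


# The Bernstein ellipse through the point i*b has parameter b + sqrt(1 + b^2).
b = 0.2
rho = b + np.sqrt(1.0 + b**2)

grid = np.linspace(-1.0, 1.0, 2001)


def max_error(deg):
    # Chebyshev interpolant of the given degree (first-kind Chebyshev points).
    coeffs = C.chebinterpolate(runge, deg)
    return float(np.max(np.abs(C.chebval(grid, coeffs) - runge(grid))))


err_lo, err_hi = max_error(20), max_error(60)

# If the error behaves like rho**(-n), this ratio recovers rho approximately.
observed_rate = (err_lo / err_hi) ** (1.0 / 40.0)
print("predicted rho:", rho)
print("observed rate:", observed_rate)
```

The observed rate should land close to the predicted ρ; moving the poles closer to the interval shrinks the ellipse and slows convergence.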

Jared Davis · @jared

177 followers · 112 posts · Server mathstodon.xyz

I’m pretty sure I bombed my latest assignment for #OpenUniversity M832. Orthogonal polynomials, Gaussian quadrature, both types of Chebyshev polynomials, deriving Fourier transforms, using Parseval’s theorem. I knew what I needed, but wow, was it hard for me to write down solutions.

I’ll file these away for revision and keep moving forward with the next round!

#approximationtheory #openuniversity

Jitse Niesen · @jitseniesen

58 followers · 108 posts · Server mathstodon.xyz

My thoughts keep turning back to the OWNA (One World Numerical Analysis) talk of Daan Huybrechs a few weeks ago. Most of numerical analysis is built on approximating functions in finite-dimensional spaces:

\[ f(x) \approx \sum_i a_i \varphi_i(x), \]

where 𝑓 is the function we want to approximate and φᵢ are easy functions like polynomials. In the standard setting, the φᵢ form a basis. The talk explained why you sometimes want to add some more "basis" functions, which destroys the linear independence of the φᵢ so that they are no longer a basis. The main topic was the theory behind this.

As motivation, consider the square root function on [0, 1]. This is not analytic at x=0 and approximation by polynomials does not converge fast. However, you can get fast convergence (root exponential IIRC) if you use rational functions. More generally, the solution of Laplace's equation on a domain with re-entrant corners has singularities at the corners. The lightning method uses an overcomplete "basis" of polynomials and rational functions, which converges fast.

It's one of those talks that I wish I understood fully, but it would take me a month or more of sustained effort to do so. Hopefully I will find an excuse to immerse myself in the topic.

#numericalanalysis #approximationtheory
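
A small sketch of the slow polynomial convergence for the square root on [0, 1]. Chebyshev interpolation is used here as a stand-in for "approximation by polynomials", and the degrees and grid are arbitrary choices; the rational and lightning approximations from the talk are not reproduced.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def sqrt_on_unit_interval(t):
    # Map t in [-1, 1] to x = (t + 1) / 2 in [0, 1], then take the square root.
    return np.sqrt((t + 1.0) / 2.0)


grid = np.linspace(-1.0, 1.0, 4001)


def max_error(deg):
    # Chebyshev interpolant of the given degree, compared with sqrt on the grid.
    coeffs = C.chebinterpolate(sqrt_on_unit_interval, deg)
    approx = C.chebval(grid, coeffs)
    return float(np.max(np.abs(approx - sqrt_on_unit_interval(grid))))


errors = {deg: max_error(deg) for deg in (16, 32, 64, 128)}
for deg, err in errors.items():
    print(deg, err)
```

Doubling the degree only about halves the error, which is algebraic rather than exponential convergence, because of the singularity at x = 0.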

Jared Davis · @jared

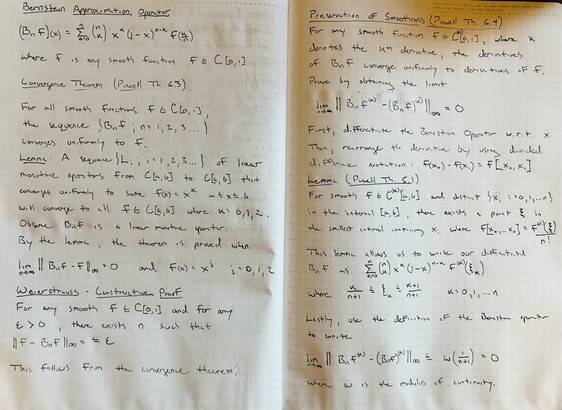

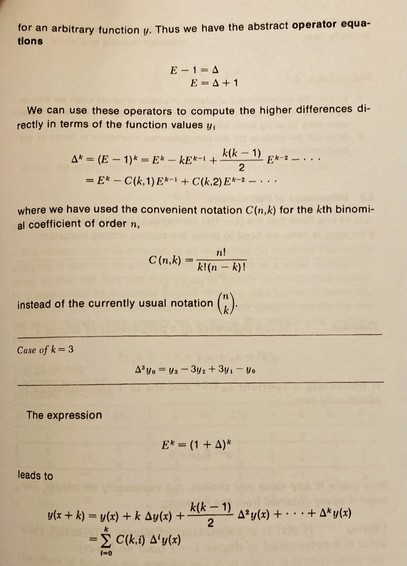

156 followers · 289 posts · Server mathstodon.xyz

The answer is a definite “yes.” Hamming named his E “the shift operator”. If we read Powell’s proofs of convergence (Th. 6.3) and smoothness (Th. 6.4) carefully, and follow the smoothness proof to higher derivatives, we see that the use of binomials (clearly emphasized in Hamming) involves rather tedious shifting of summation indices.

Hamming, obviously the engineer, elides the extra work as part of a subroutine!

#approximationtheory #numericalanalysis
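
One way to check the connection numerically, as a sketch rather than a confirmation of either book's notation. The Bernstein operator is B_n f(x) = Σ C(n,k) x^k (1−x)^(n−k) f(k/n), and it can also be written as ((1−x) + xE)^n applied to f at 0, assuming E shifts the argument by 1/n. That reading of E is an assumption here, and the function names are hypothetical.

```python
from math import comb


def bernstein_sum(f, n, x):
    # Direct binomial form of the Bernstein operator.
    return sum(comb(n, k) * x**k * (1 - x) ** (n - k) * f(k / n)
               for k in range(n + 1))


def bernstein_shift(f, n, x):
    # Operator form: apply ((1 - x) + x E) n times to the samples f(k / n),
    # where E moves one step along the samples (assumed meaning of E).
    values = [f(k / n) for k in range(n + 1)]
    for _ in range(n):
        values = [(1 - x) * a + x * b for a, b in zip(values, values[1:])]
    return values[0]


def cubic(t):
    return t**3 - t


print(bernstein_sum(cubic, 12, 0.37))
print(bernstein_shift(cubic, 12, 0.37))
```

The two forms agree up to rounding because expanding the operator power with the binomial theorem gives the sum term by term; the repeated averaging is the same recurrence as de Casteljau's algorithm.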

Jared Davis · @jared

151 followers · 248 posts · Server mathstodon.xyz

it seems to me that the operator equation given by Hamming (1989) Ch6 Sec7 is closely related to, if not the same as, the Bernstein operator shown in Powell (1981) Eq 6.23

Can someone familiar with #ApproximationTheory or #NumericalAnalysis confirm?

Dover did a nice job reprinting Hamming’s book, but I wish they preserved or appended a citation listing. There’s no bibliography or reference index at all!

#numericalanalysis #approximationtheory

Jared Davis · @jared

125 followers · 210 posts · Server mathstodon.xyz

Let p* be a trial approximation from a finite dimensional linear space A to an arbitrary function f. How do we change p* to reduce the maximum error from the “true” f?

Curious how others answer this, so adding a few tags to get a sort of survey

#machinelearning #datascience #approximationtheory
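
One possible answer, sketched on a toy case: look at the points where the error f − p* reaches its maximum size, and find a direction p in A that has the same sign as the error at all of those points. A small step p* + θp then lowers the maximum error. The function f(x) = x², the trial p* = 0, the direction p = 1, and the grid are all illustrative assumptions.

```python
def max_error(f, p, grid):
    # Largest absolute error of p as an approximation to f on the grid.
    return max(abs(f(x) - p(x)) for x in grid)


grid = [-1 + 2 * i / 1000 for i in range(1001)]


def f(x):
    return x * x


def p_star(x):
    # Trial approximation: the error x^2 peaks at x = -1 and x = 1.
    return 0.0


def direction(x):
    # Positive where the error is largest, so it has the same sign there.
    return 1.0


def shifted(theta):
    return lambda x: p_star(x) + theta * direction(x)


errors = {theta: max_error(f, shifted(theta), grid)
          for theta in (0.0, 0.25, 0.5, 0.75)}
for theta, err in errors.items():
    print(theta, err)
```

The maximum error drops as θ grows from 0, bottoms out at θ = 0.5 where the error has equal size and alternating sign at x = −1, 0, 1, and rises again after that.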

Jared Davis · @jared

118 followers · 181 posts · Server mathstodon.xyz

Bernstein Proves the Weierstrass Approximation Theorem | Ex Libris

Jared Davis · @jared

109 followers · 187 posts · Server mathstodon.xyz

here's the proof that engendered my complaint. Powell uses Bolzano-Weierstrass to prove a fixed point theorem for a sequence of operators. This gives support for an earlier theorem establishing a boundary condition on least errors for approximating functions. #ApproximationTheory

Jared Davis · @jared

104 followers · 177 posts · Server mathstodon.xyz

#ApproximationTheory and #NumericalMethods constitute the arts and sciences of acquiring "close enough" calculations for computationally intractable functions.

Let's unpack this

"computationally intractable" simply means it's either inefficient or impossible to obtain an exact value for a function - either mechanically or in general.

Take root two as an example. In some sense, root two is exact. But a mechanical representation is not possible.

#numericalmethods #approximationtheory
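
A small sketch of what "close enough" looks like for root two, using Newton's iteration x → (x + 2/x)/2 in exact rational arithmetic. The starting value 1 and the number of steps are arbitrary choices, and the function name is hypothetical.

```python
from fractions import Fraction


def newton_sqrt2(steps):
    # Newton's iteration for x^2 = 2, carried out with exact fractions.
    x = Fraction(1)
    iterates = [x]
    for _ in range(steps):
        x = (x + 2 / x) / 2
        iterates.append(x)
    return iterates


iterates = newton_sqrt2(4)
for x in iterates:
    # Each fraction is exact, yet its square never equals 2.
    print(x, float(x * x - 2))
```

Every iterate is a fraction a machine can store exactly, and the gap x² − 2 shrinks quickly, but it never reaches zero because root two is irrational.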

Jared Davis · @jared

104 followers · 173 posts · Server mathstodon.xyz

For the past few weeks I've been studying #ApproximationTheory through #OpenUniversity -- an excellent and affordable distance learning institution for English speakers. I've written about this topic before (see https://mathstodon.xyz/@jared/109313785067280336), and I'd like to continue my slow approach to understanding by sharing my personal learning experience here.

So as not to take up too much space on folks' timelines, I'm going to update this thread with an "Approximation Theory" CW. But fear not, this is fun stuff!

#openuniversity #approximationtheory