Samantha Davis · @sndavis

22 followers · 25 posts · Server fediscience.org

channeling my inner Wicked Witch of the East at the #ARO2023 Vestibular Periphery session

Jonathan Peelle · @jpeelle

1187 followers · 444 posts · Server neuromatch.social

A colleague just brought me a mug of coffee in the middle of a talk in the session I am co-chairing and I have never felt fancier.

Jonathan Peelle · @jpeelle

1187 followers · 442 posts · Server neuromatch.social

Starting now in Crystal DE: Non-sensory processes in speech perception. Thread of talks below.

· @abbynoyce

54 followers · 25 posts · Server fediscience.org

Heading home from #ARO2023! I didn’t spend a ton of time at the conference this weekend (dealing with some family stuff has taken a bunch of time), but it was so good to see old friends, meet new friends, stay up too late kibitzing, and think about a bunch of science.

Face to face conferences are expensive (in $$, time, emissions), but they are also uniquely valuable and I am so grateful I get to do this.

SoundBrain Lab · @soundbrainlab

33 followers · 18 posts · Server mastodon.social

Doctoral candidate Jacie McHaney will be giving a talk today at @AROMWM on factors underlying self-perceived listening difficulties in adults with normal hearing in Session #30 at 2:00pm! #ARO2023 #auditory #neuroscience #AuditoryNeuroscience


Ansley Kunnath · @ansleykunnath

2 followers · 1 posts · Server neuromatch.social

Samantha Davis · @sndavis

21 followers · 24 posts · Server fediscience.org

so happy to see all these hair cells at #ARO2023 after being in a neuroscience department for 18 months 🤩

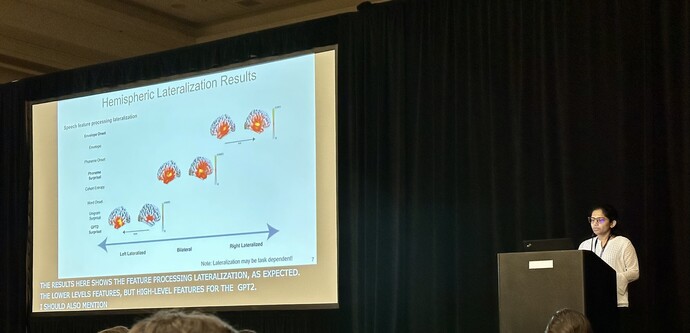

Jonathan Z Simon · @jzsimon

54 followers · 161 posts · Server mas.to

Senior lab member Dushyanthi Karunathilake totally nails her first ever slide presentation at an in-person international conference (Progression of Acoustic, Phonemic, Lexical and Sentential Neural Features Emerge for Different Speech Listening). Can’t wait for her poster presentation this afternoon (poster MO211).

#AROMWM #ARO2023

Jonathan Peelle · @jpeelle

1185 followers · 434 posts · Server neuromatch.social

Next Dushyanthi Karunathilake talking about hierarchical levels of speech representation (phonemes up through pragmatics). Results from MEG study with 1-minute passages (speech modulated noise, non-words, scrambled words, narrative).

Estimated multiple TRFs simultaneously (long list of features: surprisal, word onset, cohort entropy, phoneme onset, etc.).

Acoustic features are processed for all conditions, but linguistic features are encoded for speech. Lower-level acoustic features (e.g., envelope) are more right lateralized; languagey features (surprisal) are left lateralized. Phoneme surprisal and cohort entropy are bilateral.

For TRFs:

-acoustic envelope onset: speech > noise responses (doesn't distinguish between speech conditions). Envelope (overall, not onset) speech > noise again, but distinguishes scrambled vs narrative.

-cohort entropy shows interesting differences across conditions. (lots of results, I can't keep up)

Using these results to look at timing of top-down and bottom-up mechanisms 👍

Jonathan Peelle · @jpeelle

1185 followers · 434 posts · Server neuromatch.social

Up now: Kelsey Mankel. As part of a larger comprehensive study, did a spatial attention story listening task (+EEG and pupillometry). Visual cues told participants to listen to the left or right speaker.

Historical challenge getting ERPs from natural speech. Use chirped speech (aka "cheech") that can give standard ERPs (Backer et al., 2019 J Neurophysiology).

Cheech ERPs (cherps? <- my term) affected by attention (differ target vs distractor).

N400 responses to color words (targets) in the midst of this task.

Brain-behavior relationships also present, e.g., with subjective measurements of listening effort. Lots of other correlations that I wasn't able to capture fast enough.

Jonathan Peelle · @jpeelle

1185 followers · 434 posts · Server neuromatch.social

Now Gavin Bidelman on looking at brainstem activity during categorical perception using EEG.

Background: Auditory cortex shows categorical responses in ERPs.

In the past, used FFR to look at subcortical categorical responses. Prior work (Bidelman et al. 2013) suggested not.

Newer work: develop active task that can be used for studying FFR (most FFR studies are passive). Used perceptual warping and categorization task.

-2-3x enhancement of F0 in FFR during active relative to passive listening

-Category ends seem more strongly represented than midpoints during active perception

Conclusion: FFR more than "just" an acoustic perception, reflects attention and categorical representations

Jonathan Peelle · @jpeelle

1185 followers · 434 posts · Server neuromatch.social

Sara Carta now talking about phonetic features in attended and ignored speech.

They used a multi-speaker array to present background noise, target speech, and masker speech, collecting data using EEG. Expect phonetic features will be better represented in target than in masker based on listener attention.

This is what they found, ✅

Also, differential coding for phoneme onsets. (Accounting for "acoustic" information using spectrogram and half-wave spectrogram in the model.)

Jonathan Z Simon · @jzsimon

54 followers · 161 posts · Server mas.to

Jonathan Peelle · @jpeelle

1185 followers · 425 posts · Server neuromatch.social

First up: Jaime Hannah, presenting a meta-analysis of brain activity associated with effortful listening, and potential overlap with regions involved in other processes (e.g., inhibition, working memory).

They performed three ALE meta-analyses: effortful listening, inhibition, working memory.

Speech-in-noise: anterior insula, cingulate (aka cingulo-opercular network), STG;

Inhibition (Stroop incongruent>neutral): also overlaps cingulo-opercular

Working memory (n-back): also overlaps cingulo-opercular

Conclusion: suggestive of shared cognitive functions between speech-in-noise, working memory, and inhibition

(Side note: every time someone uses a figure from one of my papers I am humbled, thrilled, and self-conscious about my artistic skills!)

Jonathan Peelle · @jpeelle

1185 followers · 425 posts · Server neuromatch.social

Jonathan Peelle · @jpeelle

1186 followers · 421 posts · Server neuromatch.social

Happy Monday from #AROMWM! I am going to try to do a better job tooting from the conference today, in part because I was talking up Mastodon to a group of trainees yesterday. Will try my very best to keep the toots unlisted so as not to clog your feed (unless you follow me, in which case, you asked for it).

Jonathan Peelle · @jpeelle

1185 followers · 417 posts · Server neuromatch.social

At the spARO session on networking someone commented they were much more likely to have someone come up and comment on a current paper than something from social media.

So far I’ve had two unsolicited social media comments and one paper comment. I think I know where my influence lies. 😂

Jonathan Peelle · @jpeelle

1185 followers · 414 posts · Server neuromatch.social

Instructions: You can provide your presentation in either Powerpoint or Keynote format

Translation: We only accept presentations in Powerpoint

😂

· @abbynoyce

54 followers · 20 posts · Server fediscience.org

Samantha Davis · @sndavis

20 followers · 20 posts · Server fediscience.org

there’s nothing like the day 1 energy of #ARO2023. excited to see all the great science and the people behind it!

@AROMWM @spARO_news