Elod Pal Csirmaz 🏳️‍🌈 · @csirmaz

Server: mathstodon.xyz

A new type of #neuralnetworks and #AI

I've been thinking that #backpropagation-based neural networks will reach their peak (if they haven't already), and that it may be interesting to search for an alternative #machinelearning method. Here are some observations and ideas:

The two main modes of #neuralnetworks (training, when weights are adjusted, and prediction, when states are adjusted) should be merged. After all, real-life brains do prediction and learning at the same time, and they persist over the long term; they are not restarted for every task. Recent successes at one-shot tasks, where state changes effectively become learning, also point in this direction.
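The most familiar existing form of a single mode, where every input both yields a prediction and changes the weights, is plain online learning. This TypeScript sketch is not the proposal above, only a minimal illustration. It assumes a linear model trained by one SGD step per sample on squared error. The class `OnlineLinear` and its default learning rate are hypothetical choices for the example.

```typescript
// Online learning: a single mode in which every input is used both to
// predict and to update the weights (no separate training phase).
class OnlineLinear {
  weights: number[];
  bias: number;
  lr: number; // learning rate for the per-sample SGD step

  constructor(nInputs: number, lr = 0.1) {
    this.weights = new Array<number>(nInputs).fill(0);
    this.bias = 0;
    this.lr = lr;
  }

  // Plain forward pass: y = w . x + b.
  predict(x: number[]): number {
    let y = this.bias;
    for (let i = 0; i < x.length; i++) y += this.weights[i] * x[i];
    return y;
  }

  // Predict, then immediately take one gradient step on 0.5 * (y - target)^2.
  // Returns the prediction made before the update.
  step(x: number[], target: number): number {
    const y = this.predict(x);
    const err = y - target; // derivative of 0.5 * (y - target)^2 w.r.t. y
    for (let i = 0; i < x.length; i++) this.weights[i] -= this.lr * err * x[i];
    this.bias -= this.lr * err;
    return y;
  }
}
```

Given a stream of samples from, say, t = 2x + 1, the weight drifts toward 2 and the bias toward 1, and every call to `step` still returns a usable prediction along the way. Unlike the brain-like system described above, this toy model has no internal state besides its weights.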

It'd also be nice to free neural networks from being #feedforward, but we don't have any training methods for such freer networks. Genetic algorithms come to mind, but a suitable base structure would have to be found that supports gene exchange as well as some kind of recursion for repeated structures. Even then, it's unclear whether we'd get any results in a reasonable amount of time.

Another idea is to use the current, imperfect learning method (backpropagation) to develop a new one, just as a #programming language or a manufacturing machine can be used to make a new, better one. Here, #backprop would be used to learn a new learning method.
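A small, known instance of using gradients to tune part of a learning rule is hypergradient descent. It adapts the SGD step size α by differentiating the loss through the previous update: since θ_t = θ_{t-1} - α·g_{t-1}, we get ∂f(θ_t)/∂α = -g_t·g_{t-1}. This sketch learns only a single number, far short of learning a whole new method. It assumes a one-dimensional objective supplied as its gradient function, and `sgdHD` and its parameter names are hypothetical.

```typescript
// Hypergradient descent on a scalar parameter: plain SGD whose step size
// alpha is itself updated by gradient descent on the loss.
function sgdHD(
  grad: (x: number) => number, // gradient of the objective f
  x0: number,                  // initial parameter
  alpha0: number,              // initial step size
  beta: number,                // step size for updating alpha (the "meta" rate)
  steps: number,
): { x: number; alpha: number } {
  let x = x0;
  let alpha = alpha0;
  let prevGrad = 0; // no previous update before the first step
  for (let t = 0; t < steps; t++) {
    const g = grad(x);
    // x was produced by x_prev - alpha * prevGrad, so
    // d f(x) / d alpha = g * (-prevGrad); descend on alpha accordingly.
    alpha -= beta * (g * -prevGrad);
    x -= alpha * g;
    prevGrad = g;
  }
  return { x, alpha };
}
```

On f(x) = 0.5(x - 3)², started with a deliberately small step size, the consecutive gradients keep pointing the same way. The learned α therefore grows above its initial value while x converges to 3.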

I've been thinking about suitable playgrounds (sets of tasks) for these systems to operate in, and developed one earlier in #C. Recently I came across the #micromice competitions, and a #maze that a system needs to navigate, learning to get through it as fast as possible, may also be an interesting choice.
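A maze playground needs a reference score to compare a learner against. This sketch assumes a plain text grid, where '#' is a wall, '.' is open, 'S' is the start and 'G' is the goal. These are hypothetical conventions; real Micromouse mazes have thin walls between cells. The code computes the optimal number of moves by breadth-first flood fill from the goal. Micromouse solvers commonly use a flood fill of this kind, re-running it as walls are discovered.

```typescript
type Cell = [number, number];

// Breadth-first "flood fill" from the goal: dist[r][c] is the number of
// moves from (r, c) to the goal, or Infinity if unreachable.
function floodFill(maze: string[]): number[][] {
  const rows = maze.length;
  const cols = maze[0].length;
  const dist: number[][] = maze.map((row) => new Array<number>(row.length).fill(Infinity));
  const queue: Cell[] = [];
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < cols; c++) {
      if (maze[r][c] === "G") {
        dist[r][c] = 0;
        queue.push([r, c]);
      }
    }
  }
  const moves: Cell[] = [[1, 0], [-1, 0], [0, 1], [0, -1]];
  for (let head = 0; head < queue.length; head++) {
    const [r, c] = queue[head];
    for (const [dr, dc] of moves) {
      const nr = r + dr;
      const nc = c + dc;
      if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue; // off the grid
      if (maze[nr][nc] === "#" || dist[nr][nc] !== Infinity) continue; // wall or seen
      dist[nr][nc] = dist[r][c] + 1;
      queue.push([nr, nc]);
    }
  }
  return dist;
}

// Optimal number of moves from 'S' to 'G': a baseline that a learning
// agent's runs could be scored against.
function shortestRun(maze: string[]): number {
  const dist = floodFill(maze);
  for (let r = 0; r < maze.length; r++) {
    const c = maze[r].indexOf("S");
    if (c >= 0) return dist[r][c];
  }
  throw new Error("no start cell");
}
```

For example, in the grid `["S.#.", ".##.", "...G"]` the only route runs down the left column and along the bottom row, so the optimum is 5 moves.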

If anyone is interested in collaborating (#collab #collaboration) on any of these aspects, even just by exchanging thoughts, let me know!

JeremyFromEarth · @jeremyfromearth

Server: sigmoid.social

I need to find the distance between two high-dimensional matrices. I'm thinking of a naive solution in which I flatten each matrix into a 1D vector and then compute the Euclidean distance. I've done some initial experiments with this and it seems to work, but I'm not an expert in linear algebra, so I thought I'd ask whether anyone can suggest a better solution. By the way, I'm doing this in #JavaScript with the math.js library, so no NumPy, unfortunately. #math #linearalgebra #matrices #backprop
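Flattening both matrices and taking the Euclidean distance gives exactly the Frobenius norm of their difference, ‖A - B‖_F = sqrt(Σ_ij (a_ij - b_ij)²), so the naive approach is the standard one. Here is a minimal library-free TypeScript sketch; the names `NDArray` and `frobeniusDistance` are hypothetical. It walks two nested arrays of any depth in lockstep, so no explicit flattening is needed and mismatched shapes are rejected. With math.js, `math.norm(math.subtract(A, B), 'fro')` should compute the same quantity; check the math.js documentation for your version.

```typescript
// A nested array of numbers of any depth (matrix, 3-D tensor, ...).
type NDArray = number | NDArray[];

// Sum of squared element-wise differences, walking both inputs together.
function sumSquaredDiff(a: NDArray, b: NDArray): number {
  if (typeof a === "number" && typeof b === "number") {
    const d = a - b;
    return d * d;
  }
  if (Array.isArray(a) && Array.isArray(b) && a.length === b.length) {
    let s = 0;
    for (let i = 0; i < a.length; i++) s += sumSquaredDiff(a[i], b[i]);
    return s;
  }
  throw new Error("shape mismatch");
}

// Equivalent to flattening both inputs and taking the Euclidean distance.
function frobeniusDistance(a: NDArray, b: NDArray): number {
  return Math.sqrt(sumSquaredDiff(a, b));
}
```

Dividing the sum of squares by the number of elements before taking the square root gives the RMS difference instead. That version is easier to compare across matrices of different sizes.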

antonio vergari · @nolovedeeplearning

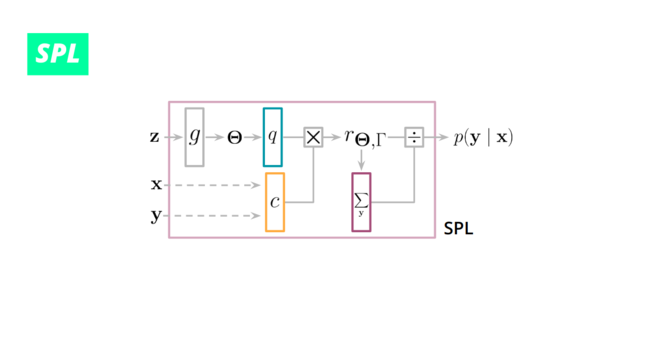

Server: mastodon.social

Our #Semantic #Probabilistic #Layers (#SPLs), instead, always guarantee, 100% of the time, that predictions satisfy the injected constraints!

They can readily be used in deep nets, since they can be trained with #backprop and #maximum #likelihood #estimation.

4/
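SPLs make this tractable with probabilistic circuits. The sketch below is a brute-force illustration only, and it shows why such a guarantee can hold. The output distribution is p(y) = q(y)·c(y)/Z, where c(y) is 1 if y satisfies the constraint and 0 otherwise. Every assignment with nonzero probability, including the prediction, therefore satisfies the constraint. The sketch assumes a handful of binary labels and a fully factorized base distribution q; all helper names are hypothetical. The negative log-likelihood of an observed label vector is the maximum-likelihood loss that backprop would minimize.

```typescript
// A constraint is a predicate over a complete binary label assignment.
type Constraint = (y: boolean[]) => boolean;

// All 2^n assignments of n binary labels (brute force: small n only).
function allAssignments(n: number): boolean[][] {
  const out: boolean[][] = [];
  for (let m = 0; m < 1 << n; m++) {
    const y: boolean[] = [];
    for (let i = 0; i < n; i++) y.push(((m >> i) & 1) === 1);
    out.push(y);
  }
  return out;
}

// log q(y) for a fully factorized Bernoulli model with per-label logits s_i:
// log sigmoid(s) = -log(1 + e^-s), log(1 - sigmoid(s)) = -log(1 + e^s).
function logQ(logits: number[], y: boolean[]): number {
  let lp = 0;
  for (let i = 0; i < logits.length; i++) {
    lp -= Math.log1p(Math.exp(y[i] ? -logits[i] : logits[i]));
  }
  return lp;
}

// log Z = log of the sum of q(y) over valid y, computed with log-sum-exp.
function logZ(logits: number[], c: Constraint): number {
  const lps = allAssignments(logits.length).filter(c).map((y) => logQ(logits, y));
  if (lps.length === 0) throw new Error("constraint is unsatisfiable");
  const m = Math.max(...lps);
  return m + Math.log(lps.reduce((s, lp) => s + Math.exp(lp - m), 0));
}

// log p(y) = log q(y) + log c(y) - log Z; -Infinity for invalid y.
function logP(logits: number[], y: boolean[], c: Constraint): number {
  return c(y) ? logQ(logits, y) - logZ(logits, c) : -Infinity;
}

// MAP prediction: searched only over valid assignments, so it always
// satisfies the constraint.
function predict(logits: number[], c: Constraint): boolean[] {
  let best: boolean[] | null = null;
  let bestLp = -Infinity;
  for (const y of allAssignments(logits.length)) {
    if (!c(y)) continue;
    const lp = logQ(logits, y);
    if (best === null || lp > bestLp) {
      bestLp = lp;
      best = y;
    }
  }
  if (best === null) throw new Error("constraint is unsatisfiable");
  return best;
}

// Maximum-likelihood training loss for one observed label vector.
function nll(logits: number[], target: boolean[], c: Constraint): number {
  return -logP(logits, target, c);
}
```

Take three labels with logits [2, 0.5, 1] and the constraint "exactly one label is true". Thresholding each label independently would switch on all three, which violates the constraint. The constrained layer predicts only the first label.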
