David Meyer · @dmm

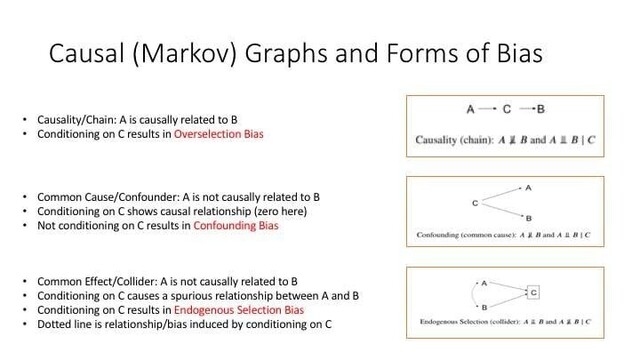

242 followers · 629 posts · Server mathstodon.xyz

This is a slide about causal reasoning that I made a decade or so ago that tries to characterize the sources of bias found in causal (Markov) graphs. These graphs are the basis of many forms of causal reasoning [1].

My notes are here: https://davidmeyer.github.io/ml/dags_causality_and_bias.pdf

As always, questions/comments/corrections/* greatly appreciated.

References

--------------

[1] "Graphical Models for Probabilistic and Causal Reasoning", https://ftp.cs.ucla.edu/pub/stat_ser/r236-3ed.pdf

Benjamin Han · @BenjaminHan

395 followers · 1067 posts · Server sigmoid.social

10/end

[4] https://www.linkedin.com/posts/benjaminhan_reasoning-gpt-gpt4-activity-7060428182910373888-JnGQ

[5] Zhijing Jin, Jiarui Liu, Zhiheng Lyu, Spencer Poff, Mrinmaya Sachan, Rada Mihalcea, Mona Diab, and Bernhard Schölkopf. 2023. Can Large Language Models Infer Causation from Correlation? http://arxiv.org/abs/2306.05836

#Paper #NLP #NLProc #CodeGeneration #Causation #CausalReasoning #reasoning #research


Benjamin Han · @BenjaminHan

395 followers · 1066 posts · Server sigmoid.social

9/

[2] Antonio Valerio Miceli-Barone, Fazl Barez, Ioannis Konstas, and Shay B. Cohen. 2023. The Larger They Are, the Harder They Fail: Language Models do not Recognize Identifier Swaps in Python. http://arxiv.org/abs/2305.15507

[3] Emre Kıcıman, Robert Ness, Amit Sharma, and Chenhao Tan. 2023. Causal Reasoning and Large Language Models: Opening a New Frontier for Causality. http://arxiv.org/abs/2305.00050

#Paper #NLP #NLProc #CodeGeneration #Causation #CausalReasoning #reasoning #research


Benjamin Han · @BenjaminHan

395 followers · 1065 posts · Server sigmoid.social

8/

[1] Xiaojuan Tang, Zilong Zheng, Jiaqi Li, Fanxu Meng, Song-Chun Zhu, Yitao Liang, and Muhan Zhang. 2023. Large Language Models are In-Context Semantic Reasoners rather than Symbolic Reasoners. http://arxiv.org/abs/2305.14825

#Paper #NLP #NLProc #CodeGeneration #Causation #CausalReasoning #reasoning #research


Benjamin Han · @BenjaminHan

395 followers · 1065 posts · Server sigmoid.social

7/

The results? Both #GPT4 and #Alpaca perform worse than BART fine-tuned on MNLI, and not much better than the uniform random baseline (screenshot).

#Paper #NLP #NLProc #CodeGeneration #Causation #CausalReasoning #reasoning #research


Benjamin Han · @BenjaminHan

395 followers · 1065 posts · Server sigmoid.social

6/

In a more recent work [5], the authors tested LLMs on *pure* #causalInference tasks, where all variables are now symbolic (screenshot 1). They systematically constructed a dataset by first picking variables, then generating all possible #causalGraphs, and finally mapping all possible statistical #correlations. They then “verbalize” these graphs into problems for LLMs to solve for a given causation hypothesis (screenshot 2).
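The graph-generation and verbalization steps can be sketched roughly as follows. This is only a minimal illustration under my own assumptions, not the pipeline from [5]: the function names (`all_dags`, `is_acyclic`, `verbalize`) and the sentence template are hypothetical, and the step that maps each graph to its statistical correlations is left out.

```python
from itertools import combinations, product


def is_acyclic(nodes, edges):
    """Kahn-style check: keep removing nodes that have no incoming edge."""
    remaining = set(nodes)
    edges = set(edges)
    while remaining:
        sources = {n for n in remaining if not any(v == n for _, v in edges)}
        if not sources:
            return False  # every remaining node has a parent, so there is a cycle
        remaining -= sources
        edges = {(u, v) for u, v in edges if u not in sources}
    return True


def all_dags(nodes):
    """Enumerate every directed acyclic graph over `nodes` (hypothetical helper)."""
    pairs = list(combinations(nodes, 2))
    dags = []
    # Each unordered pair is either unconnected, a -> b, or b -> a.
    for choice in product((None, "fwd", "bwd"), repeat=len(pairs)):
        edges = []
        for (a, b), direction in zip(pairs, choice):
            if direction == "fwd":
                edges.append((a, b))
            elif direction == "bwd":
                edges.append((b, a))
        if is_acyclic(nodes, edges):
            dags.append(tuple(sorted(edges)))
    return dags


def verbalize(edges):
    """Turn a graph into plain sentences (template is an assumption)."""
    if not edges:
        return "No variable directly causes another."
    return " ".join(f"{u} directly causes {v}." for u, v in edges)


if __name__ == "__main__":
    graphs = all_dags(["A", "B", "C"])
    print(len(graphs))  # 25 labeled DAGs exist over three variables
    print(verbalize(graphs[-1]))
```

Brute-force enumeration like this is only practical for a handful of variables, since the number of DAGs grows super-exponentially with the number of nodes.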

#causalinference #causalgraphs #correlations #paper #nlp #nlproc #causation #causalreasoning #research

Benjamin Han · @BenjaminHan

395 followers · 1062 posts · Server sigmoid.social

5/

This shows the semantic priors learned from these function names have totally dominated, and the models don’t really understand what they are doing.

How about LLMs on #causalReasoning? There have been reports of extremely impressive performance from #GPT 3.5 and 4, but these models also lack consistency in performance and may even have cheated by memorizing the tests [3], as discussed in a previous post [4].

#Paper #NLP #NLProc #Causation #CausalReasoning #reasoning #research


Benjamin Han · @BenjaminHan

395 followers · 1061 posts · Server sigmoid.social

4/

The same tendency is borne out by another paper that tests code-generating LLMs when function names are *swapped* in the input [2] (screenshot 1). The authors found not only that almost all models failed completely, but also that most of them exhibit an “inverse scaling” effect: the larger a model is, the worse it gets (screenshot 2).
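To make the idea of an identifier swap concrete, here is a small hypothetical example of my own (not taken from the paper's benchmark). Inside the function, the names `len` and `print` are rebound to each other's builtins, so the correct way to count the items is to call `print`. The function name `count_items` is made up for illustration.

```python
import builtins


def count_items(items):
    # Swap the two builtins inside this function only (hypothetical example).
    len, print = builtins.print, builtins.len
    # After the swap, `print` is the length function, so this returns the count;
    # calling `len(items)` here would print the list and return None.
    return print(items)


if __name__ == "__main__":
    assert count_items(["a", "b", "c"]) == 3
```

A model that follows the usual meaning of the names would complete the body with `len(items)`, which is wrong after the swap.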

#Paper #NLP #NLProc #CodeGeneration #Causation #CausalReasoning #reasoning #research


Benjamin Han · @BenjaminHan

395 followers · 1061 posts · Server sigmoid.social

3/

The end result? LLMs perform much worse on *symbolic* reasoning (screenshot), suggesting that they lean heavily on the semantics of the words involved rather than really understanding and following reasoning patterns.

#Paper #NLP #NLProc #CodeGeneration #Causation #CausalReasoning #reasoning #research


Benjamin Han · @BenjaminHan

395 followers · 1061 posts · Server sigmoid.social

2/

They use a symbolic dataset and a semantic dataset to test models’ abilities on memorization and reasoning (screenshot 1). For each dataset they created a corresponding one in the other modality, e.g., they replace natural language labels for the relations and the entities with abstract symbols to create a symbolic version of a semantic dataset (screenshot 2).
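The semantic-to-symbolic conversion can be pictured with a short sketch. This is an assumption-laden illustration, not the authors' code: the triple format, the `e1`/`r1` naming scheme, and the `symbolize` helper are all hypothetical.

```python
def symbolize(triples):
    """Replace entity and relation labels with abstract symbols, consistently."""
    entity_ids = {}
    relation_ids = {}
    symbolic = []
    for head, relation, tail in triples:
        # setdefault keeps the first symbol assigned to each label.
        h = entity_ids.setdefault(head, f"e{len(entity_ids) + 1}")
        r = relation_ids.setdefault(relation, f"r{len(relation_ids) + 1}")
        t = entity_ids.setdefault(tail, f"e{len(entity_ids) + 1}")
        symbolic.append((h, r, t))
    return symbolic


if __name__ == "__main__":
    facts = [("Alice", "parentOf", "Bob"), ("Bob", "parentOf", "Carol")]
    print(symbolize(facts))  # [('e1', 'r1', 'e2'), ('e2', 'r1', 'e3')]
```

The logical structure of the facts is unchanged; only the surface labels that carry everyday meaning are gone.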

#Paper #NLP #NLProc #CodeGeneration #Causation #CausalReasoning #reasoning #research


Benjamin Han · @BenjaminHan

395 followers · 1061 posts · Server sigmoid.social

1/

When performing reasoning or generating code, do #LLMs really understand what they’re doing, or do they just memorize? Several new results seem to paint a not-so-rosy picture.

The authors in [1] are interested in testing LLMs on “semantic” vs. “symbolic” reasoning: the former involves reasoning with language-like input, and the latter is reasoning with abstract symbols.

#Paper #NLP #NLProc #CodeGeneration #Causation #CausalReasoning #reasoning #research


David Jones · @dj13730

1 followers · 1 posts · Server mastodon.social

Hello world.

I'm David, a lecturer in Computer Science at #aberystwythuniversity.

Interested in #systemsengineering #cyberphysicalsystems #digitaltwin #knowledgemanagement #engineeringinformatics and #CausalReasoning at work, #homebrewing #wildfermentation #realales #mead, #drawing, #mechanics and #woodworking outside of work, and #linux #opensourcesoftware #aberystwyth intersecting the two.

Current (but fading) special interest is #mandala drawing.

#aberystwythuniversity #systemsengineering #cyberphysicalsystems #digitaltwin #knowledgemanagement #engineeringinformatics #causalreasoning #homebrewing #wildfermentation #realales #mead #drawing #mechanics #woodworking #linux #opensourcesoftware #aberystwyth #mandala #croeso #introducion

Charlotte Guo · @charlotteguo

83 followers · 23 posts · Server mas.to

In need of a holiday read? why not join milu - seen here pondering whether he's capable of #causalinference - in learning about mental models and legal reasoning! brought to you by our PI, David Lagnado, with descending bass lines on top 🐾 if you are interested in #decisionmaking #causalreasoning #mentalmodels and #legalreassoning this book is for you!


Eva Reindl · @Miss_Daffodil

47 followers · 8 posts · Server mastodon.social

Just adding a few more professional interests, so that people can find me. Hello again 😊 #CulturalEvolution #CumulativeCulture #DevelopmentalPsychology #ToolUse #Innovation #ExecutiveFunctions #WorkingMemory #Primates #ComparativeCognition #Cognition #AnimalCulture #Cerebellum #EvolutionOfTechnology #SequenceLearning #SequenceCognition #SocialLearning #CausalReasoning
