KubeFred 🗿 · @kubefred

27 followers · 328 posts · Server techhub.social

I like when people ask if you can change the #CNI in a #kubernetes cluster on the fly, without destroying and recreating the cluster. Well, you *can*, but is it simple, inexpensive, and quick? No. It also depends heavily on your specific application deployments.

https://cilium.io/blog/2023/09/07/db-schenker-migration-to-cilium/

#cni #kubernetes #ebpf #cilium

Amanibhavam · @defn

26 followers · 410 posts · Server hachyderm.io

I have no idea if my cluster is any faster, but it is now kube-proxy-free and passing the Cilium multi-node connectivity tests!

defn · @defn

24 followers · 392 posts · Server hachyderm.io

DID IT!!!!!!!!!!!!!!

Karpenter couldn't recognize the k3s nodes because their node provider ID was `k3s://...` instead of `aws:///$az/$instance_id`.

The screenshot shows Karpenter recognizing Machines that have joined the cluster as Nodes, with Cilium attached to each node.
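The mismatch is a plain string-format problem: AWS-aware controllers expect a node's `spec.providerID` in the form `aws:///<availability-zone>/<instance-id>` (empty host part, hence three slashes), while these k3s nodes carried a `k3s://` scheme. A minimal sketch of building and recognizing the AWS form; the helper names are hypothetical, and the regex assumes the usual `i-` plus hex instance ID shape.

```python
import re

# AWS-style provider IDs: aws:///<availability-zone>/<instance-id>
# (the host component is empty, which is why there are three slashes).
AWS_PROVIDER_ID = re.compile(r"^aws:///(?P<az>[a-z0-9-]+)/(?P<instance_id>i-[0-9a-f]+)$")


def aws_provider_id(az, instance_id):
    """Build the providerID string an AWS-aware controller would look for (hypothetical helper)."""
    return f"aws:///{az}/{instance_id}"


def parse_aws_provider_id(provider_id):
    """Return (az, instance_id) for an AWS provider ID, or None for anything else (e.g. k3s://...)."""
    m = AWS_PROVIDER_ID.match(provider_id)
    return (m["az"], m["instance_id"]) if m else None


print(aws_provider_id("us-east-1a", "i-0123456789abcdef0"))  # aws:///us-east-1a/i-0123456789abcdef0
print(parse_aws_provider_id("k3s://node-1"))  # None
```

A node whose provider ID fails `parse_aws_provider_id` is, in this sketch, one an AWS-specific controller has no way to map back to an EC2 instance.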

Michael · @mmeier

210 followers · 3600 posts · Server social.mei-home.net

Okay, looks like it. Cilium happily hands out 10.0.0.0/8 addresses.

And it seems that you can't "just remove" Cilium either. `cilium uninstall` removes the Cilium pods, but it doesn't change anything for existing or newly created pods.

So days since I nuked my Kubernetes cluster: 0. 😅

Michael · @mmeier

210 followers · 3592 posts · Server social.mei-home.net

Is it possible that Cilium completely ignores the "podSubnet" config I set with kubeadm when I set up my cluster?

It is definitely handing out Pod IPs outside that CIDR range. I guess I now get to find out how well the cluster reacts to me removing Cilium and reinstalling it.
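One way to confirm the symptom is to test each pod IP against the kubeadm `podSubnet`. The behavior is consistent with Cilium's default `cluster-pool` IPAM mode, which allocates from its own pool (10.0.0.0/8 by default) rather than from the per-node PodCIDRs Kubernetes assigns; `ipam.mode=kubernetes` is the setting that makes Cilium use those instead. The subnet and IPs below are hypothetical; in practice they would come from the kubeadm config and `kubectl get pods -A -o wide`.

```python
import ipaddress


def pods_outside_subnet(pod_ips, pod_subnet):
    """Return the pod IPs that are NOT inside pod_subnet (e.g. kubeadm's podSubnet)."""
    network = ipaddress.ip_network(pod_subnet)
    return [ip for ip in pod_ips if ipaddress.ip_address(ip) not in network]


# Hypothetical example values.
POD_SUBNET = "10.244.0.0/16"
observed_ips = ["10.244.1.7", "10.0.0.15", "10.0.2.33"]
print(pods_outside_subnet(observed_ips, POD_SUBNET))  # ['10.0.0.15', '10.0.2.33']
```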

defn · @defn

23 followers · 373 posts · Server hachyderm.io

Wow, just solved a long-standing problem with Cilium's incompatibility with the k3d Docker image.

By building Nix in a multi-stage Earthly build, I was able to simply copy /nix into k3d's image and symlink the util-linux and bash-interactive packages into /bin.
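The symlink step can be sketched as a small helper that links everything under each package's `bin/` directory into a target `bin` directory without clobbering files already there. This is an illustration of the idea, not the author's Earthly build: the store path is a hypothetical placeholder (real Nix store paths carry a hash prefix), and the demo runs in a temporary directory rather than touching the real /bin.

```python
import tempfile
from pathlib import Path


def link_package_bins(package_dirs, target_bin):
    """Symlink each entry of <package>/bin into target_bin; return the names that were linked."""
    target = Path(target_bin)
    target.mkdir(parents=True, exist_ok=True)
    linked = []
    for pkg in package_dirs:
        bin_dir = Path(pkg) / "bin"
        if not bin_dir.is_dir():
            continue  # package exposes no bin/ directory
        for entry in sorted(bin_dir.iterdir()):
            link = target / entry.name
            if link.exists() or link.is_symlink():
                continue  # keep whatever the base image already provides
            link.symlink_to(entry)
            linked.append(entry.name)
    return linked


# Demo in a scratch directory; the package path is hypothetical.
with tempfile.TemporaryDirectory() as root:
    bash_pkg = Path(root, "nix/store/HASH-bash-interactive")
    (bash_pkg / "bin").mkdir(parents=True)
    (bash_pkg / "bin" / "bash").touch()
    (bash_pkg / "bin" / "sh").touch()
    image_bin = Path(root, "bin")
    image_bin.mkdir()
    (image_bin / "sh").touch()  # pretend the base image already ships /bin/sh
    demo_linked = link_package_bins([bash_pkg], image_bin)
    print(demo_linked)  # ['bash']
```

Skipping existing names is a deliberate choice here: it leaves the base image's own binaries in place and only fills the gaps.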

defn · @defn

9 followers · 203 posts · Server hachyderm.io

Linkerd 2.14 has multi-cluster support for shared flat networks.

I've been using shared VPC subnets and Tailscale to create a shared flat network.

Let's see if Linkerd can work on top of a Cilium CNI whose nodes are connected via Tailscale, with Tailscale providing the external/internal IPs.

Even Buoyant thinks this is an awesome idea.

- https://buoyant.io/blog/kubernetes-network-policies-with-cilium-and-linkerd

- https://buoyant.io/blog/announcing-linkerd-2-14-flat-network-multicluster-gateway-api-conformance

Mr.Trunk · @mrtrunk

6 followers · 13867 posts · Server dromedary.seedoubleyou.me

SecurityOnline: cilium v1.14.1 releases: eBPF-based Networking, Security, and Observability https://securityonline.info/cilium-ebpf-based-networking-security-and-observability/ #Defense #Cilium

Unni P · @iamunnip

15 followers · 136 posts · Server cloud-native.social

I have completed the Security Summer School 2023 program by successfully finishing the following labs.

✅Isovalent Enterprise for Cilium: Network Policies

✅Cilium Transparent Encryption with IPSec and WireGuard

✅Cilium Enterprise: Zero Trust Visibility

#isovalent #cilium

Unni P · @iamunnip

15 followers · 136 posts · Server cloud-native.social

Completed the Cilium Enterprise: Zero Trust Visibility lab and got a new badge from Isovalent!

#cilium #isovalent #zerotrust #kubernetes

https://www.credly.com/badges/c417fdb5-09dd-4b4c-9de5-3fb4e501dd55/public_url

Unni P · @iamunnip

14 followers · 132 posts · Server cloud-native.social

Completed the Cilium Transparent Encryption with IPSec and WireGuard lab and got a new badge from Isovalent!

https://www.credly.com/badges/d8cdaae7-b307-48c5-bad6-021f8980b603/public_url

✅ Installing Cilium and setting up IPsec for transparent encryption

✅ Managing Day 2 operations with IPsec on Cilium

✅ Setting up pod to pod transparent encryption using Cilium WireGuard

✅ Setting up node to node transparent encryption using Cilium WireGuard

#cilium #ipsec #wireguard #isovalent

Mr.Trunk · @mrtrunk

5 followers · 8455 posts · Server dromedary.seedoubleyou.me

SecurityOnline: cilium v1.14 releases: eBPF-based Networking, Security, and Observability https://securityonline.info/cilium-ebpf-based-networking-security-and-observability/ #Defense #Cilium

farcaller · @farcaller

110 followers · 1294 posts · Server hdev.im

@SerhiyMakarenko #cilium. It's actually awesome. I’ve tried all the major ones over the years and I’m pretty happy with what cilium provides (it's like a good chunk of #istio service mesh but purely within your CNI)

M. Hamzah Khan · @mhamzahkhan

540 followers · 3688 posts · Server intahnet.co.uk

Joseph Ligier · @littlejo

13 followers · 71 posts · Server piaille.fr

My summer with #Cilium and #EKS https://medium.com/@littel.jo/mon-%C3%A9t%C3%A9-avec-cilium-et-eks-partie-1-99a66ed6671f #kubernetes #networking

#cilium #eks #kubernetes #networking

farcaller · @farcaller

100 followers · 1169 posts · Server hdev.im

Here's how you do it: pull the #cilium Hubble flows from Prometheus (actually VictoriaMetrics), transform labels into fields, then add computed fields for everything Grafana expects: https://grafana.com/docs/grafana/latest/panels-visualizations/visualizations/node-graph/#data-api

Painfully slow. OOMs.
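The label-to-node-graph transformation can be sketched outside Grafana: sum the flow counts per (source, destination) pair, then emit the two frames the node graph panel reads, a nodes frame keyed by `id` and an edges frame with `id`, `source`, and `target`. The `source`/`destination` label names and the sample values are assumptions (the labels a Hubble flow metric carries depend on its configured context options), and `mainstat` is used as one of the optional fields in Grafana's node graph data API.

```python
from collections import defaultdict


def to_node_graph(samples):
    """Turn (labels, value) flow samples into node-graph nodes and edges.

    Each sample is assumed to carry 'source' and 'destination' labels.
    """
    edge_totals = defaultdict(float)
    for labels, value in samples:
        edge_totals[(labels["source"], labels["destination"])] += value

    # Every endpoint that appears on either side of an edge becomes a node.
    node_ids = sorted({n for pair in edge_totals for n in pair})
    nodes = [{"id": n, "title": n} for n in node_ids]
    edges = [
        {"id": f"{src}->{dst}", "source": src, "target": dst, "mainstat": total}
        for (src, dst), total in sorted(edge_totals.items())
    ]
    return nodes, edges


# Hypothetical samples, as if read back from a Prometheus-compatible query.
samples = [
    ({"source": "default/lemmy-ui", "destination": "default/lemmy"}, 12.0),
    ({"source": "default/lemmy-ui", "destination": "default/lemmy"}, 3.0),
    ({"source": "ingress/nginx", "destination": "default/lemmy-ui"}, 7.0),
]
nodes, edges = to_node_graph(samples)
print([n["id"] for n in nodes])  # ['default/lemmy', 'default/lemmy-ui', 'ingress/nginx']
```

Aggregating per edge before building the frames means the output grows with the number of distinct endpoint pairs, not with the number of raw series.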

farcaller · @farcaller

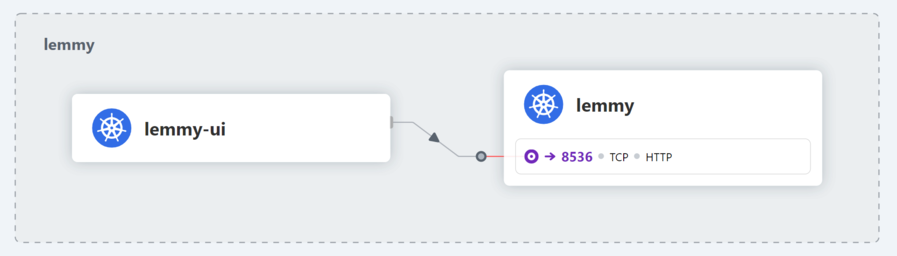

98 followers · 1130 posts · Server hdev.im

#cilium's Hubble actually tells you which side of the policy is rejecting the traffic! Here, it's lemmy not accepting traffic from lemmy-ui.
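Hubble can attribute the drop because each flow records the direction in which it was observed: a policy drop seen on ingress was rejected by the destination's ingress policy, and one seen on egress by the source's egress policy. A minimal sketch over one line of `hubble observe -o json` output; the field names (`verdict`, `drop_reason_desc`, `traffic_direction`, `pod_name`) are assumed from Hubble's flow JSON and worth checking against your Hubble version, and the pod names are hypothetical.

```python
import json


def rejecting_side(flow_line):
    """Describe which endpoint's policy dropped a flow, or return None if it wasn't a policy drop."""
    flow = json.loads(flow_line).get("flow", {})
    if flow.get("verdict") != "DROPPED" or flow.get("drop_reason_desc") != "POLICY_DENIED":
        return None
    src = flow.get("source", {}).get("pod_name", "?")
    dst = flow.get("destination", {}).get("pod_name", "?")
    direction = flow.get("traffic_direction")
    if direction == "INGRESS":
        # Dropped on the way in: the destination's ingress policy said no.
        return f"{dst} (ingress) rejected traffic from {src}"
    if direction == "EGRESS":
        # Dropped on the way out: the source's egress policy said no.
        return f"{src} (egress) refused to send traffic to {dst}"
    return None


# Hypothetical, trimmed-down flow record.
sample = json.dumps({"flow": {
    "verdict": "DROPPED",
    "drop_reason_desc": "POLICY_DENIED",
    "traffic_direction": "INGRESS",
    "source": {"pod_name": "lemmy-ui-0"},
    "destination": {"pod_name": "lemmy-0"},
}})
print(rejecting_side(sample))  # lemmy-0 (ingress) rejected traffic from lemmy-ui-0
```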

Unni P · @iamunnip

11 followers · 121 posts · Server cloud-native.social

Level up your security skills with Team Isovalent's virtual Security Summer School: three sessions with hands-on workshops where you learn how Cilium, Tetragon, and Hubble help improve Kubernetes security.

Earn a swag box by completing all 3 sessions!

Sign up here: https://isovalent.com/events/2023-07-security-summer-school/

#isovalent #cilium #tetragon #hubble #kubernetes #security

farcaller · @farcaller

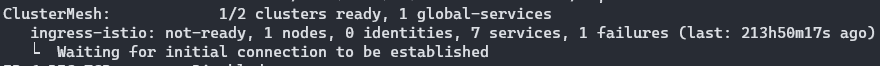

89 followers · 885 posts · Server hdev.im

I'm giving up on the #cilium multicluster peering. It's an amazing idea on paper: you can have traffic flow freely between clusters and write network policies that span all the endpoints.

In practice, it's wonky and fragile. For example, my web cluster has failed to get the endpoints from the ingress cluster for DAYS now, which means the web cluster's network policies just reject the traffic.

Yes, restarting cilium-agent by hand helps. But seriously? Why is that thing so fragile!

Denis GERMAIN · @zwindler

482 followers · 3633 posts · Server framapiaf.org

Experience report: #Kubernetes, #kubeadm, #Ubuntu 22.04 and #cilium - my recent little struggles

https://blog.zwindler.fr/2023/06/06/kubeadm-ubuntu-cilium-mes-petites-galeres/

#cilium #ubuntu #kubeadm #kubernetes