New Submissions to TMLR · @tmlrsub

206 followers · 764 posts · Server sigmoid.social

Training DNNs Resilient to Adversarial and Random Bit-Flips by Learning Quantization Ranges

#adversarial #quantization #dnns

New Submissions to TMLR · @tmlrsub

203 followers · 735 posts · Server sigmoid.social

Beyond Distribution Shift: Spurious Features Through the Lens of Training Dynamics

#learned #dnns #generalization

Christos Argyropoulos MD, PhD · @ChristosArgyrop

336 followers · 445 posts · Server mstdn.science

Compressors such as #gzip + #kNN (k-nearest-neighbor, i.e. your grandparents' #classifier) beat the living daylights out of deep neural networks (#DNNs) in sentence classification.

H/t @lgessler

Without any training parameters, this non-parametric, easy and lightweight (no #GPU) method achieves results that are competitive with non-pretrained deep learning methods on six in-distribution datasets. It even outperforms BERT on all five OOD datasets.

#gzip #knn #classifier #dnns #gpu #ai #machinelearning
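A minimal sketch of how a gzip + kNN classifier of this kind can work, assuming the distance is the standard normalized compression distance (NCD) and the prediction is a majority vote over the k nearest training texts. The post does not spell out these details, so the function names (`compressed_len`, `ncd`, `knn_predict`), the choice of a space as the joining character, and the default `k=3` are illustrative assumptions.

```python
import gzip
from collections import Counter


def compressed_len(text: str) -> int:
    """Length in bytes of the gzip-compressed UTF-8 encoding of text."""
    return len(gzip.compress(text.encode("utf-8")))


def ncd(a: str, b: str) -> float:
    """Normalized compression distance between two strings.

    Texts that share content compress better together, so the
    concatenation costs little more than the larger part alone.
    """
    ca, cb = compressed_len(a), compressed_len(b)
    cab = compressed_len(a + " " + b)  # joining with a space is an assumption
    return (cab - min(ca, cb)) / max(ca, cb)


def knn_predict(query: str, train: list[tuple[str, str]], k: int = 3) -> str:
    """Label the query by majority vote among its k nearest training texts."""
    nearest = sorted(train, key=lambda pair: ncd(query, pair[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data, for illustration only.
train = [
    ("the cat sat on the mat and purred loudly", "pets"),
    ("my dog chased the ball across the garden", "pets"),
    ("quarterly revenue exceeded analyst forecasts by 12%", "finance"),
    ("the central bank raised interest rates again", "finance"),
]
label = knn_predict("the kitten purred on the mat", train, k=1)
```

Nothing is trained here: every prediction compresses the query against each training text, which is why the approach needs no GPU but scales linearly with the size of the training set.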

JMLR · @jmlr

675 followers · 275 posts · Server sigmoid.social

'Integrating Random Effects in Deep Neural Networks', by Giora Simchoni, Saharon Rosset.

Nick Byrd · @ByrdNick

781 followers · 307 posts · Server nerdculture.de

Why #DeepNeuralNetworks need #Logic:

Nick Shea (#UCL/#Oxford) suggests

(1) Generating novel stuff (e.g., #Dalle's art, #GPT's writing) is cool, but slow and inconsistent.

(2) Just a handful of logical inferences can be used *across* loads of situations (e.g., #modusPonens works the same way every time).

So (3) by #learning Logic, #DNNs would be able to recycle a few logical moves on a MASSIVE number of problems (rather than generate a novel solution from scratch for each one).

#DeepNeuralNetworks #logic #ucl #dalle #gpt #modusponens #learning #dnns #compsci #ai

JMLR · @jmlr

596 followers · 97 posts · Server sigmoid.social

'Advantage of Deep Neural Networks for Estimating Functions with Singularity on Hypersurfaces', by Masaaki Imaizumi, Kenji Fukumizu.

http://jmlr.org/papers/v23/21-0542.html

#dnns #dnn #singularity

CK's Technology News · @CKsTechnologyNews

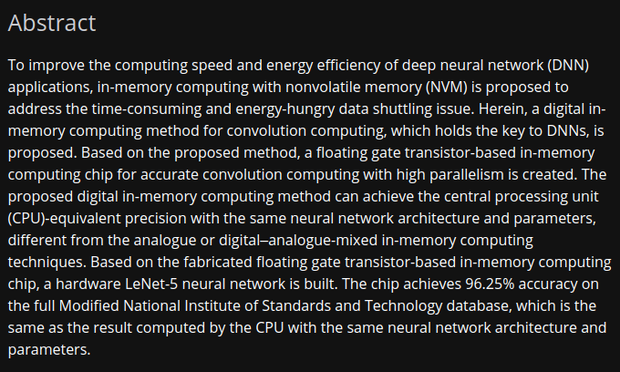

1534 followers · 31987 posts · Server mastodon.social

Floating Gate #Transistor Accurate Digital in‐Memory Computing for #DNNs

Paper

https://onlinelibrary.wiley.com/doi/full/10.1002/aisy.202200127