Abel Soares Siqueira · @abelsiqueira

90 followers · 40 posts · Server mathstodon.xyz

A very common question in many forums is why some languages, such as #JuliaLang, don't compute 0.1 + 0.2 - 0.3 correctly. Check https://abelsiqueira.com/blog/2023-08-30-julia-language-cannot-handle-simple-math/ for my latest video and post on the subject.

#floatingpoint #scientificcomputing #julialang
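As a rough illustration of the question (not taken from the linked post), the sketch below evaluates the same expression in Python, which uses the same IEEE 754 binary64 format as Julia's `Float64`. The helper names `residual` and `stored_value` are made up for this example.

```python
from fractions import Fraction


def residual():
    """Evaluate 0.1 + 0.2 - 0.3 in binary64 arithmetic."""
    return 0.1 + 0.2 - 0.3


def stored_value(x):
    """Return the exact rational value that the float x actually holds."""
    return Fraction(x)


# None of 0.1, 0.2, 0.3 is exactly representable in binary, so each literal
# is rounded before any arithmetic happens.
for literal, value in (("0.1", 0.1), ("0.2", 0.2), ("0.3", 0.3)):
    print(literal, "is stored as", stored_value(value))

print("0.1 + 0.2 - 0.3 =", residual())
```

The leftover is a single rounding error in the last bit of the sum, not a language bug.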

Marc B. Reynolds · @mbr

270 followers · 416 posts · Server mastodon.gamedev.place

I have some thoughts I'm attempting to write up WRT that quadratic paper (1) shared by @fatlimey. But anyway, since probably most peeps seeing this are interested in single-precision results... handling that case (if you can promote to doubles) is almost no work (2) at all. (Of course, it's all moot if spurious over/underflows aren't a concern.)

1) https://mastodon.gamedev.place/@fatlimey/110584284601341773

2) https://gist.github.com/Marc-B-Reynolds/7dee8803d22532e12d2a973c16a33897

Stewart Russell · @scruss

240 followers · 1622 posts · Server xoxo.zone

I've found a decent working approximation of π for #PostScript

/pi 0.001 sin 2 div 360000 mul def

Accurate to 7 decimal places. The language uses single-precision floating point, and all its trig routines work in degrees.

This calculates π using the area of a unit radius n-gon, with n=360000

A = ½n⋅sin(360°÷n)

One of Ramanujan's approximations

π ≅ 9801÷(2206⋅√2)

is about as accurate but opaque.

#postscript #mathematics #pi #approximation #floatingpoint
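A quick way to compare the two approximations is sketched below in Python. Two caveats: Python works in double precision rather than PostScript's single precision, and `math.radians` already uses π internally, so this only checks the formulas and is not an independent computation of π. The function names are made up for this example.

```python
import math


def polygon_pi(n):
    """Area of a regular n-gon inscribed in the unit circle: (n/2) * sin(360/n degrees)."""
    return 0.5 * n * math.sin(math.radians(360.0 / n))


def ramanujan_pi():
    """Ramanujan's approximation 9801 / (2206 * sqrt(2))."""
    return 9801 / (2206 * math.sqrt(2))


for name, value in (("n-gon, n=360000", polygon_pi(360000)),
                    ("Ramanujan", ramanujan_pi())):
    print(f"{name}: {value!r}  error = {abs(value - math.pi):.3e}")
```

The inscribed polygon always underestimates π, and in double precision its formula error is far below what single-precision arithmetic can show.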

Jitse Niesen · @jitseniesen

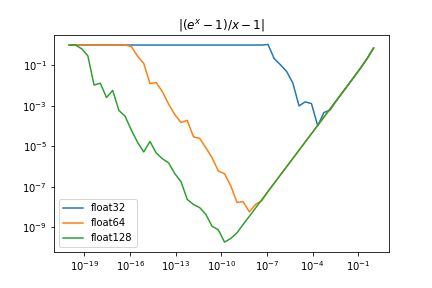

60 followers · 127 posts · Server mathstodon.xyz

Today I learned that what NumPy calls float128 is not the same as what IEEE calls binary128 and what I call quad precision. Sad.

Instead, NumPy's float128 is what I call extended precision (80 bits).

#numericalanalysis #numpy #floatingpoint

· @frederic

13 followers · 327 posts · Server mastodon.cloud

Why does 2**(-1022-53) get rounded to 2**(-1022-52) (the smallest subnormal 64-bit floating point number) in Python, whereas it gets rounded to 0 in node.js?

#IEEE754 #JavaScript #Nodejs #Python #floatingpoint

According to the IEEE 754 standard it should get rounded to 0 as the closest representable number with an even digit in the last position. So node.js is conforming to the IEEE 754 standard, while Python isn't.

Or am I making a mistake?
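The tie described here can be checked with exact rational arithmetic. The sketch below only confirms that 2**(-1022-53) lies exactly halfway between 0 and the smallest subnormal, and shows what one correctly rounded operation returns. It says nothing about how either runtime implements `**`. It assumes that CPython's `int / int` division is correctly rounded, which is my understanding of the implementation and is not a claim from the post. The names are made up for this example.

```python
from fractions import Fraction

SMALLEST_SUBNORMAL = Fraction(1, 2**1074)  # 2**(-1022-52), significand 1 (odd)
HALFWAY = Fraction(1, 2**1075)             # 2**(-1022-53), held exactly


def is_exact_tie():
    """True if HALFWAY is equally far from 0 and from the smallest subnormal."""
    return HALFWAY - 0 == SMALLEST_SUBNORMAL - HALFWAY


def correctly_rounded_quotient():
    """Divide two exact integers; assumed to round to nearest, ties to even."""
    return 1 / 2**1075


print("exact tie:", is_exact_tie())
print("1 / 2**1075 ->", correctly_rounded_quotient())
```

Of the two neighbours, 0 has the even significand, so round-half-to-even picks 0 for this exact value.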


· @frederic

13 followers · 302 posts · Server mastodon.cloud

The IEEE-754 Floating Point Guide

https://floating-point-gui.de/formats/fp/

#IEEE754 #Learning #Programming #Float #FloatingPoint


Felix LeClair (Wants a job😊) · @fclc

381 followers · 960 posts · Server mast.hpc.social

Has anyone managed to get an ASIC for Posits fabbed yet?

If so, what did they implement? “Simple” mul/add/sub/div?

Going to try and power through the literature and get a design ready for Monday

Keep finding literature references to FPGAs, but little to no references to ASICs

JohnMoyer · @johnmoyer

21 followers · 140 posts · Server okla.social

@steve

For those confused about NaN in the responses: NaN means "not a number". One may get a NaN from 0/0, while 1/0 is infinity.

http://perso.ens-lyon.fr/jean-michel.muller/goldberg.pdf

What Every Computer Scientist Should Know About Floating-Point Arithmetic, David Goldberg
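A small Python sketch of the special values mentioned above. Python raises `ZeroDivisionError` for float division by zero instead of returning the IEEE 754 default results, so the NaN here comes from another invalid operation, infinity minus infinity. The function names are made up for this example.

```python
import math


def special_values():
    """Return (inf, nan), producing the NaN through an invalid operation."""
    inf = math.inf
    nan = inf - inf  # invalid operation, same class of result as 0/0
    return inf, nan


def python_division_raises():
    """Python traps float division by zero rather than returning inf or NaN."""
    try:
        1.0 / 0.0
    except ZeroDivisionError:
        return True
    return False


inf, nan = special_values()
print("inf - inf =", nan)
print("nan == nan:", nan == nan)  # a NaN compares unequal even to itself
print("1.0 / 0.0 raises:", python_division_raises())
```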

Felix LeClair (Wants a job😊) · @fclc

379 followers · 907 posts · Server mast.hpc.social

Not sure if there’s a proper metric for Posit numbers yet, but can we please call them pops? Posit operations per second?

The idea of saying “this system can do 150 TPops” seems *really* fun

(Not to mention the audio folks can talk about how quickly they process Kpops 😝)

David Zaslavsky · @diazona

163 followers · 1908 posts · Server techhub.social

@stylus You're taking a negative number to a fractional power, that's why it's complex. Note that the same thing happens without any trig:

>>> (-1) ** (1/100**100)

(1+3.141592653589793e-200j)

And the real component of the true result differs from 1 by so little that a floating-point number doesn't have the precision to represent it. If you try it with some larger exponents, you'll notice that the real component differs from 1 by roughly the imaginary component squared:

>>> 1 - ((-1) ** (1/100**2))

(4.9348021557982236e-08-0.00031415926019126653j)

>>> 1 - ((-1) ** (1/100**3))

(4.934830322156358e-12-3.141592653584625e-06j)

>>> 1 - ((-1) ** (1/100**4))

(4.440892098500626e-16-3.1415926535897924e-08j)

Extending the pattern, the real component in your result would be something like 1 - (something)e-400, and you would need roughly a 1350-bit floating point data type to accurately represent that. Otherwise it just rounds to 1.
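The pattern follows from the small-angle expansions: for (-1)**t the angle is θ = πt, the real part is cos θ ≈ 1 - θ²/2 and the imaginary part is sin θ ≈ θ. So the gap between the real part and 1 is about half the square of the imaginary part. A sketch that checks this and the bit-count estimate is below. The function names are made up for this example.

```python
import math


def deviation_ratio(k):
    """Return (1 - real) / imag**2 for z = (-1) ** (1 / 100**k); expected near 0.5."""
    z = (-1) ** (1 / 100**k)
    return (1 - z.real) / z.imag**2


def bits_for_decimal_exponent(decimal_exponent):
    """Significand bits needed to resolve 1 - 10**(-decimal_exponent)."""
    return decimal_exponent * math.log2(10)


print("ratio for 1/100**2:", deviation_ratio(2))
print("ratio for 1/100**3:", deviation_ratio(3))
print("bits needed for ~1e-400:", bits_for_decimal_exponent(400))
```

For the 1/100**100 case, θ²/2 is about 4.9e-400, which matches the order of magnitude quoted above and needs a significand of roughly 1330 bits.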

Stylus 🦉 · @stylus

208 followers · 1715 posts · Server octodon.social

Can someone explain this to me? How did I get a complex number? How is the magnitude strictly above 1? #python #mathIsHard #floatingPoint (python3 on amd64 linux)

>>> from math import cos

>>> cos(9) ** (1 / 100**100.)

(1+3.141592653589793e-200j)


Edward Betts · @edward

604 followers · 323 posts · Server octodon.social

Whoops! Did somebody represent a price as a float?

#ocado #floatingpoint #roundofferror

Jorge Stolfi · @JorgeStolfi

309 followers · 1602 posts · Server mas.to

On a hunch, I went back and checked some 2000 C source files that I wrote over 30+ years for instances of 'abs' that should have been 'fabs'. To my embarrassment, I found more than 20 instances.

That could explain quite a few frustrating experiences with numerical software, such as improper stopping of iterations.

They could have been detected with the gcc -Wfloat-conversion switch, but it took me a decade or two to learn about it...

#languagedesign #clibrary #floatingpoint #programming
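To show how this bug can stop an iteration too early, here is a Python emulation (not C, and not the author's code). In C, `abs` takes an `int`, so a `double` argument is implicitly converted and loses its fractional part before the absolute value is taken. The helpers `c_abs_on_double`, `c_fabs` and `steps_until_converged` are hypothetical names for this sketch.

```python
def c_abs_on_double(x):
    """Emulate C's int abs(int) called with a double: truncate toward zero, then abs."""
    return abs(int(x))


def c_fabs(x):
    """Emulate C's fabs: absolute value with the fractional part kept."""
    return abs(float(x))


def steps_until_converged(magnitude, tol=1e-6, start=-0.9, factor=0.5, max_steps=1000):
    """Halve an error term until magnitude(error) drops below tol; return the step count."""
    err = start
    steps = 0
    while magnitude(err) >= tol and steps < max_steps:
        err *= factor
        steps += 1
    return steps


print("with abs :", steps_until_converged(c_abs_on_double))  # stops at once: int(-0.9) == 0
print("with fabs:", steps_until_converged(c_fabs))
```

Any error below 1 in magnitude truncates to 0, so the `abs` version reports convergence before doing any work.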

Corbin · @corbin

10 followers · 98 posts · Server defcon.social

I had a weird line of thought yesterday. Consider sine or cosine. These functions are periodic, and both the average value and integral of each period are zero.

So, if we consider inexact floating-point arithmetic (any #floatingpoint experts out there?) then big inputs should return small outputs, because an inexact big input covers a large range, and both the average and integral on that range are (probably) near zero.
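One way to make "an inexact big input covers a large range" concrete is to compare the spacing between adjacent doubles with the period 2π. The sketch below does that for 1e22, an arbitrary large value chosen for illustration. Note that library `sin` implementations generally treat the argument as an exact number and return the sine of that exact value, so they do not average over the interval as suggested above. `math.ulp` needs Python 3.9 or later, and the function name is made up for this example.

```python
import math


def spacing_and_period(x):
    """Return (gap between x and the next double, period of sine)."""
    return math.ulp(x), 2 * math.pi


ulp, period = spacing_and_period(1e22)
print("spacing of doubles near 1e22:", ulp)
print("period of sine:", period)
print("periods skipped between neighbouring doubles:", ulp / period)
print("sin(1e22) as computed:", math.sin(1e22))
```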

aegilops :github::microsoft: · @aegilops

118 followers · 416 posts · Server fosstodon.org

@steve that would "correct" zero real-world issues.

I'd never heard the term "mantissa" before seeing its use in FP - I've never needed to use a logarithm lookup table! They are obsolete.

Terms in mathematics and computer science are often appropriated by analogy, and that's all that happened here. It seems like you object to this?

Please don't spend effort on something that "solves" a non-problem and would lead to more confusion.

#Mantissa #floatingpoint #pedantry

Beej · @beejjorgensen

66 followers · 177 posts · Server mastodon.sdf.org

Toggle bits in IEEE 754 floating point format and see what comes out.

#programming #ieee754 #floatingpoint
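The same experiment can be sketched in a few lines of Python using the standard `struct` module. This is not the tool the post refers to, and `toggle_bit` is a name made up for this example. Bits are numbered from 0 (least significant fraction bit) to 63 (sign bit) of a binary64 value.

```python
import struct


def toggle_bit(x, bit):
    """Flip one bit of the binary64 encoding of x and return the resulting float."""
    (bits,) = struct.unpack("<Q", struct.pack("<d", x))
    (flipped,) = struct.unpack("<d", struct.pack("<Q", bits ^ (1 << bit)))
    return flipped


for bit in (63, 62, 52, 0):
    print(f"1.0 with bit {bit} toggled -> {toggle_bit(1.0, bit)!r}")
```

Bit 63 changes the sign, bits 52 to 62 change the exponent, and bits 0 to 51 change the fraction.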

lagomoof · @lagomoof

2 followers · 12 posts · Server mastodon.social

For x = 4776508211, √x - ln(x) is so close to an #integer (69090) that #C #double (IEEE754 binary64) #arithmetic can't tell the difference. #floatingpoint #math #maths

#integer #c #double #arithmetic #floatingpoint #math #maths
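A sketch for trying this out in Python, whose `float` is the same binary64 type as a C `double` on common platforms. The `decimal` module is used for a higher-precision comparison. The 50-digit setting and the function names are arbitrary choices for this example, and no outputs are claimed here.

```python
import math
from decimal import Decimal, localcontext

X = 4776508211


def double_result():
    """sqrt(x) - ln(x) evaluated in binary64 arithmetic."""
    return math.sqrt(X) - math.log(X)


def high_precision_result(digits=50):
    """The same expression evaluated with `digits` significant decimal digits."""
    with localcontext() as ctx:
        ctx.prec = digits
        d = Decimal(X)
        return d.sqrt() - d.ln()


print("binary64      :", repr(double_result()))
print("50 digits     :", high_precision_result())
print("gap from 69090:", high_precision_result() - 69090)
```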

vitaut 🤍❤️🤍 🇺🇦 · @vitaut

702 followers · 704 posts · Server mastodon.social

Thanks to Junekey Jeon, {fmt} now uses a faster algorithm when formatting floating point numbers with a given precision https://github.com/fmtlib/fmt/pull/3269 #programming #floatingpoint

George Macon · @gmacon

3 followers · 12 posts · Server indieweb.social

This week in #FunWithFloatingPoint, @b0rk talks about things that have actually gone wrong: https://jvns.ca/blog/2023/01/13/examples-of-floating-point-problems/

#softwareengineering #floatingpoint #funwithfloatingpoint

Beej · @beejjorgensen

47 followers · 103 posts · Server mastodon.sdf.org

Things that can go wrong with floating point math.

https://jvns.ca/blog/2023/01/13/examples-of-floating-point-problems/

#floatingpoint #computing #programming