Eneko Uruñuela · @eurunuela

16 followers · 24 posts · Server fediscience.org

I just presented our novel multi-subject paradigm free mapping algorithm at the ISMRM Current Issues in Brain Function workshop.

It was lovely to see the interest in this project, which aims to provide estimates of brain activity at the finest temporal and spatial resolution.

Stay tuned! The preprint is coming soon.

Anna Nicholson · @transponderings

170 followers · 713 posts · Server neurodifferent.me

5. The experimental conditions (inside an MRI scanner) were perhaps less than optimal for many of the participants, especially the Autistic group, I would imagine!

Participants were presented with a selection of different (supposedly) emotional stimuli in one of two conditions: with and without a red cross superimposed between the actor’s eyes

The experimental design was more sophisticated than that suggests, but the red cross was used to encourage fixation within the region of the actor’s eyes, i.e. to force eye contact

The experimenters found that for three of the subcortical regions associated with face processing (superior colliculus, and left and right amygdalae), there was no significant difference in activation between the Autistic and control groups in ‘free viewing’

When the cross was present (‘constrained viewing’) there was a significant difference with a large effect size for these three regions

The fourth region, the pulvinar, had significantly different activation between Autistic and control groups across the board, except in constrained viewing in the ‘angry’ case

Figure 1, showing these differences: https://www.nature.com/articles/s41598-017-03378-5/figures/1

#ActuallyAutistic #MRIScanner #MagneticResonanceImaging #fMRI #EyeFixation #FaceProcessing

Eneko Uruñuela · @eurunuela

16 followers · 23 posts · Server fediscience.org

Exciting news!

Our work is now visible in Aperture after a year of restructuring the journal 🙌

We show that Paradigm Free Mapping and Total Activation produce identical results, with differences attributed to user parameters.

Original thread: https://twitter.com/eurunuela/status/1420036314066341888

#neuroimaging #fmri #neuroscience

Neurofrontiers · @neurofrontiers

768 followers · 355 posts · Server qoto.org

I managed to write my first post for #blaugust, on the topic of fMRI neurofeedback.

Delving into the details of this topic, and particularly thinking about how this method could be used as a closed-loop form of feedback for testing predictions from network control theory, was a lot of fun. And hopefully it will be useful for people who wanna learn more about it.

https://neurofrontiers.blog/fmri-neurofeedback-whats-the-fuss/

#blaugust #blaugust2023 #neuroscience #scienceblogging #fmri

Jo Etzel · @JosetAEtzel

446 followers · 800 posts · Server fediscience.org

@dpat @effigies I'm traveling so just looking on the phone, and can't cross-check our #fMRIPrep versions yet. But a few thoughts anyway:

- are these CMRR multiband sequences? If so, have you checked the SBRef images as well as the fmaps?

- We're collecting an #fMRI dataset with 3mm iso voxels, CMRR MB4, and haven't had trouble

- are all the person's runs in a session distorted, or just some? If some, compare the fmaps, etc between good and bad runs.

Good luck!

Adam Wysokiński · @adam_wysokinski

92 followers · 355 posts · Server fediscience.org

I didn't know that one:

Neural correlates of interspecies perspective taking in the post-mortem Atlantic Salmon: an argument for multiple comparisons correction

Jo Etzel · @JosetAEtzel

446 followers · 800 posts · Server fediscience.org

Sometimes we smile at our data, sometimes it smiles at us.

Ben Waber · @bwaber

661 followers · 2464 posts · Server hci.social

Next was an intriguing talk by @MatthiasNau on MRI-based eye tracking at Western University. The methods presented here seem like a powerful new tool for researchers using #fMRI with gaze as a variable or even to correct for other issues (I would like to see data from many more, varied subjects before widespread adoption) https://www.youtube.com/watch?v=jQvCbIuk794 (6/8) #neuroscience

Jo Etzel · @JosetAEtzel

446 followers · 800 posts · Server fediscience.org

@stefanowitsch I noticed similar for #OHBM2023 (#fMRI): not a lot of discussion on here (though some!), but also not as much on twitter as past years. (I logged in to twitter for the first time this year just to check for conference info; the #OHBM organizers still posted there.)

A lot of science social media folks seem to be waiting, though I don't know for what.

Jo Etzel · @JosetAEtzel

418 followers · 714 posts · Server fediscience.org

@lakens Another fundamental of science is honesty and transparency. I think emphasizing (and requiring) that data and analysis code be shared as part of the publication is also a logical practice, and often easier to implement than, e.g., a full preregistration of an #fMRI study.

Jo Etzel · @JosetAEtzel

395 followers · 654 posts · Server fediscience.org

Goal (and necessity) for tomorrow: get ready for #OHBM2023!

Not many of us on mastodon, I think, and I'm definitely feeling out of the loop a bit. I'll look for the open science room mattermost; please share any other pointers. (I haven't logged in to twitter so far in 2023, but am wondering if I'll have to to get the #OHBM news and chatter.)

Going to OHBM and want to chat about #fMRI quality control, analysis, #rstats? Let me know! ☺️

Jo Etzel · @JosetAEtzel

395 followers · 654 posts · Server fediscience.org

NastyBigPointyTeeth!🌈♀ · @MsDropbear

215 followers · 1334 posts · Server kolektiva.social

>Lonely people see the world differently, according to their brains

Brain activity differs among people who feel out of touch with their peers.

>To investigate what goes on in the brains of lonely people, a team of researchers at the University of California, Los Angeles, conducted noninvasive brain scans on subjects and found something surprising. The scans revealed that non-lonely individuals were all found to have a similar way of processing the world around them. Lonely people not only interpret things differently from their non-lonely peers, but they even see them differently from each other.

>“Our results suggest that lonely people process the world idiosyncratically, which may contribute to the reduced sense of being understood that often accompanies loneliness,” the research team, led by psychologist Elisa Baek, said in a study recently published in Psychological Science.

>Baek wanted to see if there was something to an idea known as the “Anna Karenina principle.” Leo Tolstoy’s iconic novel Anna Karenina opens with the line, “Happy families are all alike; every unhappy family is unhappy in its own way.”

>In this context, Tolstoy turned out to be right. The fMRI scans showed that the reactions of non-lonely individuals to the videos they watched were extremely similar. Lonely individuals had brain activity that was not only significantly different from that of non-lonely individuals but was even more dissimilar from each other, meaning that each lonely person in this study perceived the world in a distinct way.

>Baek suggests that having a point of view different from others makes the lonely even lonelier, as they’re less likely to feel understood (though she does mention that it’s not clear whether this is a cause or effect of loneliness—or both).

>Anyone who is lonely can now be assured there is probably someone out there who feels just as isolated—just in a completely different way.

#Science #Loneliness #fMRI #Psychology

😞 😭

Jim Donegan ✅ · @jimdonegan

1515 followers · 4464 posts · Server mastodon.scot

#AlanLeshner - Can #Brain Explain #Mind?

https://www.youtube.com/watch?v=9Ns4QCbo-FI&ab_channel=CloserToTruth

#Philosophy #PhilosophyOfMind #Mind #Consciousness #Qualia #PhilosophyOfConsciousness #Neuroscience #NeuroImaging #MindBodyProblem #MRI #FMRI #CloserToTruth #RobertKuhn

Albert Cardona · @albertcardona

1840 followers · 3031 posts · Server mathstodon.xyz

@ginapoe Welcome! Note that here hashtags can be followed, for example #neuroscience, #fMRI or #connectomics. And since search is mostly limited to usernames or hashtags, it's customary to add hashtags to posts so as to make them discoverable.

Matthias Nau · @MatthiasNau

510 followers · 20 posts · Server fediscience.org

#DeepMReye has a new #streamlit app! 🎊 Camera-less #eyetracking in #fMRI has never been easier. https://github.com/DeepMReye/DeepMReye#option-4-streamlit-app

◦ Install & open

◦ Pick a pretrained model

◦ Upload fMRI data

◦ Download gaze coordinates

The app makes #DeepMReye even more accessible as no coding is required. If you use it, be aware though that the pretrained models are not perfect: Validate your results or train your own model using our notebooks!

https://github.com/DeepMReye/DeepMReye/blob/main/notebooks/deepmreye_example_usage.ipynb

Huge kudos to @cyhsm for creating the app!

Brian Knutson · @knutson_brain

710 followers · 1797 posts · Server sfba.social

Lovely video explainer about using #FMRI to visualize reward anticipation with the Monetary Incentive Delay Task (#MIDTask), courtesy @kingscollegelondon:

https://www.youtube.com/watch?v=z_n3QALr2ls

Jo Etzel · @JosetAEtzel

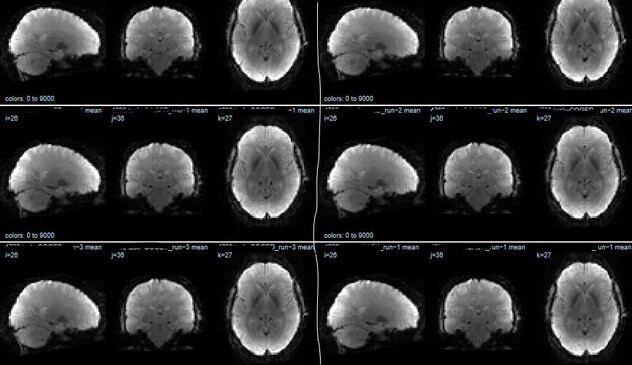

376 followers · 620 posts · Server fediscience.org

@dpat I should have included a link to an explanation of what I meant by "tiger stripe" pattern, sorry. 😅 Here is some, and https://mvpa.blogspot.com/2021/06/dmcc55b-supplemental-as-tutorial-basic_18.html has a bit more.

This image shows the idea: the first three rows have typical surface temporal mean images; blotchy, but with "tiger stripes" at the top (central sulcus) visible (arrows). The fourth image is from a failed realignment: the underlying surface shape is ok, but the pattern is all wrong.

#gifti #qc #neuroimaging #fmri

The vOICe vision · @seeingwithsound

379 followers · 1201 posts · Server mas.to

Ultra-high field #fMRI of visual #mental #imagery in typical imagers and aphantasic individuals https://www.biorxiv.org/content/10.1101/2023.06.14.544909v1 In aphantasic individuals, "the Fusiform Imagery Node was functionally disconnected from fronto-parietal areas"; #congenital #aphantasia #neuroscience

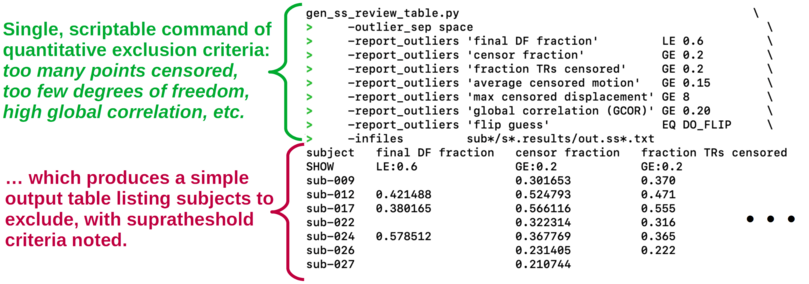

Paul Taylor | MRI Methods · @afni_pt

46 followers · 37 posts · Server fediscience.org

The afni_proc.py output also contains a summary of useful quantitative features. This can be put into a simple AFNI command to apply drop/exclusion criteria for subjects automatically. In this way, one can integrate both qualitative and quantitative QC efficiently.
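
The idea in the post above (per-subject quantitative summary in, automatic drop list out) can be illustrated with a rough Python sketch. This is not the AFNI command the post refers to. It assumes each subject's summary is plain text with `label : value` lines, and the field labels (`censor fraction`, `average motion`) and the cutoffs are hypothetical placeholders chosen only for illustration, not recommended thresholds.

```python
# Sketch only: field labels and cutoffs are hypothetical, not AFNI's
# actual summary keys or recommended exclusion thresholds.

# Hypothetical rule set: drop a subject if a value is >= its limit.
MAX_ALLOWED = {
    "censor fraction": 0.10,
    "average motion": 0.30,
}


def parse_summary(text):
    """Parse 'label : value' lines into {label: float}; skip non-numeric values."""
    fields = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        label, _, value = line.partition(":")
        parts = value.split()
        if not parts:
            continue
        try:
            fields[label.strip()] = float(parts[0])
        except ValueError:
            continue  # e.g. a subject ID or a file name
    return fields


def exclusion_reasons(fields, limits=MAX_ALLOWED):
    """Return human-readable reasons to drop a subject (empty list = keep)."""
    reasons = []
    for label, limit in limits.items():
        value = fields.get(label)
        if value is None:
            # A missing measure is flagged rather than silently passed.
            reasons.append(f"{label}: missing")
        elif value >= limit:
            reasons.append(f"{label}: {value} >= {limit}")
    return reasons


def screen_subjects(summaries):
    """Map subject ID -> list of exclusion reasons for a dict of summary texts."""
    return {sid: exclusion_reasons(parse_summary(text))
            for sid, text in summaries.items()}


if __name__ == "__main__":
    demo = {
        "sub-01": "subject ID : sub-01\ncensor fraction : 0.25\naverage motion : 0.05",
        "sub-02": "subject ID : sub-02\ncensor fraction : 0.02\naverage motion : 0.04",
    }
    for sid, reasons in screen_subjects(demo).items():
        print(sid, "DROP" if reasons else "keep", reasons)
```

Returning the reasons alongside the decision keeps the quantitative screen reviewable, so a flagged subject can still be checked against the qualitative QC images before being excluded.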