PJ Coffey · @Homebrewandhacking

1083 followers · 9183 posts · Server mastodon.ie

Oof... there's a lot going on there. Having to release your paper because you're worried about minorities being denied jobs by poorly and obscurely implemented black boxes is never a fun time.

#LLM #AI #AIResearch #Preprint #GPT2 #BERT #GPT2Small #Algorithm #OpenAI #ResearchPaper #Science #Racism #EmploymentLaw

#llm #ai #AiResearch #preprint #gpt2 #bert #gpt2small #algorithm #openai #ResearchPaper #science #racism #employmentlaw

PLOS Biology · @PLOSBiology

4795 followers · 1219 posts · Server fediscience.org

Brain vs #LLMs? Hierarchical predictions integrating nonlinguistic & linguistic knowledge provide a more comprehensive account of behavioral & neurophys response to #speech, compared to next-word predictions by #GPT2 @YaqingSu @labgiraud #AI #PLOSBiology https://plos.io/409yGSS

#plosbiology #ai #gpt2 #speech #LLMs

michabbb · @michabbb

14 followers · 312 posts · Server social.vivaldi.net

Run 🤗 Transformers in your browser! - https://github.com/xenova/transformers.js

We currently support #BERT, #ALBERT, #DistilBERT, #T5, #T5v1.1, #FLANT5, #GPT2, #BART, #CodeGen, #Whisper, #CLIP, #Vision Transformer, and VisionEncoderDecoder models, for a variety of tasks....

#bert #albert #distilbert #t5 #t5v1 #flant5 #gpt2 #bart #codegen #whisper #clip #vision #webml

MOULE :MOULE_Logo: · @MOULE

362 followers · 525 posts · Server mastodon.moule.worldMOULE :MOULE_Logo: · @MOULE

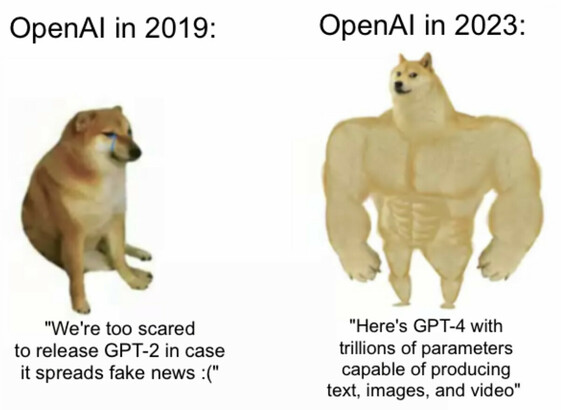

362 followers · 522 posts · Server mastodon.moule.worldWhile waiting for #OpenAI to release #GPT4, let’s not forget that only 4 years ago they were scared to release #GPT2 because of “fake news” — how the turns have tabled! 😂 #AI #ArtificialIntelligence #GPT https:e//betanews.com/2019/02/15/openai-gpt2-fake-news-generator/

#openai #gpt4 #gpt2 #ai #artificialintelligence #gpt

Tomasz Nurkiewicz 🇺🇦 · @nurkiewicz

679 followers · 156 posts · Server fosstodon.org

If you think #ChatGPT based on #GPT3 is so powerful, think about its predecessor, #GPT2, which was "too dangerous to release" back in 2019... https://www.independent.co.uk/tech/ai-artificial-intelligence-dangerous-text-gpt2-elon-musk-a9192121.html

Fun fact: the article's URL contains `elon-musk`. No mention of Musk in the article whatsoever...

Tom "Paradox" · @Pomero

6 followers · 9 posts · Server mastodon.content.town

Is 500 MB of raw Twitch chat (~4 million messages) enough to fine-tune a language model? Maybe! I have no idea what I'm doing. #huggingface #gpt2 #AI

Silot · @tolis

63 followers · 136 posts · Server mastodon.gamedev.place

Generate Mario levels with a trained GPT-2 model: https://github.com/shyamsn97/mario-gpt You could use generative AI to create levels for your game! Of course, you will have to play them and tweak them... #generativeai #ai #mario #gpt2

#gpt2 #mario #ai #generativeAI

Pedro J. Hdez · @ecosdelfuturo

808 followers · 259 posts · Server mstdn.social

More similarities between #GPT models and the brain.

Remember all those neurological studies on the activation of particular neurons during specific tasks in the brain? Well, the authors of this write-up claim to have found a neuron that is especially relevant to how #GPT2 distinguishes between the use of "a" and "an".

Universo Nintendo · @gustavo_rebollar_angel

13 followers · 76 posts · Server tkz.one

MarioGPT draws on the artificial intelligence technology (#InteligenciaArtificial) of #GPT2 to create #SuperMarioBros levels :3. https://bit.ly/3I0iUSq

#inteligenciaartificial #gpt2 #supermariobros

MOULE · @MOULE

359 followers · 846 posts · Server mas.to

Remember back in 2019 when #OpenAI was too scared to release #GPT-2 because they said it was "too dangerous"? 😂 #AI #ArtificialIntelligence #ChatGPT #GPT2 #GPT3 #GPT4 https://futurism.com/openai-dangerous-text-generator

#GPT4 #gpt3 #gpt2 #chatgpt #artificialintelligence #ai #gpt #openai

Jenny Lepies · @Kultanaamio

242 followers · 125 posts · Server mstdn.social

Microsoft recently invested an incredible 10 billion dollars in OpenAI, making it one of the most valuable start-ups in the world. Yet #OpenAI originally started out as a non-profit organization.

@karenhao was allowed to visit the lab in 2020. (Text behind paywall)

#Microsoft #ChatGPT #GPT3 #GPT2

https://www.heise.de/hintergrund/KI-OpenAI-beendet-seine-Offenheit-4697816.html

#gpt2 #gpt3 #chatgpt #Microsoft #openai

Weiterbildung Digital · @martinlindner_wb

393 followers · 233 posts · Server colearn.social

RT @PY_PlainEnglish@twitter.com

I Fine-Tuned GPT-2 on 100K Scientific Papers. Here’s The Result: https://python.plainenglish.io/i-fine-tuned-gpt-2-on-100k-scientific-papers-heres-the-result-903f0784fe65 #TextGeneration #Gpt2 #FineTuning #Python #LanguageModeling

🐦🔗: https://twitter.com/PY_PlainEnglish/status/1594242920680280066

#textgeneration #gpt2 #finetuning #python #languagemodeling

Ondřej Cífka · @cifkao

23 followers · 4 posts · Server sigmoid.social

The technique works with any causal LM, as long as it was trained to accept arbitrary text fragments (not necessarily starting at a sentence or document boundary), which happens to be how large #GPT-like models (#GPT2, #GPT3, #GPTJ, ...) are usually trained.

The main trick is in realizing that the necessary probabilities can be computed efficiently by running the model along a sliding window. 🧵3/4
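
For readers who want to see the sliding-window idea concretely, here is a minimal sketch. It is not the thread author's implementation: `toy_causal_lm` is a hypothetical stand-in for a real causal LM (it just returns add-one-smoothed token counts of the prefix), and the function and variable names are assumptions made for illustration. The point it shows is that one pass over a window yields a prediction for every prefix of that window, so sliding the window over the text gives each target token a log-probability for every context length up to the window size.

```python
import math
from collections import Counter

VOCAB = ["a", "b", "c"]  # toy vocabulary (assumption for this sketch)


def toy_causal_lm(window):
    """Hypothetical stand-in for a causal LM forward pass.

    Returns one next-token distribution per position j, conditioned only
    on window[:j + 1] (add-one-smoothed counts of the prefix).
    """
    dists = []
    counts = Counter()
    for j, tok in enumerate(window):
        counts[tok] += 1
        total = (j + 1) + len(VOCAB)
        dists.append({v: (counts[v] + 1) / total for v in VOCAB})
    return dists


def logprobs_by_context_length(tokens, window_size):
    """Map (target_index, context_length) -> log P(target | last c tokens)."""
    table = {}
    for start in range(len(tokens) - 1):
        window = tokens[start:start + window_size]
        dists = toy_causal_lm(window)  # one "forward pass" per window
        for j, dist in enumerate(dists):
            target = start + j + 1  # position j predicts the token after it
            if target < len(tokens):
                table[(target, j + 1)] = math.log(dist[tokens[target]])
    return table


table = logprobs_by_context_length(["a", "b", "a", "a"], window_size=2)
for (target, ctx_len), lp in sorted(table.items()):
    print(f"target={target} context_length={ctx_len} logprob={lp:.3f}")
```

With a real model, the per-window call would be a single batched forward pass, which is where the efficiency would come from; the bookkeeping of target index versus context length stays the same.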

Vlic · @vlic

56 followers · 131 posts · Server wien.rocks

Koustuv Sinha · @koustuvs

158 followers · 35 posts · Server sigmoid.social

Happy to share our new paper “Language model acceptability judgements are not always robust to context” (https://arxiv.org/abs/2212.08979)! We prepend several kinds of context to minimal linguistic #acceptability test pairs and find #LMs (#OPT, #GPT2) can still achieve strong performance on BLiMP & SyntaxGym, except in some interesting cases. 🧵 [1/7]

#acceptability #lms #opt #gpt2

Jason Tucker · @jasontucker

357 followers · 400 posts · Server simian.rodeo