Cory Doctorow's linkblog · @pluralistic

44870 followers · 43009 posts · Server mamot.fr

Even trace amounts of bias in the original training data get refined and magnified when they are output through a decision support system that directs humans to go and act on that output. Algorithms are to bias what centrifuges are to radioactive ore: a way to turn minute amounts of bias into pluripotent, indestructible toxic waste.

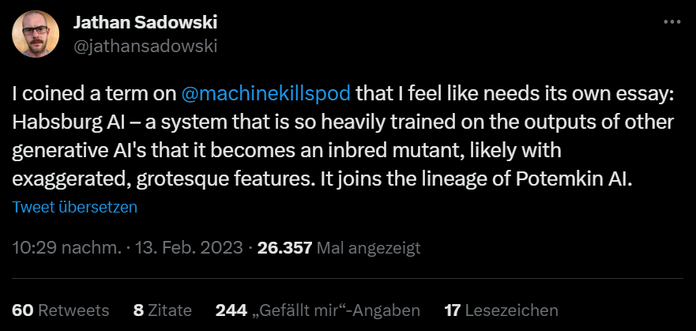

There's a great name for an AI that's trained on an AI's output, courtesy of #JathanSadowski: #HabsburgAI.

18/

Christoph Derndorfer-Medosch · @random_musings

123 followers · 215 posts · Server social.tchncs.de

Not sure how I managed to miss it until today, but the term #HabsburgAI is absolutely brilliant:

"Habsburg AI – a system that is so heavily trained on the outputs of other generative AI's that it becomes an inbred mutant, likely with exaggerated, grotesque features."

And I'm not just saying this because I live in what used to be the capital of the Habsburg empire. 😜

(source over on Twitter: https://twitter.com/jathansadowski/status/1625245803211272194) #ChatGPT #AI #hype


Cory Doctorow's linkblog · @pluralistic

41584 followers · 41664 posts · Server mamot.fr

The internet is increasingly full of garbage, much of it written by other confident habitual liar chatbots, which are extruding plausible sentences at vast scale. Future confident habitual liar chatbots will be trained on the output of these confident liar chatbots, producing #JathanSadowski's #HabsburgAI:

https://twitter.com/jathansadowski/status/1625245803211272194

But the declining quality of Google Search isn't merely a function of chatbot overload. For many years, Google's local business listings have been *terrible*.

2/