Roland Meyer · @bildoperationen

909 followers · 290 posts · Server tldr.nettime.org

In a recent talk, @nilspooker very convincingly compared the way visual patterns are reproduced and amplified in AI #ImageGeneration to the practice of art forgery: forgers like van Meegeren or Beltracchi were highly successful at not only matching but even overfulfilling the stylistic expectations attached to an artist's name. What I like so much about this comparison is that it allows us to think about the media specificity of fakery. While paintings have been forged ever since there was an art market, photographs tend to be manipulated rather than forged, and what is considered inauthentic fundamentally differs between painting and photography.

Forging a painting is about imitating a style and falsely attributing a unique artifact to an individual author who did not create it. Manipulating a photograph, however, is usually not about authorship, but about the relationship between a reproducible image and the reality it's meant to depict. Faking photographs, therefore, traditionally meant somehow interfering with the photographic process, be it by staging events that never happened, retouching the photographic surface, or combining elements from different photographs into one image.

AI image synthesis may seem to resemble the latter, but it's actually more like forging a painting: there's no actual photo to begin with, only a statistical process aimed at fulfilling the visual expectations associated with a particular label – whether that label is »Vermeer« or »documentary photo«. But the history of art forgery also shows us, as Nils has pointed out, that these expectations are historically conditioned: What looked like a Vermeer in the 1930s now appears to be a rather crude forgery – and what looks like a photo today might indeed reveal to us the limits of our visual expectations of what a convincing photograph looks like.

#imagegeneration #platformrealism

Roland Meyer · @bildoperationen

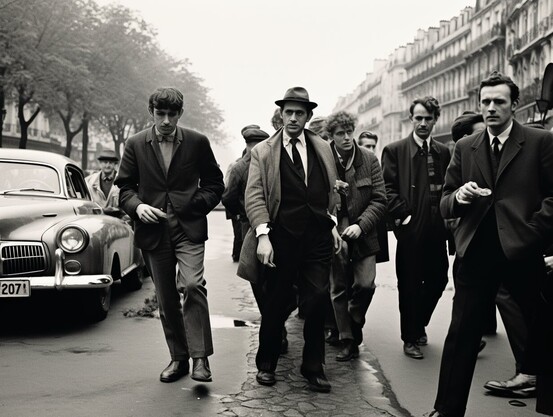

894 followers · 279 posts · Server tldr.nettime.org

For AI #ImageGeneration tools like #Midjourney, historical dates usually do not indicate singular events, but rather, to quote Fredric Jameson, point to some generic »pastness«: visual styles and atmospheres replace any specific historical past. In #PlatformRealism, history dissolves into a chronological series of aesthetic »vibes« distilled from both historical footage and pop cultural imagery. Ask #Midjourney for a »documentary photo« from May 68 in Paris and you get a scene straight out of a Nouvelle Vague film, while an image from January 6, 2021 in Washington DC looks like an establishing shot from a generic Netflix thriller.

In #PlatformRealism, historical reality and cultural fiction are largely indistinguishable, and the typical tendency toward the dreamlike and fantastic can appear in the most unexpected places, as in Midjourney's version of a »documentary photo« of November 9, 1989 in Berlin. There is, however, at least one historical date that Midjourney refuses to »imagine«: September 11, 2001. When it comes to corporate censorship, some historical dates still seem to point to singular events and a traumatic reality beyond the filters of #PlatformRealism.

Roland Meyer · @bildoperationen

894 followers · 274 posts · Server tldr.nettime.org

Just arrived: My copy of David Fathi's amazing book-length visual essay »The Machine Seems To Need a Ghost« (L'Artiere 2023), feat. a short text by me on »Latent Nostalgia«. Very thankful for the invitation to contribute to this wonderful project!

#ImageGeneration

https://www.lartiere.com/en/prodotto/the-machine-seems-to-need-a-ghost-david-fathi/

Roland Meyer · @bildoperationen

894 followers · 272 posts · Server tldr.nettime.org

»The ideology of attention seeking and sales pitches are built into how the images are produced, interpreted, and characterized. ... In that way, they are always advertisements for themselves.« Rob Horning with some important thoughts on #ImageGeneration & #PlatformRealism https://robhorning.substack.com/p/the-preponderance-of-the-object

Roland Meyer · @bildoperationen

884 followers · 270 posts · Server tldr.nettime.org

If the default #PlatformRealism of #imagegeneration tools can essentially be described as a second-order aesthetic of generic images, it's particularly revealing what #Midjourney does when asked to generate the image of something specific, say a famous building. You’ve probably recognized the buildings depicted here, beginning with New York's Guggenheim Museum. However, they are anything but accurate representations. Rather, not unlike caricatures, they are faithful only in the most recognizable features, while all details are treated quite generously. What they depict are less specific buildings than their reproducible clichés. However, this transformation took place long before AI: The more images of a famous landmark circulate, the more it has already become a generic icon.

While these buildings themselves have become iconic, their surroundings have not – and #Midjourney consequently replaces them with buildings and sceneries vaguely based on a certain generic idea of what New York, Rome or Venice should look like. Moreover, individual phases in the history of a famous landmark seem to be too specific to be reproducible – even if these phases, like the time when the Berlin Brandenburg Gate was located behind the Wall, lasted for decades and were extensively documented photographically. What these images thus show is how even the concrete and historically specific, like a famous building, can become generic and timeless, a more or less probable variant of a recognizable, repeatable, and recombinable pattern. Reality enters #PlatformRealism only insofar as it has already become iconic.

Roland Meyer · @bildoperationen

878 followers · 266 posts · Server tldr.nettime.org

With each update, #ImageGeneration models promise us more »realistic« representations – but the »reality« these images represent has little to do with the one we live in. Rather, they are best described as #PlatformRealism.

In the age of networked online content, hardly any online text seems complete without at least one accompanying image. Many content management systems expect you to provide images for every post, even if they provide no additional information. These images are not mere illustrations, but attractors designed to make content more visible, shareable, and likable.

Other examples of generic images are stock photos or »key visuals« intended to convey the »identity« of a brand or product. Such images are typically »realistic« in that they are rendered in a style that conforms to our expectations of photographic »realism«. But instead of depicting concrete real-life situations, stock photos and key visuals stage abstract concepts such as competence, tradition, or whatever the client wants, transforming the realistic depiction of bodies and spaces into a stage for the symbolic manifestation of ideas.

Interestingly, this is also what »Socialist Realism« was about, according to Boris Groys – rather than reflecting the reality of real existing socialism, its mission was to convey »transcendental« messages by »incarnating« them in what only superficially looked like real people. It may be no coincidence that many of the images produced by Midjourney in its default mode have a striking affinity with the aesthetics of »Socialist Realism« – after all, they too are not depictions of real-world events, but »incarnations« of abstract concepts, as formulated in prompts.

What distinguishes #PlatformRealism from both earlier capitalist and socialist versions of generic »realisms«, however, is its statistical character. It's a second-order aesthetic fueled by the automated extraction of patterns from largely redundant combinations of generic images and concepts.

Roland Meyer · @bildoperationen

857 followers · 255 posts · Server tldr.nettime.org

Recently, #Midjourney introduced a new parameter called »weird«, which aims to make results more »unexpected«. This is notable for a number of reasons, not least because it highlights what the company considers »expected« and thus »normal«: images like the first of these, for example, which shows an all-white 1950s nuclear family »enjoying a picnic«. This, according to Midjourney, represents the zero degree of »weirdness.«

Pump up the algorithmically generated »weirdness« to 200, and some fun stuff starts to happen, including what appears to be a bit of cross-dressing, but we're still mostly in a white middle-class dream world, just a little quirkier.

Increased »weirdness« doesn't seem to affect MJ's ideological baseline so much as it allows for less conventional compositions. You can see this in the third image of the series - at a »weirdness« level of 500, an oblique (so-called »Dutch«) angle appears, a compositional technique popular in early modernist cinema and photography, but quite unusual for AI-generated imagery. Also, heteronormativity seems to be temporarily suspended.

In the final image we reach the maximum weirdness (3000) that Midjourney now seems to be able to »imagine« under standard conditions. I have to admit that I'm disappointed. The composition is a bit more unusual than expected, the family has grown into a small crowd, unidentifiable things are falling from the trees, and the faces are distorted in typical AI-Francis-Bacon fashion, but overall nothing here comes close to the surreal strangeness that was regularly produced by previous versions of the software. Even the artificially enhanced »weirdness« seems to have been sanitized. In the end, what the company considers »expected« is far more disturbing than what its new »weird« parameter produces ...

Prompts were: »photograph of a family enjoying a picnic --ar 4:3 --weird [0-3000] --seed 12345«
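
The prompt series can be spelled out explicitly. A minimal sketch in Python, assuming the four »weirdness« levels discussed in the post (0, 200, 500, 3000) are the ones that were used; `build_prompts` is a hypothetical helper that only assembles the prompt strings and does not talk to Midjourney:

```python
# Hypothetical helper: it only builds prompt text and calls no Midjourney API.
BASE_PROMPT = "photograph of a family enjoying a picnic"
WEIRD_LEVELS = [0, 200, 500, 3000]  # assumed: the four levels described in the post


def build_prompts(base, levels, aspect="4:3", seed=12345):
    """Return one prompt string per weirdness level, keeping ratio and seed fixed."""
    return [
        f"{base} --ar {aspect} --weird {level} --seed {seed}"
        for level in levels
    ]


if __name__ == "__main__":
    for prompt in build_prompts(BASE_PROMPT, WEIRD_LEVELS):
        print(prompt)
```

Holding the seed and aspect ratio constant, as the original prompt does, leaves the »weird« value as the only thing that changes from one image to the next.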

TechInsiderBuzz · @TechInsiderBuzz

3 followers · 59 posts · Server me.dm

Wepik AI: Presentations, Images, and AI Writing Made Effortless

https://techinsiderbuzz.com/blog/wepik-ai-presentations-images-and-ai-writing-made-effortless/

Effortlessly create visuals, presentations, and content with Wepik AI tools. Transform ideas into stunning reality!

#artificialintelligence #artificial_intelligence #artificialintelligencetechnology #ai #wepik #Writing #image #imagegeneration #presentation #tech #technology #Medium #MeDm

PIXEL REFRESH News 🕹️📱 💻 · @pixelrefresh

63 followers · 54 posts · Server mstdn.social

How Does the Magic of AI Generation Work?

Sam takes us on a journey of how A.I. can create an image using its collection of pictures and artwork and construct something we perceive as unique.

https://www.pixelrefresh.com/how-does-the-magic-of-ai-generation-work/

#imagegeneration #artificialintelligence #AI

White House Press Office · @press

58 followers · 254 posts · Server whitehouse.org

#ImageGeneration has just gotten a whole lot easier with the launch of Meta's CM3leon! This model uses 5x less compute and requires a smaller training dataset than previous models! #AI #Revolutionary #Transformers #Meta #CM3leon http://www.techmeme.com/230714/p8#a230714p8

BoydstonLaw · @BoydstonLaw

363 followers · 195 posts · Server mstdn.social

Five Reasons Why You Should be Monitoring These Four Artificial Intelligence Cases

The GitHub case looks to be settling - the other three are steaming right along.

I'll link the three cases in the comments.

#Law #LawFedi #AI #LegalTech #Copyright #MachineLearning #Litigation #ImageGeneration #CodeGeneration #ThompsonReuters

Roland Meyer · @bildoperationen

828 followers · 228 posts · Server tldr.nettime.org

AI image synthesis tools are redundancy processing machines: they feed on repeating patterns in their training data, digest them, compress them, and spit them out again, sometimes in strangely distorted ways. This is particularly evident in Midjourney's new "Zoom Out" feature.

Take the first of these images: this is how Midjourney imagines »a family enjoying a barbecue«: an all-white, middle-class, suburban American dream world, as if painted by Norman Rockwell – though he would never have depicted the whole family around the burning grill. Despite such weird details, this is already a picture of redundancies: There is no new information here, just the repetition of worn-out patterns or clichés.

But once you »zoom out« of this scene, some interesting things happen. Instead of providing more context to the scene, Midjourney just keeps adding more of the same elements: more people in casual clothes, more generic landscape, more clouds, more barbecue grills – the image becomes both more crowded and more redundant with each step. Meanwhile, the mood changes drastically, from a bright summer afternoon to a gloomy night scene. This seems to be due to the subtle vignetting effect of the first image – to maintain visual consistency, the slightly darker edges of the first image gradually lead to an overall darkness.

As you continue to zoom out, the images get weirder with each step: the scales become more confusing, the use of light more paradoxical. As Midjourney tries to maintain visual consistency while adding more redundancy, it heads straight for the uncanny valley. And this seems to me to be one of the most fascinating aesthetic aspects of AI image synthesis tools: they show how the drive for consistency, taken to extremes, produces paradoxes, how redundancies collapse in on themselves, and how clichés turn into nightmares.

(Note: I only show four of the original seven steps)

ErosBlog Bacchus · @ErosBlog

364 followers · 1011 posts · Server kinkyelephant.com

Earlier today I noticed @LordCaramac making #GenerativeArt on here with #GenerativeAI #bot @stablehorde_generator. It interfaces with @stablehorde, a #crowdsourced distributed cluster for #ImageGeneration. Of course I instantly poked it to see if it allows generation of so-called #NSFW imagery (#adult themes, #naked #nudes, #porn). And it does! I deleted my tests here (generative #AI is controversial and some may not want to see it) but of course I blogged all the deets: http://www.erosblog.com/2023/07/11/erotic-generative-art-via-ai-horde/

Charnita Fance · @chachafance

1 followers · 55 posts · Server techhub.social

Learn about the best settings for Midjourney to enhance your AI-generated images. Adjust the model version, Niji mode for Anime content, stylize level, and variation mode to get the desired results. Experiment and optimize for better outcomes. #Midjourney #AI #imagegeneration

CryptoNewsBot · @cryptonewsbot

592 followers · 31262 posts · Server schleuss.online

What is DALL-E, and how does it work? - Discover the process of text-to-image synthesis using DALL-E’s au... - https://cointelegraph.com/news/what-is-dall-e-and-how-does-it-work #text-to-imagesynthesis #imagegeneration #generativeai #deeplearning #autoencoder #dall-e

Anker Kafory · @anker

19 followers · 145 posts · Server me.dm

🎉 Stability AI introduces SDXL 0.9, a major leap in their text-to-image models! 🚀 This release revolutionizes generative AI imagery for films, TV, music, design, and industry. 🌟 With its massive parameter count and improved composition, SDXL 0.9 generates hyper-realistic images at a resolution of 1024x1024. 😲 Explore image-to-image prompting, inpainting, and outpainting functionalities too! #AI #ImageGeneration #CreativePossibilities 🌐 https://go.digitalengineer.io/ED

Harald Klinke · @HxxxKxxx

1131 followers · 453 posts · Server det.social

This is surely the latest from the best authors in the field of #AI #ImageGeneration

Generative Imagery: Towards a ‘New Paradigm’ of Machine Learning-Based Image Production

#ai #imagegeneration #digitalhumanities #generativeart #ki

Roland Meyer · @bildoperationen

794 followers · 178 posts · Server tldr.nettime.org

Out now: My essay »The New Value of the Archive. AI Image Generation and the Visual Economy of ›Style‹«, part of a special issue of IMAGE on »Generative Imagery«, edited by Lukas R.A. Wilde, Marcel Lemmes and Klaus Sachs-Hombach, also featuring great contributions by @hannesbajohr, @CyberneticForests and many others

#imagegeneration #imagearchives

Miro Collas · @Miro_Collas

358 followers · 9525 posts · Server masto.ai

Playing with #StableDiffusion #AI #ImageGeneration

j2i.net · @j2inet

27 followers · 243 posts · Server masto.ai

A photographer asked that his photos be removed from a dataset used by Stable Diffusion. In response, he received a letter from a lawyer demanding he pay 887 Euros for legal fees, and was told that the dataset only contains links to images and not the images themselves.

"Only the links to images" sounds dishonest and illusive to me. The actual images are consumed in the training process.

#ai #imagegeneration #art #petapixel