Published papers at TMLR · @tmlrpub@sigmoid.social

Machine Explanations and Human Understanding

Chacha Chen, Shi Feng, Amit Sharma, Chenhao Tan

Action editor: Stefan Lee.

#intuitions #explanations #Intuition

Nick Byrd · @ByrdNick

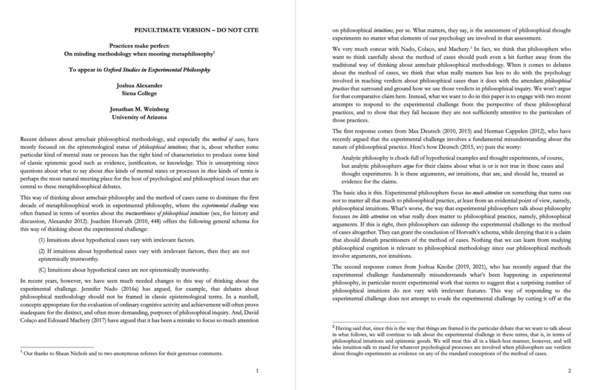

748 followers · 226 posts · Server nerdculture.deThere's a #debate in #ExperimentalPhilosophy (#xPhi) about whether #intuitions about philosophical thought experiments are "stable" (vs. manipulable).

Some (such as @xphilosophy) seem convinced of stability, e.g., because some replications of instability results, as well as some new experiments, find #pValues > 0.05.

Alexander & @jonweinberg express a different view (https://philpapers.org/rec/ALEPMP). I agree that the parties to the debate need to settle on a smallest effect size of interest.

Page images attached.

@peterrenshaw · @peterrenshaw@ioc.exchange

“Employees at Tapestry, a portfolio of luxury brands, were given access to a #forecasting model that told them how to allocate stock to stores. Some used a #model whose #logic could be interpreted; others used a model that was more of a #blackbox. Workers turned out to be likelier to overrule models they could understand because they were, mistakenly, sure of their own #intuitions. #Workers were willing to accept the #decisions of a model they could not fathom, however, because of their #confidence in the #expertise of people who had built it. The #credentials of those behind an AI matter.”

Credentials & expertise of developers.

#Bartleby / #economist <https://economist.com/business/2023/02/02/the-relationship-between-ai-and-humans>

New Submissions to TMLR · @tmlrsub@sigmoid.social

Machine Explanations and Human Understanding

#intuitions #explanations #Intuition