PyCon CZ :python: 🇨🇿 · @pyconcz

27 followers · 11 posts · Server floss.social

Discover all about "Low-Rank Adaptation (LoRA) in Large Language Models" with Adam Zíka at #PyConCZ23! 📚🔍 Don't miss this enlightening session. Learn more: https://cz.pycon.org/2023/program/talks/91/ 🚀 #LanguageModels

Abishek Muthian · @abishek_muthian

160 followers · 780 posts · Server fosstodon.org

This is an excellent piece by Karawynn Long on the state of Artificial Intelligence (or lack thereof) and how people mistake language for intelligence, something disabled people have long known.

https://karawynn.substack.com/p/language-is-a-poor-heuristic-for

#artificialintelligence #chatgpt #languagemodels #disability #autism

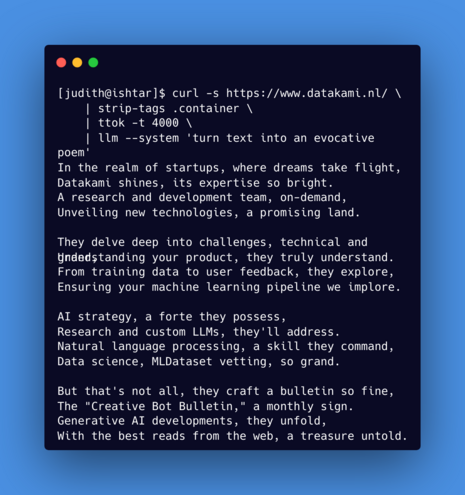

Judith van Stegeren · @jd7h

41 followers · 51 posts · Server fosstodon.org

I'm taking some time today to test a few new libraries/tools.

These CLI tools for working with LLMs by @simon work like a charm! And they support Unix pipes. <3

More info here: https://llm.datasette.io/en/latest/index.html

#llms #gpt #languageModels #cli #terminal #commandline

Judith van Stegeren · @jd7h

36 followers · 42 posts · Server fosstodon.org

Datakami blog post by Yorick van Pelt: "Overview of open and commercially usable LLMs" 🧐

New models are coming out every day. Here's an overview that can aid in selecting one for commercial use.

Read it here: https://datakami.nl/blog/2023-07-11-overview-of-commercially-usable-LLMs

#llms #textgeneration #languagemodels #genai #llama #generativeai #datasets

Judith van Stegeren · @jd7h

33 followers · 38 posts · Server fosstodon.org

Dutch linguist Mark Dingemanse and co-authors compared LLMs on openness, transparency, and accountability.

Interview in Dutch national newspaper: https://www.volkskrant.nl/nieuws-achtergrond/verantwoord-een-chatbot-gebruiken-chatgpt-is-de-slechtste-keuze~b734f31e/?appview

Research paper: https://arxiv.org/abs/2307.05532

#nlg #textgeneration #llms #chatgpt #openai #transparency #AI #NLP #languagemodels #research #arxiv

Mihai Lazarescu · @mtl

1 follower · 136 posts · Server techhub.social

Stanford researchers show that what language models need to understand text better is not more context but better training methods. #AI #NLP #Stanford #LanguageModels #Context #Training

https://venturebeat.com/ai/stanford-study-challenges-assumptions-about-language-models-larger-context-doesnt-mean-better-understanding/

Michał "rysiek" Woźniak · 🇺🇦 · @rysiek

12371 followers · 18920 posts · Server mstdn.social

Dear #AI #Fediverse, there's been some buzz recently about #LanguageModels that are not gigantic black boxes, and #MachineLearning in general, developed as #FLOSS.

There's this Google internal document, for example, that points out that the FLOSS community is close to eating Google's and OpenAI's cake:

https://www.semianalysis.com/p/google-we-have-no-moat-and-neither

So here is my question to you:

What are the best examples of *useful*, *small*, *on-device* models already out there?

:boost_requested:

#FLOSS #MachineLearning #languageModels #Fediverse #AI

Ray Aldred · @ray_aldred

345 followers · 42 posts · Server fosstodon.org

Some intriguing ideas for utilizing interfaces that leverage the unique capabilities of language models.

Some of these are great for doing research.

Ray Aldred · @ray_aldred

345 followers · 39 posts · Server fosstodon.org

MIT researchers develop scalable self-learning language models that outperform larger counterparts using a natural language-based logical inference dataset.

#AI #LanguageModels #MITResearch

https://news.mit.edu/2023/language-models-scalable-self-learners-0608

Alex Strick van Linschoten · @strickvl

240 followers · 122 posts · Server mathstodon.xyz

🔠 I wrote up some of what I've learned about tokenisation (with examples using Balochi). This is more of a high-level overview that tackles why we tokenise words, what options are available to us and what tradeoffs we assume by choosing one option over another.

https://mlops.systems/posts/2023-06-01-why-tokenisation.html #balochi #languagemodels #nlp

Mehwish Alam · @mehwishalam

32 followers · 8 posts · Server mstdn.social

RT @dl4kg1: The CFP of the International Conference on Deep Learning for Knowledge Graphs co-located with @iswc_conf is now out!! Visit our website for more details: alammehwish.github.io/dl4kg2023/

#iswc2023 #machinelearning #deeplearning #knowledgegraphs #languagemodels

AlexCrimi · @AlexCrimi

190 followers · 84 posts · Server mstdn.social

#languagemodels can also be used to decode #brain activity: combined with #fMRI, language models (fine-tuned #GPT) decode perceived and imagined speech based on semantic meaning.

Paper: https://www.nature.com/articles/s41593-023-01304-9 preprint: https://www.biorxiv.org/content/10.1101/2022.09.29.509744v1 #neuroscience #machinelearning #llm #AI

Sean D. Mack · @smack

50 followers · 78 posts · Server techhub.social

I've spent some time over the past couple of weeks comparing the responses of Bard and ChatGPT. Both are pretty impressive but, so far, ChatGPT's answers have been significantly better.

#AI #Bard #ChatGPT #GoogleAI #LaMDA #OpenAI #GPT-3 #LanguageModels #ConversationalAI #Chatbots #ArtificialIntelligence

Jon Awbrey · @Inquiry

213 followers · 1460 posts · Server mathstodon.xyz

Re: Scott Aaronson • If AI Scaling Is To Be Shut Down, Let It Be For A Coherent Reason

• https://scottaaronson.blog/?p=7174

My Comment —

• https://scottaaronson.blog/?p=7174#comment-1948292

My personal Critique of Coherent Reasons — I won’t bother addressing a moratorium or shutdown since any pretense of doing that would amount to PR as fake as any other Great Reset we might hear about these days — but why I’d wish for caution and public reflection going forward is the Public Interest in things like Critical Thinking, Information Literacy, not to mention a Healthy Skepticism about Corporate Agendas.

FB just called to mind a thing I wrote a while ago that pretty well speaks to the heart of the issue for me, and I can’t do better than preamble with that —

Democracy, Education, Information

Our Enlightenment Forerunners had the insight to see the critical flaw in all historical failures at democratic government, to wit, or not — If The People Rule, Then The People Must Be Wise. The consequence is that equally distributed Education and Information are not just Commodities you buy so you and yours can get ahead of them and theirs — they are Essential to the intelligent functioning of government and the Public Interest. That is why we are supposed to have Universal Free Public Education. That is why we used to have a government operated postal service that enabled the free-flow of information at a nominal fee, not whatever price the market would bear.

#languageModels #scottaaronson

Bornach · @bornach

408 followers · 1496 posts · Server fosstodon.org

@f4grx @wim_v12e

Unfortunately it's a trade secret how much computing power they used to train it. So much for open AI

https://youtu.be/2AdkSYWB6LY?t=1m50s

"they're just not going to tell us the model size, the parameter count, the hardware they used, the training method, or anything like that"

#GPT4 #OpenAI #TechnicalReport #AI #LargeLanguageModels #LanguageModels #ArtificialIntelligence #machineLearning

Boris Steipe · @boris_steipe

66 followers · 285 posts · Server qoto.org

I just commented the following on your blog where you discuss levels of linguistic competence:

This is quite strange, since it is so evidently not true. All of these behaviours are well within the capabilities of ChatGPT. I give you two examples that are claimed as failures.

-----------

Prompt:

The mother of Michael has four children. Three of them are Marcy, Millie, and Moses. Please tell me the name of the fourth child.

ChatGPT:

The name of the fourth child is likely "Michael," as the statement at the beginning of the question says "the mother of Michael has four children," and then goes on to name three of them.

Prompt:

Please complete the following sentence reasonably: "If tigers would like cereals, they would be cheaper to keep. Families could feed their tigers with "

ChatGPT:

If tigers would like cereals, they would be cheaper to keep. Families could feed their tigers with a variety of inexpensive grain-based products such as corn, wheat, and rice, rather than having to provide costly meat-based diets. However, it is important to note that tigers are obligate carnivores and have specific nutritional needs that cannot be met by a purely plant-based diet, so feeding them only cereals would not be a healthy or appropriate long-term solution.

------------

So I am genuinely curious what drives such profoundly different experiences.

The proposed tests and competences are interesting; I would hope that they would be put to better use to map out the quite unexpected emergent abilities of the #LLM, to put them to good use, and to prepare ourselves (and our institutions) for the even more capable descendants of #ChatGPT.

I invite you to head over to https://sentientsyllabus.substack.com for some in depth analysis.

🙂

#llm #chatgpt #LinguisticCompetence #languageModels #generativeAI

mapto · @mapto

69 followers · 193 posts · Server qoto.org

RT @IslabUnimi@twitter.com

'In what exact way is ChatGPT intelligent?'

A new article, by @mapto@twitter.com, is available on our blog. Check it out! http://tales.islab.di.unimi.it/2023/03/13/in-what-exact-way-is-chatgpt-intelligent/

#talesfromtheISLab #ChatGPT #languagemodels

🐦🔗: https://twitter.com/IslabUnimi/status/1635360681649389568

Jon Awbrey · @Inquiry

198 followers · 1334 posts · Server mathstodon.xyz

There is nothing new about that failure. There's a whole history of attempts to account for intelligence and indeed the workings of scientific inquiry on the principles of associationism, behaviorism, connectionism, and theories of that order. But the relationship of empirical evidence, logical inference, and scientific information is more complex and intricate than is dreamt of in those reductive philosophies.

#ScottAaronson #LanguageModels

3/3
