Deyan Ginev · @dginev

It is neat to see Meta AI using LaTeXML productively for arXiv preprocessing in their Nougat OCR work.

Good discussion in "5.2 Text modalities": there is indeed a lot of hidden complexity when recovering TeX input strings.

Rather tempting to wish for a way to normalize to "canonical" expressions...

project homepage: https://facebookresearch.github.io/nougat/

arXiv preprint:

https://arxiv.org/abs/2308.13418

Deyan Ginev · @dginev

This lovely open access book is also distributed as MathML-native HTML and (to my surprise!) with some help from LaTeXML.

"Artificial Intelligence 3E: foundations of computational agents",

by David L. Poole & Alan K. Mackworth

Deyan Ginev · @dginev

Richard Zach announces today that their book:

"forall x: Calgary. An Introduction to Formal Logic"

is now available as accessible HTML, via #LaTeXML and #BookML

Read more at

https://openlogicproject.org/2023/07/27/forall-x-now-in-html-for-extra-accessibility/

Deyan Ginev · @dginev

A common story:

"Nothing really worked perfectly – #LaTeXML looks great, but is still a little complicated.

So, I [...] recreated the table as a machine-readable YAML file which is transformed to TeX and HTML by using respective templates with Jinja."

https://x-dev.pages.jsc.fz-juelich.de/2022/11/02/gpu-vendor-model-compat.html
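The quoted workaround keeps one data file as the single source of truth and renders it through two templates, one for TeX and one for HTML. Here is a minimal sketch of that idea in Python, with two substitutions stated up front: the standard-library `string.Template` stands in for Jinja, and the data is written in JSON syntax (which is also valid YAML) so it loads with the standard `json` module instead of a YAML parser. The table contents, the `render_tex`/`render_html` helpers, and the escaping rules are hypothetical illustrations, not the linked post's actual code.

```python
import html
import json
from string import Template

# Hypothetical placeholder data (NOT the real GPU compatibility table).
# JSON syntax is also valid YAML, so this stands in for the YAML file.
DATA = """
{
  "columns": ["Vendor", "Model", "Supported"],
  "rows": [
    ["Vendor A", "Model X", "yes"],
    ["Vendor B", "Model Y", "partial"],
    ["R&D Lab", "Model_Z", "no"]
  ]
}
"""

# string.Template is used here as a stand-in for Jinja templates.
TEX_TEMPLATE = Template(r"""\begin{tabular}{$spec}
$header
\hline
$body
\end{tabular}""")

HTML_TEMPLATE = Template("""<table>
<thead>
$header
</thead>
<tbody>
$body
</tbody>
</table>""")

# Simplified TeX escaping: backslash, ~ and ^ are deliberately not handled.
_TEX_SPECIALS = {ch: "\\" + ch for ch in "&%$#_{}"}


def tex_escape(text):
    """Backslash-escape the TeX specials listed in _TEX_SPECIALS."""
    return "".join(_TEX_SPECIALS.get(ch, ch) for ch in text)


def tex_row(cells):
    """One tabular row: cells joined by & and terminated by \\\\."""
    return " & ".join(tex_escape(str(c)) for c in cells) + r" \\"


def html_row(cells, tag):
    """One <tr> whose cells use the given tag (th or td), HTML-escaped."""
    inner = "".join(f"<{tag}>{html.escape(str(c))}</{tag}>" for c in cells)
    return f"<tr>{inner}</tr>"


def render_tex(table):
    return TEX_TEMPLATE.substitute(
        spec="l" * len(table["columns"]),
        header=tex_row(table["columns"]),
        body="\n".join(tex_row(r) for r in table["rows"]),
    )


def render_html(table):
    return HTML_TEMPLATE.substitute(
        header=html_row(table["columns"], "th"),
        body="\n".join(html_row(r, "td") for r in table["rows"]),
    )


if __name__ == "__main__":
    table = json.loads(DATA)
    print(render_tex(table))
    print()
    print(render_html(table))
```

With PyYAML and Jinja installed, the same structure would load via `yaml.safe_load` and render via `jinja2.Template(...).render(...)`. The appeal of the design is that the TeX and HTML outputs cannot drift apart, since neither is edited by hand.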

Leonard/Janis Robert König · @ljrk

Currently experimenting with adapting the @pandoc output from #Markdown to #HTML to work with the awesome #CSS built for #arxiv by @dginev. I could also use #pandoc to convert to #LaTeX and then run #LaTeXML through their https://github.com/dginev/ar5ivist tool for #arxiv, but why the round-trip? The changes are still incomplete: not all font settings are adjusted yet, and right now all footnotes are marked with the same symbol. Still, I'm quite happy with the intermediate results :-)

This gives me reactive footnotes, shown either in the margins (almost #Tufte style) or on hover, nicer link highlighting, and quite acceptably justified text (I'm surprised how far the web has come). I haven't tweaked the fonts further yet, and I want to keep my code indented.

Left: run through pandoc with a custom HTML template & some #Lua filters

Right: Current state as seen on https://ljrk.codeberg.page/unixv6-alloc.html produced with a minimalist CSS stylesheet I stole from somewhere.

#markdown #html #css #arxiv #pandoc #latex #latexml #tufte #lua

Deyan Ginev · @dginev

My honest response is, as usual for this theme: "here we go again" 😀

There are so many unfinished LaTeX implementations, but some of them are indeed useful.

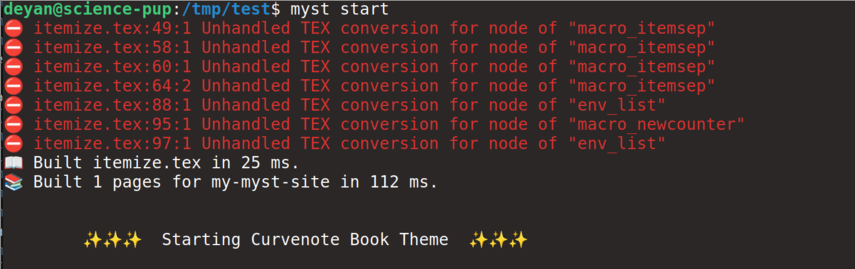

I have a "fake" benchmark file that I use to compare various tools, and here's the first encounter with it. It isn't particularly important: just one of the many #LaTeXML tests.

On my machine you stack up quite well; only tralics, pandoc, hevea, and the Rust rewrite of LaTeXML are faster:

https://gist.github.com/dginev/35236be7bc55fe1947e61e848e37bc3b

Deyan Ginev · @dginev

LaTeXML 0.8.7 was just released!

We're ready for MathML Core, which is expected to be available in all major browsers in early 2023.

With gratitude to the wider academic community, who helped drive another productive year of extending our TeX interpretation fidelity and our LaTeX ecosystem coverage.

Full release notes at:

https://github.com/brucemiller/LaTeXML/releases/tag/v0.8.7

Deyan Ginev · @dginev

#introduction Hi everyone, Deyan here!

I tend to discuss the journey of converting #arXiv into #ar5iv: an HTML5 preview site for the world's largest preprint server.

I'm helping to develop the next generation of #latexml and #mathml, focusing on the most idiosyncratic corners of #LaTeX and math syntax.

And you'll see the occasional AI art / large language model experiment flying by as well...

#latex #mathml #latexml #ar5iv #arxiv #introduction