Carsten Spille · @CarstenSpille

96 followers · 41 posts · Server social.tchncs.deApparently,

@AMD

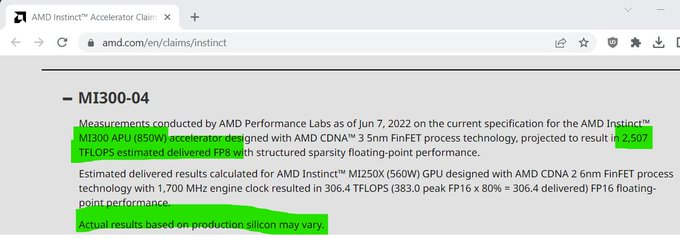

Instinct #MI300A will deliver 2507 TFlops of FP8 with sparsity at 850 watts based on pre-production silicon. That's put against 306,4 delivered TFlops (FP16, no FP8-support) on MI250X at 560 watts (80% utilization). Footnote's a year old, but wasn't live until a couple of days ago and in David Wang's Presentation at AMD FAD22, both numbers weren't present.
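For a rough sense of what those two footnote figures imply, here is a minimal sketch that divides each quoted throughput by its quoted power. The variable and function names are made up for illustration, and the comparison mixes FP8 with sparsity against dense FP16, so the ratio should be read as a ballpark, not a like-for-like efficiency claim.

```python
# Figures as quoted in the post; nothing here is measured independently.
mi300a_tflops, mi300a_watts = 2507.0, 850.0  # FP8 with sparsity, pre-production silicon
mi250x_tflops, mi250x_watts = 306.4, 560.0   # FP16, delivered at 80% utilization


def tflops_per_watt(tflops: float, watts: float) -> float:
    """Throughput per watt for one accelerator (hypothetical helper)."""
    return tflops / watts


mi300a_eff = tflops_per_watt(mi300a_tflops, mi300a_watts)
mi250x_eff = tflops_per_watt(mi250x_tflops, mi250x_watts)
ratio = mi300a_eff / mi250x_eff

print(f"MI300A: {mi300a_eff:.2f} TFlops/W")
print(f"MI250X: {mi250x_eff:.2f} TFlops/W")
print(f"Ratio:  {ratio:.1f}x")
```

On these numbers the MI300A figure works out to roughly five times the MI250X figure per watt, with the caveat that the precisions and sparsity assumptions differ.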

Benjamin Carr, Ph.D. 👨🏻💻🧬 · @BenjaminHCCarr

797 followers · 2009 posts · Server hachyderm.io

#AMD Has a #GPU to Rival #Nvidia’s #H100

#MI300X is a GPU-only version of the previously announced #MI300A supercomputing chip, which includes a #CPU and a #GPU. The MI300A will be in El Capitan, a supercomputer coming next year to the #LawrenceLivermore #NationalLaboratory. El Capitan is expected to surpass 2 exaflops of performance. The MI300X has 192GB of #HBM3, which Su said was 2.4 times more memory density than Nvidia’s H100. The SXM and PCIe versions of H100 have 80GB of HBM3.

https://www.hpcwire.com/2023/06/13/amd-has-a-gpu-to-rival-nvidias-h100/

#amd #gpu #nvidia #H100 #mi300x #mi300a #cpu #LawrenceLivermore #nationallaboratory #hbm3

Benjamin Carr, Ph.D. 👨🏻💻🧬 · @BenjaminHCCarr

797 followers · 2005 posts · Server hachyderm.io

#AMD Instinct #MI300 is THE Chance to Chip into #NVIDIA #AI Share

NVIDIA is facing very long lead times for its #H100 and #A100; if you want NVIDIA for AI and have not ordered, don't expect it before 2024. For a traditional #GPU, the MI300X is the GPU-only part. All four center tiles are GPU. With 192GB of #HBM, AMD can simply fit more onto a single GPU than NVIDIA. #MI300A has 24 #Zen4 cores, #CDNA3 GPU cores, and 128GB of #HBM3. This is the part deployed in the El Capitan 2+ Exaflop #supercomputer.

https://www.servethehome.com/amd-instinct-mi300-is-the-chance-to-chip-into-nvidia-ai-share/

#amd #nvidia #ai #H100 #a100 #gpu #hbm #mi300a #Zen4 #cdna3 #hbm3 #supercomputer