Erik W. Bjønnes · @Erik_W_B

99 followers · 604 posts · Server mastodon.gamedev.place

Spent the day programming #python for the first time in ages. On the one hand, it’s great how large the ecosystem is and how quickly I can get something up and running; on the other hand, it didn’t take long before I wished I was back in #RustLang or even #CPlusPlus!

The amount of foot guns, trip mines and quicksand was #%^*! Luckily #numpy helped keep me on track for the most part.

Perhaps it’s time to check out using Python libraries from Rust…

#python #rustlang #cplusplus #numpy

Elod Pal Csirmaz 🏳️‍🌈 · @csirmaz

21 followers · 16 posts · Server fosstodon.org

How decomposing #4D objects into lower-dimensional faces helps to determine intersections and containment: https://onkeypress.blogspot.com/2023/08/hypergeometry-intersections-and.html

Part of an ongoing project to extend #raytracing to higher dimensions. #CGI #Python #numpy

#4d #raytracing #cgi #python #numpy
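To make "lower-dimensional faces" concrete, here is a small sketch that is not taken from the linked post: it assumes an axis-aligned unit hypercube [0, 1]^n (the blog's own objects and method may differ) and enumerates its k-dimensional faces by choosing which k axes stay free and fixing the rest at 0 or 1. A point lies in a face when its fixed coordinates match and its free coordinates stay inside [0, 1]. The function names `hypercube_faces` and `point_in_face` are hypothetical.

```python
from itertools import combinations, product
from math import comb


def hypercube_faces(n, k):
    """Return the k-dimensional faces of the unit n-cube [0, 1]^n.

    Each face is a tuple of length n: None marks a free axis (the face
    extends along it); 0 or 1 marks an axis fixed at that coordinate.
    """
    faces = []
    for free_axes in combinations(range(n), k):
        fixed_axes = [i for i in range(n) if i not in free_axes]
        for values in product((0, 1), repeat=n - k):
            face = [None] * n
            for axis, value in zip(fixed_axes, values):
                face[axis] = value
            faces.append(tuple(face))
    return faces


def point_in_face(point, face, tol=1e-9):
    """Containment test: fixed axes must match, free axes must lie in [0, 1]."""
    return all(
        (-tol <= x <= 1 + tol) if fixed is None else (abs(x - fixed) <= tol)
        for x, fixed in zip(point, face)
    )


# Tesseract (n = 4): count vertices, edges, squares and cubes,
# alongside the closed-form count C(n, k) * 2^(n - k).
for k in range(4):
    print(k, len(hypercube_faces(4, k)), comb(4, k) * 2 ** (4 - k))
```

For a tesseract this gives 16 vertices, 32 edges, 24 squares and 8 cubes, matching the closed-form count.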

Michał Górny · @mgorny

186 followers · 853 posts · Server pol.social

Think you've got it hot today?

First, I fought a new segfault in #pydantic 2 with Python 3.12. I didn't get far; at most I established that it's a #heisenbug.

https://github.com/pydantic/pydantic/issues/7181

Then I tested a fresh #LLVM snapshot, only to discover that the tests were failing on 32-bit platforms again. After bisecting, it turned out the cause was an "NFCi" (no functional change intended) change to the hashing logic; it looks like LLVM now prints functions in a platform-dependent order.

https://reviews.llvm.org/D158217#4600956

Finally, I fought a segfault in the #trimesh tests. Apparently it's a regression in the #numpy 1.26.0 beta, so I produced a backtrace and filed a bug report.

#python #gentoo #numpy #trimesh #llvm #heisenbug #pydantic

Sergi · @sergi

33 followers · 500 posts · Server floss.social

I really liked @t_redactyl's talk about #Python optimization with #Numpy. I thought it was going to be the typical "arrays are quicker than loops and that's it", but I didn't know about broadcasting and really liked the trick with sorting.

I also really liked the time spent explaining how lists are laid out in memory compared with arrays.

Check it out at https://youtu.be/8Nwk-elxdEQ
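Broadcasting lets arithmetic between arrays of compatible shapes run without explicit Python loops: size-1 axes are stretched to match. A minimal sketch (not taken from the talk; the points are made up) computing pairwise distances both ways:

```python
import numpy as np

points = np.array([[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]])  # shape (3, 2)

# Loop version: pairwise Euclidean distances, one pair at a time.
n = len(points)
dist_loop = np.zeros((n, n))
for i in range(n):
    for j in range(n):
        dist_loop[i, j] = np.sqrt(((points[i] - points[j]) ** 2).sum())

# Broadcast version: (3, 1, 2) - (1, 3, 2) -> (3, 3, 2), no Python loop.
diff = points[:, np.newaxis, :] - points[np.newaxis, :, :]
dist = np.sqrt((diff ** 2).sum(axis=-1))

print(dist)
```

Both versions produce the same 3x3 matrix; the broadcast one moves the double loop into NumPy's compiled code.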

Tom Larrow · @TomLarrow

465 followers · 1092 posts · Server vis.social

Today's #CreativeCoding is some inset rectangle packing. Rather than storing all these as objects as in a traditional packing algorithm, it evaluates the pixel array as a NumPy array, then compares the values to make sure they are all the same.

This is really inefficient, and takes more than an hour to generate an image, but this was more my way of learning more #Numpy functions and deepening my #python understanding #py5

Code: https://codeberg.org/TomLarrow/creative-coding-experiments/src/branch/main/x_0095

#creativecoding #py5 #python #numpy
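A rough sketch of the "are all these pixels the same value?" check, assuming a plain NumPy array indexed [row, column] as a stand-in for the py5 pixel array (the linked code may lay out and compare pixels differently). `region_is_uniform` is a hypothetical helper name.

```python
import numpy as np


def region_is_uniform(pixels, x, y, w, h):
    """Return True if every pixel in the w-by-h rectangle with top-left (x, y)
    has the same value.

    `pixels` is indexed [row, column] (i.e. [y, x]) and may be 2-D (one value
    per pixel) or 3-D (rows, columns, channels).
    """
    rows, cols = pixels.shape[:2]
    # Reject rectangles that are empty or leave the canvas; NumPy slicing
    # would otherwise silently truncate them.
    if w <= 0 or h <= 0 or x < 0 or y < 0 or x + w > cols or y + h > rows:
        return False
    region = pixels[y:y + h, x:x + w]
    # Compare every pixel with the top-left one; for 3-D input the
    # comparison broadcasts across the channel axis.
    return bool(np.all(region == region[0, 0]))


# Usage on a stand-in canvas: black background with one white square.
canvas = np.zeros((100, 100), dtype=np.uint8)
canvas[10:20, 10:20] = 255
print(region_is_uniform(canvas, 30, 30, 20, 20))  # True: background only
print(region_is_uniform(canvas, 5, 5, 10, 10))    # False: overlaps the square
```

Each check is vectorized, but a packing loop still calls it many times per candidate rectangle, which is consistent with the slow runtime described above.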

Andreas Dutzler · @adtzlr

3 followers · 15 posts · Server mathstodon.xyz

Why are the "batch" axes always the leading axes in NumPy? I designed all my packages to use the trailing axes as batch axes because this seems more natural to me. Now I'm thinking about switching to NumPy's convention, just to make things more intuitive for NumPy users. Any ideas on that? #python #batch #numpy
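For context on the convention: `np.matmul` (the `@` operator) and the `np.linalg` routines treat the last two axes as the matrix and broadcast over any leading axes, so leading batch axes work with them directly. A short sketch with arbitrary shapes; data stored with trailing batch axes can be moved with `np.moveaxis`, which returns a view rather than a copy.

```python
import numpy as np

rng = np.random.default_rng(0)

# NumPy layout: batch axis first, "core" matrix axes last.
mats = rng.standard_normal((10, 3, 3))  # 10 stacked 3x3 matrices
vecs = rng.standard_normal((10, 3))     # 10 stacked 3-vectors

dets = np.linalg.det(mats)                # shape (10,): one determinant per matrix
prods = mats @ mats                       # shape (10, 3, 3): batched matrix product
mv = np.einsum("bij,bj->bi", mats, vecs)  # shape (10, 3): batched matrix-vector product

# Trailing-batch layout, shape (3, 3, 10): move the batch axis to the front
# before calling routines that expect leading batch axes.
mats_trailing = np.moveaxis(mats, 0, -1)
dets_again = np.linalg.det(np.moveaxis(mats_trailing, -1, 0))
```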

py5coding · @py5coding

135 followers · 178 posts · Server fosstodon.org

Just added two new methods `to_pil()` and `get_np_pixels()` to the Sketch, Py5Graphics, and Py5Image classes in #py5. The first returns a PIL Image object and the second gets the pixels as a #numpy array. Both advance the goal of further integrating py5 into the #python ecosystem.

Stark · @Stark9837

485 followers · 2536 posts · Server techhub.social

For research projects where I use #NumPy and #MatPlotLib, I actually like using #Jupyter! It is just easier to run code and view my plots.

With #PyQt, you can even have widgets like sliders and other #Qt stuff.

It just speeds up my prototyping and makes me more productive. Naturally, only my plotting code and math exist in the .ipynb, and the rest is just imported from normal .py files. Thus, it allows for quick conversions once the prototyping is done.

#numpy #matplotlib #jupyter #pyqt #qt #python

Barrett · @ba66e77

12 followers · 55 posts · Server fosstodon.org

I know it's common in #python to import #pandas as `pd` and #numpy as `np`, but I just hate it.

For some reason it takes me extra brain power to decipher `pd` to pandas when I'm reading over modules. I'd rather improve readability and type a bit more when I'm writing the program.

Is it just me?

WhizKidz · @whizkidz

3 followers · 9 posts · Server mslink.com

Join us on a journey to Python: Across the Numpy-Verse on September 3rd from 5:30 PM to 7:00 PM PST. Sign up for free at https://www.meetup.com/whizkidz-computer-programmers/events/294511297/.

#coding #data_science #free #numpy #programming #python #spiderman

Tom Larrow · @TomLarrow

448 followers · 978 posts · Server vis.social

Sometimes I go into a #CreativeCoding session wanting to make something specific. Other times I wonder what will happen if I try something weird.

This is one of those. I use #Py5 to draw short lines on the screen, then capture the pixel array in a #NumPy array. Then I use NumPy's `roll` function to literally roll those pixel values around the array, writing them back to the canvas once they are in different positions. This is the result after thousands of positions.

Code https://codeberg.org/TomLarrow/creative-coding-experiments/src/branch/main/x_0084
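What `np.roll` does to a pixel grid, shown on a tiny stand-in array rather than a real py5 capture (how the linked sketch chooses shifts and writes values back is in its code): values pushed off one edge wrap around to the opposite edge, and without an `axis` argument the array is rolled as if flattened.

```python
import numpy as np

# Stand-in for a captured pixel array: a tiny 3x4 grid of values.
pixels = np.arange(12).reshape(3, 4)

right = np.roll(pixels, 1, axis=1)  # columns shift right; the last column wraps to the front
down = np.roll(pixels, 1, axis=0)   # rows shift down; the last row wraps to the top
flat = np.roll(pixels, 1)           # no axis: roll the flattened array, then restore the shape

# Repeated small rolls cycle the values; after as many steps as the axis
# length, the array is back where it started.
state = pixels.copy()
for _ in range(pixels.shape[1]):
    state = np.roll(state, 1, axis=1)

print(right)
print(down)
print(flat)
```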

Clément Robert · @neutrinoceros

108 followers · 229 posts · Server fosstodon.org

#Cython 3.0 is (almost) out!

(binaries are being deployed right now, so it should be available in a couple hours)

https://github.com/cython/cython/releases/tag/3.0.0

This is exciting news for our ecosystem because it's the first stable version able to *not* generate deprecated C-API #numpy code. When it's widely adopted, NumPy devs will finally be able to move forward with performance optimisations that were not possible without breaking everyone's favourite package!

dillonniederhut · @dillonniederhut

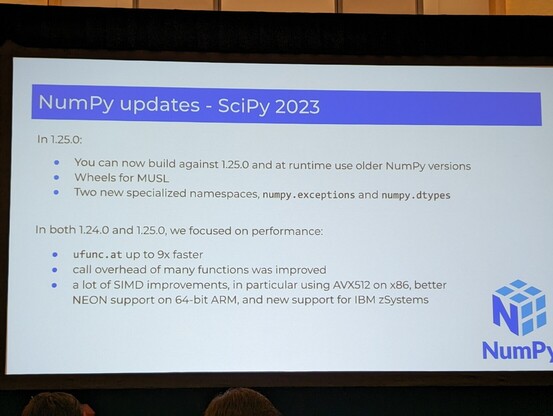

95 followers · 98 posts · Server fosstodon.org

If you use #NumPy, upper-bound your dependencies to <2.0 now.

Also, as of 1.25, you no longer need to use oldest-supported-numpy in your builds.

stark@techhub.social:~$ █ · @Stark9837

380 followers · 2007 posts · Server techhub.social

Isn't that the rule? If your code is slow, use #Numpy. If you need more speed and optimization, implement it from first principles in C?
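The first half of that rule of thumb is easy to check for yourself. A minimal timing sketch comparing an interpreted Python loop with a single NumPy call on the same data; the array size and repeat count are arbitrary, and timings depend on the machine, so no numbers are quoted here.

```python
import timeit

import numpy as np

values = np.random.default_rng(0).random(100_000)


def sum_of_squares_loop(xs):
    """Plain Python loop: one interpreted iteration per element."""
    total = 0.0
    for x in xs:
        total += x * x
    return total


def sum_of_squares_numpy(xs):
    """Single call into NumPy's compiled code."""
    return float(np.dot(xs, xs))


# Same quantity, up to floating-point rounding differences.
assert np.isclose(sum_of_squares_loop(values), sum_of_squares_numpy(values))

loop_s = timeit.timeit(lambda: sum_of_squares_loop(values), number=5)
numpy_s = timeit.timeit(lambda: sum_of_squares_numpy(values), number=5)
print(f"loop: {loop_s:.4f} s   numpy: {numpy_s:.4f} s")
```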

dillonniederhut · @dillonniederhut

73 followers · 56 posts · Server fosstodon.org

It's really hard to write native C code for operating on arrays that runs faster than #NumPy. Even in 1D.

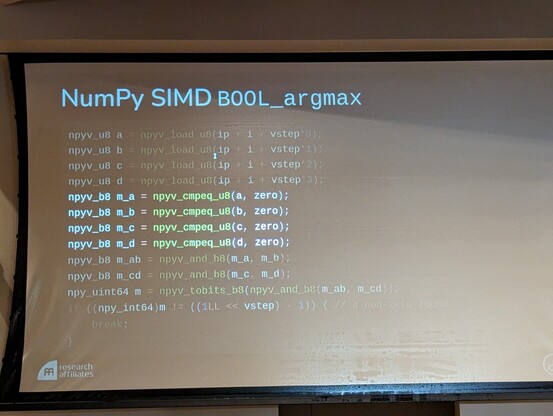

Why? NumPy makes extensive use of SIMD.

- Christopher Ariza at #SciPy2023