David Meyer · @dmm

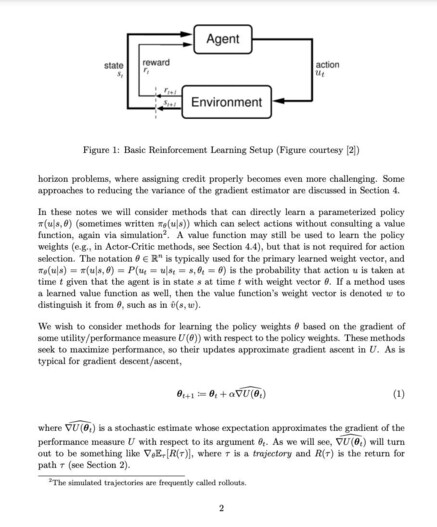

222 followers · 579 posts · Server mathstodon.xyzPolicy gradient methods in reinforcement learning are very cool and are used in a wide variety of machine learning applications including robotics, game playing, autonomous vehicles and many others, including incremental training of Large Language Models (LLMs).

A few of my notes on policy gradients are here: https://davidmeyer.github.io/ml/policy_gradient_methods_for_robotics.pdf. The LaTeX source is here: https://www.overleaf.com/read/kbgxbmhmrksb.

As always questions/comments/corrections/* greatly appreciated.

#machinelearning #Reinforcementlearning #policygradients #largelanguagemodelsa #TeXLaTeX

#texlatex #largelanguagemodelsa #policygradients #reinforcementlearning #machinelearning

David Meyer · @dmm

218 followers · 510 posts · Server mathstodon.xyzWhy is the Law of Large Numbers important when estimating likelihood ratio policy gradients?

Because it allows us to compute an unbiased estimate of an expectation as a sum, see the attached image.

Some of my notes on likelihood ratio policy gradients are here: http://www.1-4-5.net/~dmm/ml/lln_policy_gradient.pdf.

As always, questions/comments/corrections/* greatly appreciated.

#math #machinelearning #policygradients #reinforcementlearning #lawoflargenumbers

#LawOfLargeNumbers #reinforcementlearning #policygradients #machinelearning #math

David Meyer · @dmm

211 followers · 483 posts · Server mathstodon.xyzThe "log derivative trick" (see image below) is an incredibly cool and useful thing. Essentially what it does (in many cases anyway) is to provide a method for estimating a gradient in terms of an expectation, which is a big win (because the law of large numbers tells us that we can estimate the expectation in an unbiased way directly from the samples). One place it comes up is in likelihood ratio policy gradients for reinforcement learning.

See https://davidmeyer.github.io/ml/policy_gradient_methods_for_robotics.pdf for some notes on all of this.

#policygradients #logderivativetrick #machinelearning