Harald Sack · @lysander07

741 followers · 454 posts · Server sigmoid.social

Next stop on our brief #timeline of (Large) #LanguageModels is 2022:

InstructGPT is introduced by OpenAI, a GPT-3 model complemented and fine-tuned with reinforcement learning from human feedback.

ChatGPT is introduced by OpenAI as a combination of GPT-3, Codex, and InstructGPT including lots of additional engineering.

#ise2023 lecture slides: https://drive.google.com/file/d/1atNvMYNkeKDwXP3olHXzloa09S5pzjXb/view?usp=drive_link

#RLHF explained: https://huggingface.co/blog/rlhf

#ai #creativeai #rlhf #gpt3 #gpt #openai #chatgpt #lecture #artificialintelligence #llm

Bundesligatrainer · @Bundesligatrainer

7 followers · 203 posts · Server det.social

@SheDrivesMobility @isotopp Nice text, but I can't understand why everyone feeds #ChatGPT with content. The outfit belongs to #Microsoft, the de facto desktop OS monopolist (#Monopolisten).

Anyone who uses this tool makes us even more dependent on them.

Where possible, use alternatives, or even #opensource alternatives:

https://dev.to/ayka_code/introducing-palm-rlhf-the-open-source-alternative-to-openais-chatgpt-4iaf

#palm #rlhf

danmcquillan · @danmcquillan

1053 followers · 630 posts · Server kolektiva.social

people awed by LLMs are missing the way their awe is being constructed: "...devising complex scenarios to trick chatbots into giving dangerous advice, testing a model’s ability to stay in character, & having detailed conversations about scientific topics" https://www.theverge.com/features/23764584/ai-artificial-intelligence-data-notation-labor-scale-surge-remotasks-openai-chatbots #ai #llm #rlhf

danmcquillan · @danmcquillan

1053 followers · 629 posts · Server kolektiva.social

best summary of RLHF i've seen: "ChatGPT seems so human because it was trained by an AI that was mimicking humans who were rating an AI that was mimicking humans who were pretending to be a better version of an AI that was trained on human writing" https://www.theverge.com/features/23764584/ai-artificial-intelligence-data-notation-labor-scale-surge-remotasks-openai-chatbots #ai #rlhf

Hobson Lane · @hobs

778 followers · 2326 posts · Server mstdn.social

@jneno

Yes. #RLHF rewards answers that attract likes. So the answers get more likable and agreeable (and not necessarily more factual) over time.

#stochasticsycophant #stochasticchameleon #rlhf

Hobson Lane · @hobs

778 followers · 2326 posts · Server mstdn.social

Really excited to hear how multimodal learning and smarter metrics (other than just a like button) are helping to overcome the biases and lies caused by #ChatGPT's myopic focus on the #RLHF appeal/likeability of responses.

The TWIML AI Podcast: Unifying Vision and Language Models with Mohit Bansal - #636

webpage: https://twimlai.com/podcast/twimlai/unifying-vision-and-language-models

Audio file: https://chrt.fm/track/4D4ED/traffic.megaphone.fm/MLN9407594028.mp3?updated=1688409213

Ulrich Junker · @UlrichJunker

376 followers · 2367 posts · Server fediscience.org

@TedUnderwood you are referring to #RLHF (reinforcement learning from human feedback) as a way of having human authors correct transformer output. But this technique also covers learning preferences from humans. That aspect has received little attention in the debate about #LLMs, yet it may well be decisive for ChatGPT’s success. What is your opinion about this? https://proceedings.neurips.cc//paper_files/paper/2022/hash/b1efde53be364a73914f58805a001731-Abstract-Conference.html

Eric Horwath · @EricHorwath

45 followers · 44 posts · Server hachyderm.io

@brianchristian thanks for sharing: The Alignment Problem - #MachineLearning and #Human #Values

My takeaways:

"AI is a force multiplier for everything. For good, but also for #capitalism and #neoliberalism "

"Putting culture on autopilot is dangerous"

"I don't think that the US legal system favors trade secrets over civil rights" <3

#opensource #foss

#Machine #love by Joel Lehman http://export.arxiv.org/abs/2302.09248v2

#ai #artificialintelligence #chatgpt #ml #rlhf (Reinforcement learning from human feedback)

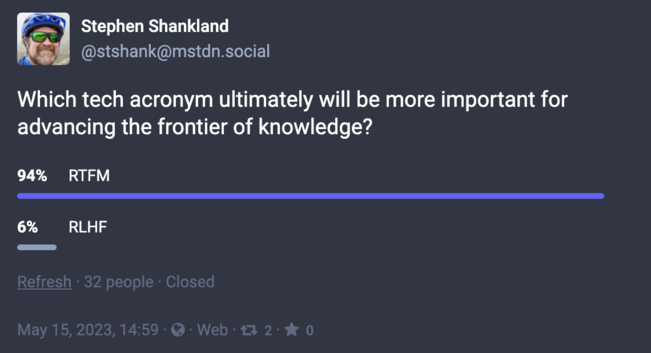

Stephen Shankland · @stshank

2710 followers · 604 posts · Server mstdn.social

Ulrich Junker · @UlrichJunker

357 followers · 1684 posts · Server fediscience.org

Interesting interview which mentions #ReinforcementLearning with human feedback #rlhf.

“#ChatGPT architect John Schulman discusses his journey with #AI during UC Berkeley visit”

#ai #chatgpt #rlhf #ReinforcementLearning

coffe · @coffe

65 followers · 317 posts · Server social.piewpiew.se

3. The models need to be retrained over and over again. This is usually done on both new and previously known information. It requires a lot of energy and a lot of data.

3/4

#ai #rlhf #gpt #bard #LLM #data #language #energi #Google #openAI

coffe · @coffe

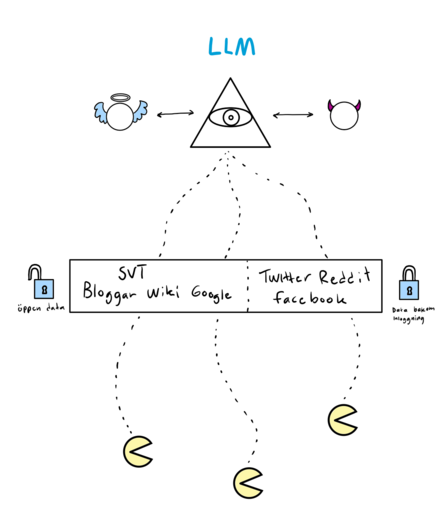

65 followers · 316 posts · Server social.piewpiew.se

2. Most of the AI solutions we read about in the media right now are Large Language Models (LLMs). They have been trained on large amounts of text data, that is, everything from blog posts, news, and books to social media. More and more actors now want the AI companies to pay them for access to their data, e.g. Twitter and Reddit.

2/4

#ai #rlhf #gpt #bard #LLM #data #language #energi #Google #openAI

coffe · @coffe

65 followers · 315 posts · Server social.piewpiew.se

A few things to keep in mind when we talk about ChatGPT

1. ChatGPT is one of many ways to interact with the underlying model(s), which are simply called GPT plus a version number. It is not just ChatGPT that uses the GPT models. Several companies have built services that use GPT, including Microsoft.

1/4

#ai #rlhf #gpt #bard #LLM #data #language #energi #Google #openAI

coffe ⚓ · @coffe

170 followers · 1248 posts · Server fosstodon.org

3. The models need to be retrained over and over again. This is usually done on both new and previously known information. It requires a lot of energy and a lot of data.

3/4

#ai #rlhf #gpt #bard #LLM #data #language #energy #Google #openAI

coffe ⚓ · @coffe

170 followers · 1247 posts · Server fosstodon.org

2. Most of the AI solutions we read about in the media right now are Large Language Models (LLMs). They have been trained on large amounts of text data, including blog posts, news, books, social media, etc. More and more actors now want AI companies to pay them for access to their data, e.g. Twitter and Reddit.

2/4

#ai #rlhf #gpt #bard #LLM #data #language #energy #Google #openAI

coffe ⚓ · @coffe

170 followers · 1246 posts · Server fosstodon.org

A few things to keep in mind when we talk about ChatGPT:

1. ChatGPT is one of many ways to interact with the underlying model(s) that are simply called GPT plus a version number. It's not just ChatGPT that uses the GPT models. Several companies have built services that use GPT, including Microsoft.

1/4

#ai #rlhf #gpt #bard #LLM #data #language #energy #Google #openAI

5h15h · @shish

95 followers · 616 posts · Server techhub.social

#OpenAI uses #ReinforcementLearning from human feedback (#RLHF), an established technique, to enhance the safety, usefulness, and alignment of its models https://openai.com/research/instruction-following

#openai #reinforcementlearning #rlhf #AI #genai #generativeAI #chatgpt #gpt #rl

Tech news from Canada · @TechNews

441 followers · 12575 posts · Server mastodon.roitsystems.ca

Ars Technica: The mounting human and environmental costs of generative AI https://arstechnica.com/?p=1929528 #Tech #arstechnica #IT #Technology #largelanguagemodels #generativeai #Features #chat-gpt #Tech #LLMs #rlhf #AI

IT News · @itnewsbot

3086 followers · 256081 posts · Server schleuss.online

The mounting human and environmental costs of generative AI

Over the past f... - https://arstechnica.com/?p=1929528 #largelanguagemodels #generativeai #features #chat-gpt #tech #llms #rlhf #ai
