Mayobrot · @Mayobrot

104 followers · 913 posts · Server zirk.us

What stuff should I put in my robots.txt? Feel free to suggest overly restrictive entries.

Markus Feilner :verified: · @mfeilner

696 followers · 4778 posts · Server mastodon.cloud

The question is:

Is scraping for the AI systems of alt-right US corporations "fair use"?

I have a strong opinion. But I am not with the IP corps either.

#chatGPT #robotstxt

New York Times, CNN and Australia’s ABC block OpenAI’s GPTBot web crawler from accessing content | Artificial intelligence (AI) | The Guardian https://www.theguardian.com/technology/2023/aug/25/new-york-times-cnn-and-abc-block-openais-gptbot-web-crawler-from-scraping-content

🐙 Compañero Allende · @morpheo

383 followers · 17853 posts · Server kolektiva.social

I have a question. It's really dumb, but here goes: Why would any bad actor (e.g. Shitter) care to respect robots.txt?

#robotstxt #RhetoricalQuestions

Dirk Haun · @dirkhaun

102 followers · 640 posts · Server tinycities.net

PSA: If you're running @writefreely, make sure your server is set up to serve a robots.txt so that you can block bots you don't want to gobble up the contents of your website (looking at you, #ChatGPT).

Something like

    location = /robots.txt {
        alias /complete/path/to/your/robots.txt;
    }

in your #nginx configuration.

A wordier version of this #ServiceToot can be found here: https://blog.tinycities.net/dirkhaun/robots-txt-chatgpt-and-writefreely 🙈

#chatgpt #nginx #servicetoot #writefreely #robotstxt

bananabob · @bananabob

75 followers · 1781 posts · Server mastodon.nz

Sites scramble to block ChatGPT web crawler after instructions emerge

#arstechnica #chatgpt #gptbot #robotstxt

Ross A. Baker · @ross

838 followers · 964 posts · Server social.rossabaker.com

Setting up /robots.txt, not because it helps, but because being crabby in compliance with an RFC is satisfying.

Who has some unsavory ones besides ChatGPT and Twitterbot?

Mr.Trunk · @mrtrunk

6 followers · 11961 posts · Server dromedary.seedoubleyou.me

Gizmodo: Google Says It Will Scrape Publishers’ Data for AI Unless They Force It Not To https://gizmodo.com/google-bard-ai-scrape-websites-data-australia-opt-out-1850720633 #applicationsofartificialintelligence #generativepretrainedtransformer #computationalneuroscience #artificialintelligence #largelanguagemodels #technologyinternet #thenewyorktimes #robotstxt #deepfake #gizmodo #chatbot #chatgpt #google #openai #palm2 #bard

Benjamin Carr, Ph.D. 👨🏻💻🧬 · @BenjaminHCCarr

977 followers · 2499 posts · Server hachyderm.io

Now you can block #OpenAI’s #webcrawler

OpenAI now lets you block its web crawler from scraping your site to help train #GPT models. OpenAI said website operators can specifically disallow its #GPTBot crawler on their site's #Robots.txt file or block its IP address.
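A minimal sketch, using Python's standard `urllib.robotparser`, of checking that a robots.txt blocks `GPTBot` (the token named in the post) while leaving other crawlers such as Googlebot alone. The file contents and the `example.com` URL are hypothetical. Python's parser applies the first matching rule rather than RFC 9309's longest-match rule, so treat it as a quick sanity check, not a reference implementation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block GPTBot site-wide, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

def parser_from_text(text: str) -> RobotFileParser:
    """Build a parser from robots.txt content without fetching anything."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser

parser = parser_from_text(ROBOTS_TXT)
page = "https://example.com/blog/post-1"  # hypothetical URL
for agent in ("GPTBot", "Googlebot"):
    verdict = "allowed" if parser.can_fetch(agent, page) else "blocked"
    print(f"{agent}: {verdict}")
# GPTBot: blocked
# Googlebot: allowed
```

The trailing `User-agent: *` group is optional, since crawlers with no matching group are unrestricted anyway; it just makes the intent explicit.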

https://www.theverge.com/2023/8/7/23823046/openai-data-scrape-block-ai #privacy #security #RobotsTxT

#openai #webcrawler #gpt #gptbot #robots #privacy #security #robotstxt

Tomodachi94 · @tomodachi94

17 followers · 274 posts · Server floss.social

@Seirdy I've updated my blog to include both of the user agents.

🙈 I didn't actually know you could do that on GitHub Pages, but it turns out... you can!

Laravista · @laravista

20 followers · 257 posts · Server mastodon.uno

#RobotsTxt is not the answer: Proposing a new meta tag for LLM/AI

https://searchengineland.com/robots-txt-new-meta-tag-llm-ai-429510

Sebastian Nagel · @sebnagel

4 followers · 4 posts · Server fosstodon.org

Released #CrawlerCommons 1.4: Java 11, #RobotsTxt compliant with #rfc9309 - https://github.com/crawler-commons/crawler-commons#18th-july-2023----crawler-commons-14-released

#crawlercommons #robotstxt #rfc9309

PCH🎙️ :wp_fedi: · @phillycodehound

7452 followers · 4780 posts · Server masto.ai

@rustybrick do you think #Google will just ignore Robots.txt? I mean they'd love to be able to train on everything.

Though I would love more controls for stopping AI from scraping without blocking my sites from Search.

#google #seo #ai #scraping #robotstxt

Angus McIntyre · @angusm

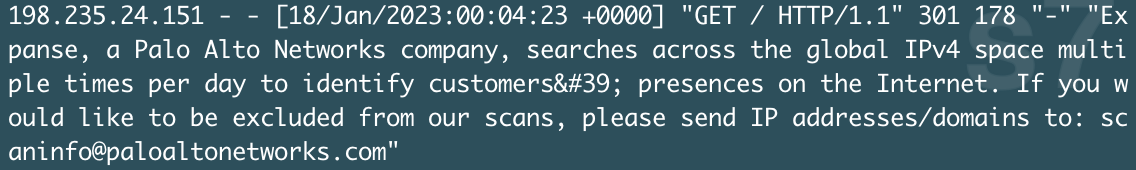

578 followers · 585 posts · Server mastodon.social

What the actual fuck?

Will someone kindly explain to "global cybersecurity leader" Palo Alto Networks that the User-Agent header is a place to put the name of your user agent? You send the name of your user agent, and you obey `robots.txt` (which they don't, of course). You DO NOT write a short essay ending with a request for people to mail you to opt-out. It is 2023 and the right way to do this was established DECADES ago.
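The well-established convention the post describes is: put the crawler's name in `User-Agent`, and check robots.txt before fetching. A minimal Python sketch of that, assuming a hypothetical bot called `ExampleBot` with a hypothetical info URL; it only builds the request and never contacts a server.

```python
from typing import Optional
from urllib.request import Request
from urllib.robotparser import RobotFileParser

# Hypothetical bot identity: a product token site owners can put in
# robots.txt, plus a URL where they can read about the crawler.
BOT_TOKEN = "ExampleBot"
USER_AGENT = f"{BOT_TOKEN}/1.0 (+https://example.com/bot-info)"

def polite_request(url: str, robots: RobotFileParser) -> Optional[Request]:
    """Return a Request that names the bot, or None if robots.txt forbids it."""
    if not robots.can_fetch(BOT_TOKEN, url):
        return None
    # Identify the crawler by name in the User-Agent header.
    return Request(url, headers={"User-Agent": USER_AGENT})

robots = RobotFileParser()
robots.parse(["User-agent: ExampleBot", "Disallow: /private/"])
print(polite_request("https://example.com/private/page", robots))  # None
req = polite_request("https://example.com/public/page", robots)
print(req.get_header("User-agent"))
```

`urllib.request.Request` normalizes header names with `str.capitalize()`, which is why the lookup uses `"User-agent"`.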

#paloaltonetworks #clownshoes #robotstxt #webcrawlers #www #web

Angus McIntyre · @angusm

511 followers · 483 posts · Server mastodon.social

Unsurprisingly, webmeup's assurance that "you will not see recurring requests from the BLEXBot crawler to the same page" turns out to be ... not true?

At least according to my log files, which show the same page getting hit at 5 day intervals as part of their process of fetching every single page on my site over and over to satisfy some vague marketing need.
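A rough sketch of how recurring crawler hits like these can be pulled out of an access log in Python. It assumes the "combined" log format that nginx and Apache use by default and matches the crawler by a substring of the User-Agent. The sample lines are hypothetical, for illustration only.

```python
import re
from collections import defaultdict
from datetime import datetime

# Assumes the "combined" access-log format (nginx/Apache default):
# host ident user [time] "METHOD path PROTO" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "\S+ (?P<path>\S+) [^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def revisit_gaps(lines, agent_substring):
    """Map each path to the gaps (in days) between hits from one crawler."""
    hits = defaultdict(list)
    for line in lines:
        m = LOG_LINE.match(line)
        if m and agent_substring.lower() in m.group("agent").lower():
            hits[m.group("path")].append(
                datetime.strptime(m.group("time"), "%d/%b/%Y:%H:%M:%S %z"))
    return {
        path: [(b - a).total_seconds() / 86400 for a, b in zip(ts, ts[1:])]
        for path, ts in ((p, sorted(t)) for p, t in hits.items())
    }

# Hypothetical log lines, for illustration only.
sample = [
    '203.0.113.5 - - [01/Aug/2023:10:00:00 +0000] "GET /about HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; BLEXBot/1.0)"',
    '198.51.100.7 - - [03/Aug/2023:09:00:00 +0000] "GET /about HTTP/1.1" 200 512 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
    '203.0.113.5 - - [06/Aug/2023:10:00:00 +0000] "GET /about HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; BLEXBot/1.0)"',
]
print(revisit_gaps(sample, "BLEXBot"))  # {'/about': [5.0]}
```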

So I think BLEXBot can join AHRefsBot and SEMRushBot in my robots.txt. And nothing of value was lost.
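RFC 9309 lets one group begin with several `User-agent` lines, so the three crawlers can share a single rule instead of repeating it. A sketch checked with Python's `urllib.robotparser`. Group matching on the product token is case-insensitive, so `AhrefsBot` and `AHRefsBot` refer to the same crawler. The URL is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Several User-agent lines in a row form one group sharing the rules below.
ROBOTS_TXT = """\
User-agent: BLEXBot
User-agent: AhrefsBot
User-agent: SemrushBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
for bot in ("BLEXBot", "AhrefsBot", "SemrushBot", "Googlebot"):
    print(bot, parser.can_fetch(bot, "https://example.com/"))
# BLEXBot False
# AhrefsBot False
# SemrushBot False
# Googlebot True
```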

#crawlers #webspiders #robotstxt

toot box · @cyborg

49 followers · 444 posts · Server gamers.rip

I'm having this wild experience where I recall being able to put a sort of #NOARCHIVE and/or #NOCACHE command in robots.txt, not just meta tags.

Was that deprecated when I wasn't looking, or should I just blame the #MandelaEffect? :eyes_squint:

#robotstxt #websitedesign #mandelaeffect #nocache #noarchive

Éamonn · @eob

225 followers · 76 posts · Server social.coop

#Media companies and #journalists, you can partially boycott #Twitter by adding the following to the robots.txt file on your website:

    User-agent: Twitterbot
    Disallow: /

This prevents Twitter from using your images in links to your articles.
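RFC 9309's grammar expects an `Allow`/`Disallow` path to begin with `/`; an empty `Disallow:` means "allow everything". A value such as `*` falls outside that grammar, so different parsers may read it differently. A minimal linter sketch in Python that flags such values; `lint_robots` is a hypothetical helper, not part of any library.

```python
def lint_robots(text: str) -> list[str]:
    """Return warnings for allow/disallow values that don't start with '/'."""
    warnings = []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and padding
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        # Empty values are valid (an empty Disallow allows everything).
        if key.lower() in ("allow", "disallow") and value and not value.startswith("/"):
            warnings.append(f"line {lineno}: {key} value {value!r} should start with '/'")
    return warnings

print(lint_robots("User-agent: Twitterbot\nDisallow: *\n"))
print(lint_robots("User-agent: Twitterbot\nDisallow: /\n"))  # []
```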

How to add a #RobotsTxt:

https://developers.google.com/search/docs/crawling-indexing/robots/create-robots-txt

#media #journalists #twitter #robotstxt

Mike Blazer 🇺🇦 · @MikeBlazer

531 followers · 508 posts · Server mastodon.social

I was checking video and image CDN hosts of several sites and found out that their robots.txt files return 404.

As per @johnmu:

"If the robots.txt file is unreachable, we'll see that as blocking crawling."

https://twitter.com/JohnMu/status/1435688745681014798

My question to John: if example.com/robots.txt returns "200 OK" but cdn84.video-image-12.com/robots.txt returns "404 Not Found", would images and videos of this website have problems ranking on Google Images and Google Videos?
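For context on the status codes: RFC 9309, the standard the crawler-commons release above implements, separates two failure cases. A 4xx response means robots.txt is "unavailable" and the crawler MAY crawl without restrictions. A 5xx response or network error means "unreachable" and the crawler MUST assume complete disallow. A sketch of that baseline mapping in Python. Redirect handling is deliberately omitted (the RFC asks crawlers to follow at least five consecutive redirects), and individual crawlers may document their own exceptions. `policy_for_status` is a hypothetical helper.

```python
from enum import Enum
from typing import Optional

class RobotsPolicy(Enum):
    PARSE = "parse the returned rules"
    ALLOW_ALL = "no restrictions"
    DISALLOW_ALL = "assume complete disallow"

def policy_for_status(status: Optional[int]) -> RobotsPolicy:
    """Map a robots.txt fetch result to RFC 9309 baseline behaviour.

    status is None when the request failed at the network level.
    3xx redirects must be followed before calling this (not handled here).
    """
    if status is None or 500 <= status <= 599:
        return RobotsPolicy.DISALLOW_ALL  # "unreachable"
    if 400 <= status <= 499:
        return RobotsPolicy.ALLOW_ALL     # "unavailable", e.g. 404
    if 200 <= status <= 299:
        return RobotsPolicy.PARSE
    raise ValueError(f"unhandled status {status}")

print(policy_for_status(404))  # RobotsPolicy.ALLOW_ALL
```

Under this baseline, a CDN host whose robots.txt returns 404 is treated as having no rules rather than as blocked; how a particular search engine ranks media from such a host is a separate question.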

#seo #cdn #google #404 #robotstxt

gaby_wald · @gaby_wald

70 followers · 16249 posts · Server framapiaf.org

#dev #blog "How Search Engines Work: Finding a Needle in a Haystack" #SearchEngines #Web #WWWeb #InterWeb #Google #Indexation #CloudComputing #DistributedSystems #DataBases #RobotsTXT #index ... https://dev.to/gbengelebs/how-search-engines-work-finding-a-needle-in-a-haystack-4lnp

#dev #blog #searchengines #web #wwweb #interweb #google #indexation #cloudcomputing #distributedsystems #databases #robotstxt #index

ijliao · @ijliao

299 followers · 6174 posts · Server g0v.social