Charlie · @cdp1337

180 followers · 878 posts · Server social.veraciousnetwork.com

davidochobits · @davidochobits

658 followers · 839 posts · Server mastodon.bitsandlinux.com

Mastodon: Fixing excessive Sidekiq memory usage

postmodern · @postmodern

1342 followers · 2020 posts · Server ruby.social

postmodern · @postmodern

1341 followers · 2012 posts · Server ruby.social

ITX Mike · @mspsadmin

143 followers · 1107 posts · Server msps.io

Brook Miles · @brook

819 followers · 1700 posts · Server sunny.garden

I've posted an update to my #Sidekiq tuning guide for small Mastodon servers.

Added a description of weighted queues.

Changed the estimated number of worker processes from one based on user counts to a rule of thumb based on jobs processed per day:

1 worker process (5 threads) for every 500k jobs/day

https://hub.sunny.garden/2023/07/08/sidekiq-tuning-small-mastodon-servers/
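The rule of thumb above can be turned into a quick estimate. This is a minimal sketch, not taken from the linked guide: the function names are hypothetical, and rounding up with a floor of one process is an assumption about how to apply the ratio.

```python
import math

# Rule of thumb from the post: 1 worker process (5 threads) per 500k jobs/day.
JOBS_PER_PROCESS_PER_DAY = 500_000
THREADS_PER_PROCESS = 5


def estimate_sidekiq_processes(jobs_per_day: int) -> int:
    """Estimate worker processes for a daily job volume.

    Assumption: round up, and always run at least one process.
    """
    if jobs_per_day < 0:
        raise ValueError("jobs_per_day must be non-negative")
    return max(1, math.ceil(jobs_per_day / JOBS_PER_PROCESS_PER_DAY))


def estimate_sidekiq_threads(jobs_per_day: int) -> int:
    """Total threads implied by the estimated process count."""
    return estimate_sidekiq_processes(jobs_per_day) * THREADS_PER_PROCESS


if __name__ == "__main__":
    for volume in (100_000, 500_000, 1_200_000):
        print(volume, estimate_sidekiq_processes(volume), estimate_sidekiq_threads(volume))
```

Treat the result as a starting point only; the daily job count would come from your own server's Sidekiq dashboard.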

BeAware :verified420: · @BeAware

366 followers · 2776 posts · Server social.beaware.live

Hmmm 🤔 #mastodon memory leak, or I just don't understand how things work. #Sidekiq seemingly can handle all the jobs quite easily, yet after a couple of days of my instance running without a restart, RAM usage grows quite high, talking 80% with #elasticsearch enabled on a 16 GB machine. Is that normal? Or does that mean there's a memory leak somewhere? Maybe I should spend a week figuring out how to run another instance and load balance, but I feel this shouldn't be necessary for a single-user instance...🤷🏻♂️

#mastodon #sidekiq #elasticsearch #fediverse

Steven Harman · @stevenharman

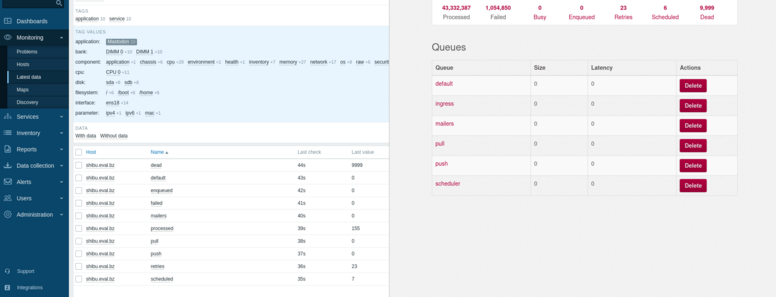

224 followers · 503 posts · Server ruby.social

As of a few hours ago, the last trickle of jobs were moved over to latency-based queues. We're slotting into one of:

* within_30_seconds

* within_5_minutes

* within_1_hour

* within_24_hours

We've hit a few snags - blowing those SLAs when slow jobs clog the works, starvation, being unable to detect and auto-scale the lower latency queues fast enough, etc… Those problems were always there, but we didn't "see" them and so didn't address/talk about them. Now we see them. And fix them.
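One way to picture the slotting into latency-based queues is a lookup from a job's latency tolerance to a queue name. This is a rough sketch only: the queue names come from the post, but the selection rule (pick the loosest queue whose bound still fits the tolerance) and the function name are assumptions, not the author's implementation.

```python
# Queue names from the post, paired with their latency bounds in seconds.
LATENCY_QUEUES = [
    ("within_30_seconds", 30),
    ("within_5_minutes", 5 * 60),
    ("within_1_hour", 60 * 60),
    ("within_24_hours", 24 * 60 * 60),
]


def queue_for(max_latency_seconds: float) -> str:
    """Pick the loosest queue whose bound does not exceed the job's tolerance.

    Assumption: a job tolerating 10 minutes goes to within_5_minutes,
    because within_1_hour would not guarantee it runs soon enough.
    """
    chosen = None
    for name, bound in LATENCY_QUEUES:  # ordered from tightest to loosest
        if bound <= max_latency_seconds:
            chosen = name
    if chosen is None:
        raise ValueError("no queue promises latency this low")
    return chosen


if __name__ == "__main__":
    print(queue_for(10 * 60))
```

Preferring the loosest acceptable queue keeps the tight queues free for jobs that really need them, which is one way to limit the starvation mentioned above.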

Mattias Schlenker · @mattias

726 followers · 3506 posts · Server toot.bike

Could someone help me with this? I have two #Mastodon instances (monitored with #Checkmk, but the software is irrelevant): one that was set up on 3.5 and updated all the way to 4.1, and one that was set up on 4.1. Both run Ubuntu 20.04 and were installed from source.

Now I'm trying to extract #Sidekiq monitoring data. This works on the "old" instance, but not on the new one. Can someone point me to the configuration difference that might cause this?

Boost welcome.

Here is my script and more details:

https://forum.checkmk.com/t/redis-sidekiq-questions/40563

Ryan Baumann · @ryanfb

939 followers · 685 posts · Server digipres.club

don't know who else needs to hear this but Redis has now finally updated their hosted Redis offering so that you can use Redis 7.2 (meaning you can now upgrade a #Rails app that uses hosted #Redis to #Sidekiq 7) https://redis.com/blog/introducing-redis-7-2/

Esther Schindler · @estherschindler

587 followers · 1255 posts · Server newsie.social

What do you do when you need to speed up #Mastodon? These benchmark tests explore the practicalities of using #Redis Enterprise Cloud to power the queues for #Sidekiq. https://thenewstack.io/optimizing-mastodon-performance-with-sidekiq-and-redis-enterprise/

Steven Harman · @stevenharman

222 followers · 482 posts · Server ruby.social

It's been years since I last used #DelayedJob. Like, the early 2010s or so? Back then it was a mix of DJ and #Resque. Then #Sidekiq came on the scene and I moved over pretty quickly.

Anyhow, the point is, I was under the impression that DelayedJob doesn't have a mechanism to recover from jobs that crash or get SIGKILL'd (like, think OOM or something). And to be fair, DJ itself doesn't. But the ActiveRecord backend does, though it's not really advertised. https://github.com/collectiveidea/delayed_job_active_record/blob/97f26a3e1b82b338cd8270aad988c75b82ea5c86/lib/delayed/backend/active_record.rb#L57

#delayedjob #resque #sidekiq #ruby #rails #opensource

qbi · @qbi

2084 followers · 3 posts · Server freie-re.de

So it looks like the move went well. #Sidekiq had a little hiccup, but now everything seems to be back in order.

FastRuby.io · @FastRuby

40 followers · 131 posts · Server ruby.social

🚀 Ruby upgrade tip: Fix those sneaky ArgumentErrors during your upgrade from Ruby 2 to Ruby 3.

Francois breaks it down on the blog: https://www.fastruby.io/blog/custom-deprecation-behavior.html?utm_source=Mastodon&utm_medium=Organic&utm_campaign=Blogpromo&utm_term=safeguardingfromdeprecation&utm_content=Gif&utm_id=

#rubyonrails #railsupgrade #activejob #sidekiq

Steven Harman · @stevenharman

221 followers · 469 posts · Server ruby.social

I remember when @getajobmike first announced the paid version of Sidekiq way back in the early 2010s (??? is that right? Has it been that long? Fuuuuudge). At first I was upset that we were losing something. But now, as a customer of Sidekiq Pro/Enterprise at multiple orgs, I think it's safe to say he's done a bang-up job and we have a much stronger product as a result. Thanks, Mike!

This toot was prompted by a change we wanted to make to `reliable_push!` (https://github.com/sidekiq/sidekiq/discussions/5909#discussioncomment-6607443).

Stefano Marinelli · @stefano

560 followers · 397 posts · Server mastodon.bsd.cafe

Good morning, friends of the #BSDcafe and #fediverse

I'd like to share some details on the infrastructure of BSD.cafe with you all.

Currently, it's quite simple (we're not many and the load isn't high), but I've structured it to be scalable. It's based on #FreeBSD, connected in both ipv4 and ipv6, and split into jails:

* A dedicated jail with nginx acting as a reverse proxy - managing certificates and directing traffic

* A jail with a small #opensmtpd server - handling email dispatch - didn't want to rely on external services

* A jail with #redis - the heart of the communication between #Mastodon services - the nervous system of BSDcafe

* A jail with #postgresql - the database, the memory of BSDcafe

* A jail for media storage. The 'multimedia memory' of BSDcafe. This jail is on an external server with rotating disks, behind #cloudflare. Aim is georeplicated caching of multimedia data to reduce bandwidth usage.

* A jail with Mastodon itself - #sidekiq, #puma, #streaming. Here is where all processing and connection management takes place.

All of them communicate through a private LAN (bridged), and the setup is ready for VPN connections to external machines, in case I want to move, replicate, or add services. The VPN connection can run over #zerotier or #wireguard, and I've also set up a bridge between machines through a #vxlan interface over #wireguard.

Backups are constantly done via #zfs snapshots and external replication on two different machines, in two different datacenters (and different from the production VPS datacenter).

#bsdcafe #fediverse #freebsd #opensmtpd #redis #mastodon #postgresql #cloudflare #sidekiq #puma #streaming #zerotier #wireguard #vxlan #zfs #sysadmin #tech #servers #itinfrastructure #bsd

Steven Harman · @stevenharman

221 followers · 459 posts · Server ruby.social

We have done a bunch of work to integrate our #GitHub formatted `CODEOWNERS` into our #Bugsnag, #Sidekiq, #Rails, PagerDuty, logging, #OpenTelemetry Tracing, etc… to the point that I think the bulk of it could be gemified, with extension Gems handling specific tooling integration. But then, that means the OSS maintainer gauntlet, and I’m just not sure I have the energy or bandwidth for that slog right now. If only I could talk @searls into doing this for me. 😂

#github #bugsnag #sidekiq #rails #opentelemetry

jcrabapple :virginia_badge: · @jcrabapple

1745 followers · 3066 posts · Server dmv.community

Just added two more 'push' queues to the DMV.Community #sidekiq.

Also, that 'ingress' queue really does not like to let go of RAM.

HousePanther · @housepanther

870 followers · 531 posts · Server mstdn.goblackcat.com

It's interesting, because I wish I knew which offending entry in the #mastodon #postgresql database is causing my #sidekiq ingress queue to deny everything from one of my internal subnets, 172.16.0.0/24. Do any experts have ideas?

#mastodon #postgresql #sidekiq