AskUbuntu · @askubuntu

232 followers · 1689 posts · Server ubuntu.social

꧁~DialUpCea~꧂ · @alcea

46 followers · 3616 posts · Server pb.todon.de

#Writing a #php #website #downloader seems to be a daunting task.

Neither #curl nor #wget, nor even the built-in fetch, gets the job done.

wget --mirror --convert-links --adjust-extension --page-requisites --no-parent https://vgmdb.net/album/131275

doesn't work.

And no matter what I do, it just won't #download the associated images.

#writing #php #website #downloader #curl #wget #download #wtf #brokencodealcea
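
One possible reason the images are skipped, assuming they are served from a different host than vgmdb.net: wget only fetches page requisites from other hosts when `--span-hosts` is given. Below is a minimal sketch of the same command with that flag added. `mirror_with_assets` and the asset host `media.example.net` are hypothetical names, so substitute the host that actually appears in the page's image URLs. If the images are loaded by JavaScript, this will not help, since wget does not execute scripts.

```shell
# Sketch only: the helper name and the example asset host are assumptions.
mirror_with_assets() {
  # $1: page URL
  # $2: comma-separated list of hosts wget is allowed to visit
  wget --mirror --convert-links --adjust-extension --page-requisites \
       --no-parent --span-hosts --domains="$2" "$1"
}

# Example call (not run here):
# mirror_with_assets https://vgmdb.net/album/131275 vgmdb.net,media.example.net
```

`--domains` keeps `--span-hosts` from wandering across every linked site; only the listed hosts are followed.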

AskUbuntu · @askubuntu

232 followers · 1723 posts · Server ubuntu.social

Kevin Karhan :verified: · @kkarhan

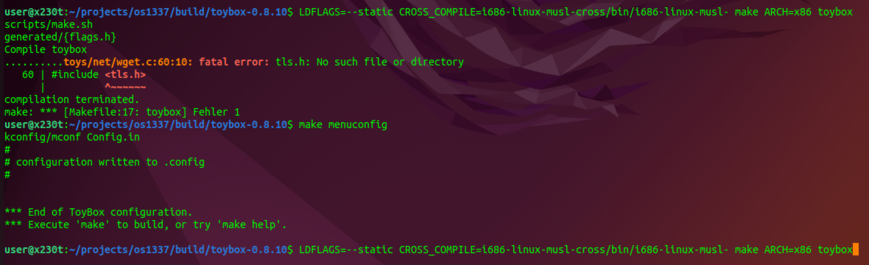

1478 followers · 106924 posts · Server mstdn.social

@bagder @BrodieOnLinux also because #wget as implemented in #Toybox doesn't support #SSL unless one fiddles with it or can use the pre-made binaries...

Kevin Karhan :verified: · @kkarhan

1458 followers · 104859 posts · Server mstdn.social

Sadly, #toybox doesn't like to build its #wget with #SSL / #TLS due to a missing header file...

https://github.com/OS-1337/OS1337/issues/1

Kevin Karhan :verified: · @kkarhan

1458 followers · 104859 posts · Server mstdn.social

@whitekiba I mean I don't look for computational efficiency...

OFC modern crypto needs a lot of processing power...

But I'd even consider doing a #ramdisk and making a script that #wget's #dropbear as a working hack...

Even if that makes it a sort-of "#netlive" [ #netinstaller meets #live #linux] workaround...

#Linux #Live #netinstaller #netlive #dropbear #wget #ramdisk

lbr59🇨🇵 · @lbr59

27 followers · 392 posts · Server mastodon.roflcopter.fr

Hey #mastodon #fedivers @sebsauvage, does anyone know how to save (#sauvegarder) a #flipboard page that uses infinite scroll (continuous loading)?

Using #curl, #wget, or any other means.

I have already tried #Firefox with an auto-scroll #script, but the memory consumption is astronomical and the result is not usable. Same with #python scrapers.

I know, I should have used #shaarli for my #bookmark from the start instead of a proprietary, commercial solution...

#mastodon #fedivers #sauvegarder #flipboard #curl #wget #firefox #script #python #shaarli #bookmark

Jordan Erickson · @lns

18 followers · 185 posts · Server fosstodon.org

Kevin Karhan :verified: · @kkarhan

1381 followers · 97074 posts · Server mstdn.social

@bagder also #curl is way more versatile and useful than #wget and is available as a #standalone #binary:

No need to fiddle with shit: #ItJustWorks!

#itjustworks #binary #standalone #wget #curl

Kevin Karhan :verified: · @kkarhan

1319 followers · 91237 posts · Server mstdn.social

@Llammissar @darthyoshiboy @benjedwards let's just say I love how #curl is a portable, single executable.

OFC one needs to tell curl explicitly to read its input from, or save its output to, a file, whereas #wget does that automagically.

I wished #GnuPG would be equally elegant and just allow something like:

gpg --encrypt -s unencrypted.txt -k pubkey.asc -o encrypted.txt.gpg

&

gpg --decrypt -s encrypted.txt.gpg -k privkey.asc -o unencrypted.txt
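
For the encryption half, recent GnuPG versions come fairly close: `--recipient-file` (available since GnuPG 2.1.14, as far as I recall) encrypts to a public key stored in a file without importing it first. Here is a minimal sketch; `encrypt_to_keyfile` is a hypothetical helper name, and signing (`-s`) is left out because it would still need a private key in the keyring. I am not aware of an equivalent key-file option for decryption, which still expects the private key to be imported.

```shell
# Sketch only: the helper name is an assumption, the gpg options are real.
encrypt_to_keyfile() {
  # $1: public key file (e.g. pubkey.asc)
  # $2: plaintext input file
  # $3: encrypted output file
  gpg --batch --yes --encrypt --recipient-file "$1" --output "$3" "$2"
}

# Example call (not run here):
# encrypt_to_keyfile pubkey.asc unencrypted.txt encrypted.txt.gpg
```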

Kevin Karhan :verified: · @kkarhan

1319 followers · 91237 posts · Server mstdn.social

@DarthYoshiBoy @benjedwards also #curl just works, and not just like #wget in pulling stuff but also #POST & #PUSH!

ricardo :mastodon: · @governa

1220 followers · 8160 posts · Server fosstodon.org

Kevin Karhan :verified: · @kkarhan

1202 followers · 80200 posts · Server mstdn.social

@YourAnonRiots I just have a portable #Windows binary of #wget accessible or use #certutil for that...

Teapot Ben · @teapot_ben

170 followers · 25 posts · Server fosstodon.org

Does anyone have any suggestions for open source software that can crawl a website, and then display it graphically as a hierarchy of pages including any links between the pages?

I'm thinking along the lines of #wget spider to crawl, and maybe #graphviz to create the diagram, but I'm not set on these.

I might be able to write some basic script to do this with plenty of time, but don't want to reinvent the wheel if someone has already done it. Suggestions welcome.
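
The #graphviz half of that idea can be sketched in a few lines, assuming the crawl step has already produced a list of "source target" link pairs, one per line. How to extract those pairs from a wget spider run is left open here. `edges_to_dot` and `edges.txt` are hypothetical names.

```shell
# Sketch only: converts a whitespace-separated edge list into Graphviz DOT.
edges_to_dot() {
  # stdin:  lines of the form "source target" (URLs or paths without spaces)
  # stdout: a directed graph in DOT syntax
  awk 'BEGIN  { print "digraph site {" }
       NF == 2 { printf "  \"%s\" -> \"%s\";\n", $1, $2 }
       END    { print "}" }'
}

# Example call (not run here), rendering to SVG with Graphviz:
# edges_to_dot < edges.txt | dot -Tsvg -o site.svg
```

Lines that do not contain exactly two fields are skipped, so a noisy edge list does not break the DOT output.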

Andres Salomon · @Andres4NY

672 followers · 5001 posts · Server social.ridetrans.it

Just heard my wife sing to herself (while working at her laptop), "🎶 #wget to the rescuuuuue🎶" 😀

intro · @intro

17 followers · 891 posts · Server mastodontech.de

I also think that with

wget https://russia.com/gutesw.sh | bash

this is not a good idea. I would download the file gutesw.sh and only then execute it. If you pipe straight into bash and a disconnect happens, the program stops partway through, and in the worst case the system is wrecked and no longer bootable. Or am I wrong about that?
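
The download-first approach described above could look roughly like this. It is a minimal sketch: `run_downloaded_script` is a hypothetical helper name, and it assumes wget exits with a non-zero status when the transfer fails. As a side note, plain `wget URL | bash` pipes nothing useful anyway, because wget saves to a file by default; the piped form needs `-O -`.

```shell
# Sketch only: run a remote script only after it was downloaded completely.
run_downloaded_script() {
  # $1: URL of the script
  tmp=$(mktemp) || return 1
  if wget -q -O "$tmp" "$1"; then
    # This is the point to inspect "$tmp" (for example with less) before trusting it.
    bash "$tmp"
    status=$?
  else
    echo "download failed, nothing was executed" >&2
    status=1
  fi
  rm -f "$tmp"
  return "$status"
}

# Example call (not run here):
# run_downloaded_script https://example.invalid/install.sh
```

Because bash only starts after wget has finished, a dropped connection leaves the system untouched instead of half-configured.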

AskUbuntu · @askubuntu

135 followers · 1887 posts · Server ubuntu.social

Mark Gardner :sdf: · @mjgardner

678 followers · 4277 posts · Server social.sdf.org

@leobm @Perl At minimum you need exactly one thing from #CPAN in dev to snapshot and pin all your upstream #Perl dependencies: https://metacpan.org/pod/Carton

At minimum you need exactly one thing in #CI and production: https://metacpan.org/pod/cpanm, which you can download standalone with #curl or #wget: https://metacpan.org/pod/App::cpanminus#Downloading-the-standalone-executable

Everything else is bells and whistles

Hrafn · @hth

183 followers · 534 posts · Server androiddev.social

AskUbuntu · @askubuntu

125 followers · 1869 posts · Server ubuntu.social

Wget always downloading index.html? #firefox #downloads #filesharing #wget #webapps
